Key Takeaways

Energy companies for AI data centers must deliver gigawatt-scale power solutions as global demand surges to 945 terawatt-hours by 2030.

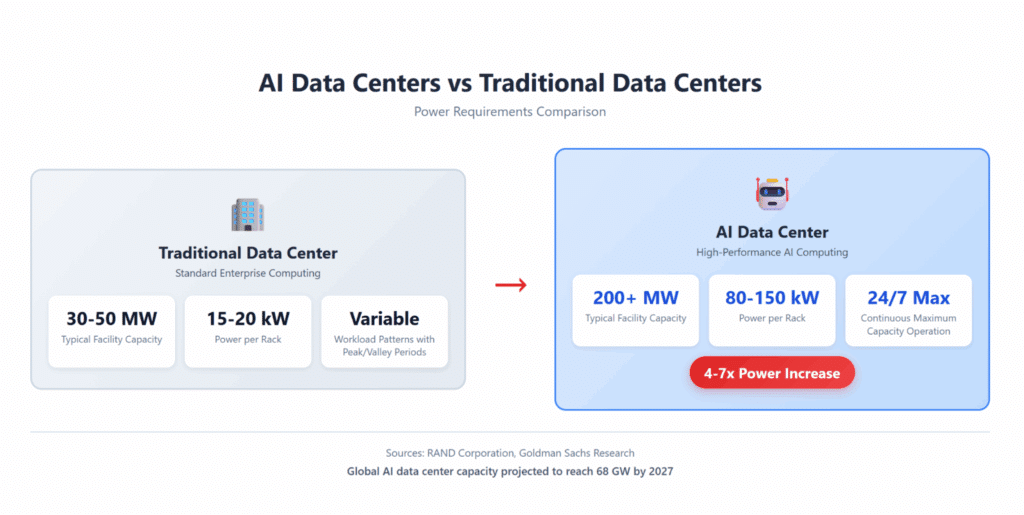

- AI facilities require 200+ megawatts versus 30 megawatts for traditional data centers, creating unprecedented infrastructure challenges

- Leading energy providers combine renewable generation, grid interconnection expertise, and rapid deployment timelines (18-24 months)

- Successful partnerships prioritize scalability, sustainability commitments, and proven technical capability in high-density environments

- Behind-the-meter solutions and hybrid energy strategies are becoming essential as grid connection delays stretch to five years

Organizations selecting the wrong energy partner risk costly project delays that could compromise their competitive position in the AI race.

What Makes Energy Companies for AI Data Centers Different?

The artificial intelligence boom has fundamentally transformed data center energy requirements. Energy companies for AI data centers now face entirely different challenges than traditional power providers, with hyperscale facilities demanding unprecedented capacity and reliability.

AI data centers consume far more electricity than their predecessors. Where conventional facilities might draw 30 megawatts of power, modern AI campuses require 200 megawatts or more. According to RAND Corporation research, global AI data centers could need 68 gigawatts of power capacity by 2027, close to California’s total power capacity.

This massive power appetite stems from the computational intensity of artificial intelligence workloads. Training sophisticated large language models demands thousands of specialized graphics processing units operating simultaneously for extended periods. Unlike traditional computing, which follows predictable usage patterns with peak and off-peak periods, AI infrastructure runs at sustained high load around the clock.

Energy providers also face technical challenges specific to these workloads. AI hardware generates extreme heat concentrations, with individual equipment racks drawing 80-150 kilowatts compared to 15-20 kilowatts in traditional environments. These densities push electrical distribution systems to their limits and demand sophisticated thermal management alongside power delivery.
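To make these figures concrete, the short Python sketch below works through the arithmetic using only the numbers quoted above (30 and 200 megawatt facilities, 20 and 80-150 kilowatt racks). It assumes, purely for illustration, that the entire facility budget reaches the racks and that the load runs continuously all year. Real facilities lose part of that budget to cooling and distribution, so the results are best read as upper bounds. The function names are hypothetical.

```python
HOURS_PER_YEAR = 8760  # non-leap year


def max_racks(facility_mw: float, rack_kw: float) -> int:
    """Upper bound on rack count if all facility power reached the racks."""
    return int(facility_mw * 1000 // rack_kw)


def annual_energy_twh(facility_mw: float, load_factor: float = 1.0) -> float:
    """Annual energy in terawatt-hours at a constant fraction of capacity."""
    return facility_mw * HOURS_PER_YEAR * load_factor / 1_000_000


if __name__ == "__main__":
    for rack_kw in (80, 150):
        print(f"200 MW at {rack_kw} kW/rack: up to {max_racks(200, rack_kw)} racks")
    print(f"30 MW at 20 kW/rack: up to {max_racks(30, 20)} racks")
    print(f"200 MW run continuously: {annual_energy_twh(200):.2f} TWh per year")
```

Under these assumptions the rack counts of the two facility types come out in the same range. The difference lies in how much power each rack draws, which is why density rather than floor space drives the electrical design.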

Why Are Companies Racing to Secure AI Data Center Power?

The stakes in the AI infrastructure race couldn’t be higher. Major technology companies have committed unprecedented capital to secure energy capacity. According to recent industry reports, leading hyperscalers are investing tens of billions of dollars in AI data center infrastructure expansion, with some companies allocating more than $80 billion for development through 2025.

This investment frenzy reflects a fundamental reality: power availability has become the primary constraint limiting AI development. Grid connection delays now stretch to five years for new data center projects in many markets. Organizations that cannot secure adequate power face the stark choice of either delaying their AI initiatives or relocating infrastructure to regions with better energy access.

The competitive implications are massive. Companies that secure reliable power capacity today position themselves to scale AI capabilities rapidly, while those without adequate energy partnerships risk falling behind as compute demands accelerate. This dynamic has transformed energy procurement from an operational consideration into a strategic imperative that directly impacts market position.

Geographic concentration intensifies these pressures. Nearly half of all U.S. data center capacity is clustered in just five regions, creating intense competition for limited grid resources. Traditional utility infrastructure was never designed to handle the rapid deployment of facilities requiring hundreds of megawatts of continuous power in concentrated locations.

How Do Energy Companies Deliver Power at AI Scale?

Grid-Connected Solutions

Traditional utility partnerships remain important for many AI data center developments, particularly in regions with robust transmission infrastructure. These arrangements typically involve power purchase agreements where data center operators contract for specific capacity allocations from regional grids.

Grid connections offer certain advantages, including access to diverse generation sources and existing transmission networks. However, they also present significant challenges in the AI era. The interconnection queue for new large-scale power users has grown dramatically, with some facilities facing wait times exceeding five years before they can receive adequate electricity.

Utilities serving AI data centers must upgrade transformers, distribution systems, and sometimes entire transmission corridors to handle the concentrated loads these facilities create. In Northern Virginia, utilities have temporarily paused new connections in some areas to address grid stability concerns caused by rapid data center expansion.

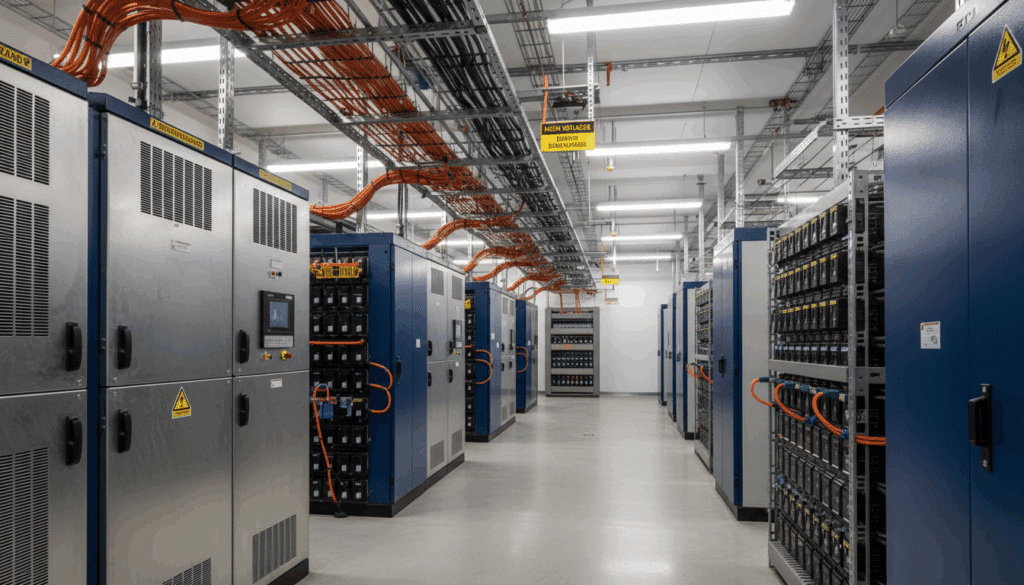

Behind-the-Meter Power Generation

Increasingly, leading energy providers are deploying behind-the-meter solutions that generate power directly on-site or immediately adjacent to computing facilities. This approach dramatically reduces interconnection timelines while providing greater energy independence and reliability.

Natural gas has emerged as a leading option for behind-the-meter generation. Major energy partnerships announced in 2025 include Chevron’s collaboration with GE Vernova to deliver up to four gigawatts of natural gas-powered generation specifically for AI data centers. These projects can become operational within 18-24 months, compared to the multi-year timelines required for grid connections.

Behind-the-meter systems offer additional benefits beyond speed. They eliminate exposure to wholesale electricity price volatility and provide resilience against grid outages that could interrupt mission-critical AI operations. For hyperscale operators running continuous training workloads, this reliability alone often justifies the infrastructure investment.

Hybrid and Renewable Integration

Sophisticated AI data center energy providers are developing hybrid solutions that combine multiple generation sources to balance reliability, sustainability, and cost-effectiveness while scaling alongside computing demands. These systems might pair solar or wind generation with battery storage and backup natural gas capacity to ensure uninterrupted power supply regardless of weather conditions or time of day.

According to Goldman Sachs Research, global data center power consumption is projected to increase 165% by 2030, driven primarily by AI workloads. The hybrid model recognizes that, at this scale of growth, renewable energy for AI data centers requires more than simply purchasing renewable energy credits; it demands physical infrastructure designed holistically from the ground up.

Energy storage technologies play increasingly critical roles in these hybrid systems. Advanced battery installations can smooth intermittency from renewable sources while providing backup capacity during grid disturbances. Some AI data center energy providers are exploring hydrogen storage as a longer-duration backup option that can support extended outages without emissions.
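The hybrid approach described above (solar or wind paired with battery storage and backup natural gas) can be sketched as a simple hourly dispatch rule. The Python below is a minimal illustration, not a production energy model: it assumes one-hour time steps, ignores conversion losses, and uses a fixed priority order of solar, then battery, then gas. The `Battery` class, the `dispatch_hour` function, and every number in the usage example are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Battery:
    capacity_mwh: float      # usable energy capacity
    max_power_mw: float      # charge/discharge limit
    stored_mwh: float = 0.0  # current state of charge


def dispatch_hour(load_mw: float, solar_mw: float, battery: Battery,
                  gas_capacity_mw: float) -> dict:
    """Serve one hour of load: solar first, then battery, then gas.

    Surplus solar charges the battery. Losses are ignored for simplicity.
    Returns the MW supplied by each source and any unserved load.
    """
    solar_used = min(solar_mw, load_mw)
    remaining = load_mw - solar_used

    # Charge the battery with surplus solar (1-hour step, so MW == MWh).
    surplus = solar_mw - solar_used
    charge = min(surplus, battery.max_power_mw,
                 battery.capacity_mwh - battery.stored_mwh)
    battery.stored_mwh += charge

    # Discharge the battery toward any load solar did not cover.
    discharge = min(remaining, battery.max_power_mw, battery.stored_mwh)
    battery.stored_mwh -= discharge
    remaining -= discharge

    # Gas covers whatever is left, up to its capacity.
    gas_used = min(remaining, gas_capacity_mw)
    remaining -= gas_used

    return {"solar": solar_used, "battery": discharge,
            "gas": gas_used, "unserved": remaining}


if __name__ == "__main__":
    # Hypothetical 24-hour solar output (MW), for illustration only.
    solar_profile = ([0] * 6
                     + [100, 200, 300, 400, 400, 400, 400, 300, 200, 100]
                     + [0] * 8)
    battery = Battery(capacity_mwh=800, max_power_mw=200)
    totals = {"solar": 0.0, "battery": 0.0, "gas": 0.0, "unserved": 0.0}
    for solar_mw in solar_profile:
        result = dispatch_hour(200, solar_mw, battery, gas_capacity_mw=200)
        for source, mw in result.items():
            totals[source] += mw
    print(totals)
```

Even a toy model like this shows why storage sizing matters: once the battery is empty overnight, the gas units carry the full load, so the backup capacity has to be sized for the whole facility rather than for a fraction of it.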

What Are the Leading Energy Company Categories?

Traditional Utility Providers

Established electric utilities continue serving AI data center markets, particularly where existing grid infrastructure can accommodate additional capacity. These traditional providers offer deep operational experience, regulatory expertise, and established relationships with state and local authorities that can streamline permitting processes.

However, utility timelines often don’t align with the urgency AI companies face. Regulated utilities must secure approvals from public utility commissions for major investments, conduct lengthy environmental reviews, and coordinate with multiple stakeholders before breaking ground. This measured pace conflicts with the compressed development schedules that characterize AI infrastructure deployment.

Integrated Energy Developers

A newer category of integrated energy developers has emerged specifically to address AI data center requirements and the limitations of traditional utility approaches. These integrated developers combine land acquisition, power plant development, and transmission buildout into comprehensive solutions tailored for high-density computing facilities.

These companies identify suitable sites with advantageous characteristics like proximity to existing transmission infrastructure, favorable regulatory environments, and access to fuel sources or renewable resources. They then develop turnkey energy campuses that co-locate power generation with data center infrastructure, eliminating many of the bottlenecks that plague conventional development approaches.

The integrated model offers compelling advantages for hyperscale operators. By bundling site selection, power development, and infrastructure delivery, these energy partners dramatically compress project timelines while providing greater cost certainty.

Natural Gas and Oil Majors

Large energy companies with extensive natural gas assets have recognized AI data centers as strategic growth opportunities, positioning themselves as hyperscale compute energy specialists. Major energy firms are leveraging their fuel supply networks, technical expertise, and financial resources to develop dedicated data center power solutions.

These companies bring significant advantages to AI infrastructure development. Their experience operating complex energy systems translates well to the high-reliability requirements of computing facilities. Their access to natural gas supplies enables them to offer integrated fuel and generation solutions with favorable economics and rapid deployment timelines.

Natural gas generation provides the continuous, high-capacity power that AI workloads demand. Modern combined-cycle gas turbines achieve impressive efficiency levels while offering flexibility to integrate carbon capture systems that can reduce emissions by more than 90 percent.

Renewable Energy Specialists

Specialized renewable energy companies are developing innovative approaches to power AI data centers sustainably. These firms focus on integrating solar, wind, and energy storage technologies specifically optimized for the unique demands of artificial intelligence computing.

According to Fortune reporting, companies are building solar energy systems designed to power AI data centers around the clock through advanced storage that can dispatch solar energy during nighttime hours. This addresses one of the fundamental challenges of renewable energy—intermittency—that has historically made solar and wind difficult foundations for mission-critical computing infrastructure.

Other renewable specialists focus on emerging technologies like enhanced geothermal systems that can deliver continuous baseload power without emissions. Next-generation geothermal can provide gigawatt-scale capacity suitable for large AI campuses.

Nuclear Power Providers

Nuclear energy has attracted renewed attention as a potential solution for AI data center power requirements. Both established nuclear operators and emerging small modular reactor developers are positioning themselves as AI data center energy providers offering carbon-free baseload electricity with decades of price stability.

Emerging nuclear technologies hold particular promise for AI applications. Compact reactors capable of producing up to 75 megawatts continuously could, individually or in groups, power AI campuses with dedicated, on-site nuclear generation that eliminates transmission losses and grid dependencies.

How Should Organizations Evaluate AI Data Center Energy Providers?

Technical Capability Assessment

Evaluating potential energy partners for AI data centers begins with a rigorous technical assessment. Organizations must verify that potential partners can actually deliver the power densities, reliability levels, and scalability required for high-performance computing workloads.

Critical technical considerations include electrical distribution architecture capable of handling 80-150 kilowatt rack densities, redundant power delivery systems that eliminate single points of failure, and cooling integration that works seamlessly with electrical infrastructure. The best energy companies for AI data centers implement sophisticated power conditioning and monitoring systems to maintain extremely tight tolerances.

Power quality matters tremendously for AI workloads. Voltage fluctuations, harmonic distortions, or frequency variations that might be tolerable in other applications can cause computing errors or hardware damage in GPU clusters.
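One common way to check a claim of "no single point of failure" on the generation side is N+1 sizing: install enough units to carry the load, plus one spare, so that losing any single unit still leaves the load covered. The sketch below illustrates that check in Python. It is a simplification that looks only at generating capacity (not switchgear, feeders, or cooling), and the function names and example unit sizes are hypothetical.

```python
import math


def units_required(load_mw: float, unit_mw: float, spares: int = 1) -> int:
    """Units needed to carry the load with `spares` extra units (N + spares)."""
    if load_mw <= 0 or unit_mw <= 0:
        raise ValueError("load and unit size must be positive")
    return math.ceil(load_mw / unit_mw) + spares


def survives_single_failure(load_mw: float, unit_sizes_mw: list[float]) -> bool:
    """True if the load is still covered after losing the largest unit."""
    if not unit_sizes_mw:
        return False
    return sum(unit_sizes_mw) - max(unit_sizes_mw) >= load_mw


if __name__ == "__main__":
    load = 200  # MW, the AI campus scale discussed in this article
    unit = 75   # MW per generating unit, an illustrative size
    n = units_required(load, unit)
    print(f"{n} units of {unit} MW give N+1 coverage for {load} MW")
    print("Survives one failure:", survives_single_failure(load, [unit] * n))
```

The same check can be repeated for each layer of the power path when reviewing a provider's design documents.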

Deployment Timeline and Scalability

Speed to market often determines competitive outcomes in AI infrastructure races. Organizations should carefully evaluate energy partners’ ability to deliver operational capacity within compressed timeframes while maintaining flexibility for future expansion.

Behind-the-meter solutions typically offer the fastest deployment paths, with natural gas installations potentially operational within 18-24 months. Grid-connected projects require longer timelines but might provide easier scalability if transmission infrastructure exists. Renewable projects fall somewhere between these extremes, with timelines varying based on storage requirements and regulatory environments.

Scalability provisions prove equally important. Initial AI deployments frequently expand as models grow more sophisticated and usage scales. Energy agreements should include clear frameworks for adding capacity without requiring complete renegotiation or starting from scratch with permitting and construction.

Sustainability and ESG Alignment

Environmental commitments have become non-negotiable for many organizations deploying AI infrastructure. Major technology companies have pledged to achieve carbon neutrality or operate on 100 percent renewable energy, creating requirements that energy partners must satisfy.

Evaluating sustainability credentials requires looking beyond marketing claims to examine actual generation sources, emissions profiles, and renewable energy certificates. Some arrangements that technically qualify as “renewable powered” through REC purchases still rely on fossil generation for actual electrons delivered to facilities.

Progressive AI data center energy providers are developing integrated sustainability solutions that deliver genuine emissions reductions. These might include direct renewable generation, energy storage systems that enable higher renewable penetration, or natural gas with carbon capture that achieves near-zero emissions while maintaining reliability.

Financial Stability and Risk Allocation

Energy partnerships for AI data centers represent massive, long-term commitments involving hundreds of millions or billions of dollars in infrastructure investment. Organizations must assess potential partners’ financial capacity to execute these projects and remain viable throughout multi-decade operational periods.

Financial evaluation should examine partners’ balance sheets, access to capital markets, and track records completing similar projects. Companies backed by established energy majors, infrastructure funds, or other well-capitalized sponsors generally present lower execution risk than startups dependent on single financing sources.
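The four evaluation areas above (technical capability, deployment timeline and scalability, sustainability, and financial stability) can be combined into a simple weighted scorecard. The Python below is one possible sketch of that idea, not a recommended methodology: the weights, the 0-10 scoring scale, and the provider names and scores are all placeholders invented for illustration.

```python
CRITERIA_WEIGHTS = {
    # Hypothetical weights; adjust to your organization's priorities.
    "technical": 0.35,
    "timeline": 0.25,
    "sustainability": 0.20,
    "financial": 0.20,
}


def weighted_score(scores: dict[str, float],
                   weights: dict[str, float] = CRITERIA_WEIGHTS) -> float:
    """Combine 0-10 criterion scores into one weighted average."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    total_weight = sum(weights.values())
    return sum(scores[name] * w for name, w in weights.items()) / total_weight


def rank_providers(candidates: dict[str, dict[str, float]]) -> list[tuple[str, float]]:
    """Return (provider, score) pairs sorted from best to worst."""
    ranked = [(name, weighted_score(s)) for name, s in candidates.items()]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Placeholder providers and scores, invented purely for illustration.
    candidates = {
        "Provider A": {"technical": 8, "timeline": 5,
                       "sustainability": 7, "financial": 9},
        "Provider B": {"technical": 7, "timeline": 9,
                       "sustainability": 6, "financial": 6},
    }
    for name, score in rank_providers(candidates):
        print(f"{name}: {score:.2f}")
```

A scorecard like this does not replace due diligence, but writing the weights down forces a team to agree on how much speed to market is worth relative to financial strength before comparing bids.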

Key Trends Reshaping Energy Companies for AI Data Centers

Geographic Diversification Beyond Traditional Hubs

Power constraints in established data center markets are driving geographic diversification toward regions with available energy capacity. States like Utah, South Dakota, and Wyoming are attracting AI infrastructure investment based on renewable resources, affordable power costs, and less congested grid systems.

This geographic shift creates opportunities for regional utilities and energy developers that might have previously played limited roles in data center markets. Local renewable resources, proximity to stranded power capacity, and state economic development incentives combine to make secondary markets increasingly attractive for scalable power solutions.

Integration of Advanced Cooling with Power Systems

Energy companies are increasingly integrating sophisticated cooling technologies directly with power infrastructure rather than treating them as separate systems. This holistic approach recognizes that thermal management consumes 30-40 percent of AI data center electricity and represents a critical component of overall energy strategy.

Direct liquid cooling systems that use water or specialized fluids to remove heat from processors offer dramatic efficiency improvements over traditional air cooling. These systems reduce energy consumption while enabling higher rack densities that improve computing efficiency.

Emergence of AI-Specific Power Standards

Industry organizations are working to develop standards and best practices specifically addressing AI data center energy requirements. These efforts aim to create common frameworks for measuring efficiency, defining reliability requirements, and establishing sustainability benchmarks appropriate for high-density computing environments.

Power usage effectiveness metrics traditionally used to evaluate data center efficiency don’t capture all relevant factors in AI environments. New approaches attempt to account for the full energy footprint including cooling, power distribution, and specialized infrastructure required for GPU clusters.
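For reference, power usage effectiveness (PUE) is conventionally defined as total facility energy divided by IT equipment energy. The sketch below shows what the 30-40 percent cooling share cited earlier would imply for PUE. It assumes that share is measured against total facility electricity and, by default, that cooling is the only overhead; the function name and the `other_overhead_share` parameter are hypothetical.

```python
def pue_from_overhead(cooling_share: float,
                      other_overhead_share: float = 0.0) -> float:
    """PUE implied when cooling and other overhead take given shares of total energy.

    PUE = total facility energy / IT equipment energy, so if overhead takes a
    fraction f of the total, IT equipment receives (1 - f) and PUE = 1 / (1 - f).
    """
    overhead = cooling_share + other_overhead_share
    if not 0 <= overhead < 1:
        raise ValueError("overhead share must be in [0, 1)")
    return 1.0 / (1.0 - overhead)


if __name__ == "__main__":
    for share in (0.30, 0.40):
        print(f"cooling share {share:.0%} -> PUE of about "
              f"{pue_from_overhead(share):.2f}")
```

Because PUE is a ratio, it says nothing about how much useful computing each kilowatt-hour of IT energy delivers, which is one reason it falls short for GPU-dense facilities.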

Getting Started with AI Data Center Energy Planning

Organizations beginning the journey toward AI infrastructure deployment should start energy planning early in their project development process. Power availability increasingly determines site selection and development feasibility, making energy strategy a foundational consideration rather than an operational detail to address after other decisions.

Begin by comprehensively assessing your power requirements across different timeframes. Initial capacity needs might differ substantially from anticipated demands three to five years out as AI capabilities scale. Understanding this growth trajectory enables you to select energy partners and negotiate agreements that accommodate expansion without requiring complete renegotiation.
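A rough way to frame this assessment is to project demand forward and see when it would outgrow a given contracted capacity. The Python sketch below assumes simple compound annual growth, which is only one of many possible growth models; the function names and all numbers in the usage example are hypothetical placeholders, not forecasts.

```python
from typing import Optional


def project_demand_mw(initial_mw: float, annual_growth: float,
                      years: int) -> list[float]:
    """Projected demand for year 0..years under compound annual growth."""
    return [initial_mw * (1 + annual_growth) ** year
            for year in range(years + 1)]


def first_year_exceeding(demand_mw: list[float],
                         contracted_mw: float) -> Optional[int]:
    """Index of the first year demand exceeds contracted capacity, else None."""
    for year, mw in enumerate(demand_mw):
        if mw > contracted_mw:
            return year
    return None


if __name__ == "__main__":
    # Illustrative inputs only: 50 MW today, 40% annual growth, 5-year horizon.
    demand = project_demand_mw(initial_mw=50, annual_growth=0.40, years=5)
    for year, mw in enumerate(demand):
        print(f"year {year}: {mw:.1f} MW")
    print("contract of 200 MW exceeded in year:",
          first_year_exceeding(demand, contracted_mw=200))
```

Running the projection with a few different growth rates gives a range of dates by which expansion capacity would need to be online, which is useful input when negotiating expansion terms.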

Conduct thorough market research on available energy options in your target geographies. Power availability, regulatory environments, and local energy economics vary dramatically across regions. Some markets offer rapid grid connections while others face multi-year queues. This market intelligence should inform both site selection and energy strategy.

Engage with multiple potential energy partners to understand the full range of available approaches. Traditional utilities, integrated developers, and specialized renewable providers each bring different capabilities and solve different problems. Evaluating diverse options helps you identify the optimal solution for your specific requirements.

Frequently Asked Questions

How much power does an AI data center require compared to traditional facilities?

AI data centers typically require 200+ megawatts of power capacity compared to 30-50 megawatts for traditional enterprise data centers. This roughly 4-7x increase stems from the massive GPU clusters required for AI model training and inference, which run at much higher power densities (80-150 kW per rack versus 15-20 kW for traditional servers). The continuous, 24/7 nature of AI workloads also eliminates the usage valleys that exist in traditional computing environments.

What energy sources are most common for powering AI data centers?

Natural gas currently leads as the most deployed energy source for new AI data centers due to its ability to provide reliable, continuous power with relatively rapid deployment timelines of 18-24 months. Renewable sources including solar and wind are increasingly important, particularly when combined with battery storage systems that address intermittency. Nuclear power is gaining renewed interest for its carbon-free baseload capacity, with both existing plants and emerging small modular reactor technologies attracting significant investment.

Why is behind-the-meter power generation becoming popular for AI facilities?

Behind-the-meter generation offers AI data centers several critical advantages. First, deployment timelines compress dramatically compared to grid connections that can require five years or more for interconnection approval and infrastructure buildout. Second, behind-the-meter systems provide energy independence and resilience against grid outages that could interrupt mission-critical AI training operations worth millions of dollars. Third, these solutions eliminate exposure to wholesale electricity price volatility while providing greater control over power quality and reliability.

How do energy companies for AI data centers address sustainability requirements?

Leading energy companies for AI data centers are developing hybrid solutions that balance reliability with environmental responsibility. These approaches might include direct renewable generation through on-site solar or wind facilities, power purchase agreements for off-site renewable projects, integration of energy storage systems that enable higher renewable penetration, or natural gas generation paired with carbon capture technology that can reduce emissions by more than 90 percent. The most sophisticated providers create integrated energy campuses where renewable generation co-locates with computing infrastructure for maximum efficiency.

What should organizations prioritize when selecting AI data center energy providers?

Organizations should prioritize several critical factors when evaluating potential energy partners. Technical capability to deliver high-density power infrastructure with 80-150 kW per rack capacity is foundational. Deployment timelines that align with competitive requirements typically favor behind-the-meter or integrated development approaches over traditional grid connections. Financial stability ensures partners can complete massive infrastructure investments and remain viable throughout multi-decade operational periods. Finally, sustainability alignment with corporate environmental commitments increasingly determines which partnerships can proceed, particularly for publicly traded technology companies with ESG disclosure requirements.

Partner with Proven AI Infrastructure Experts

The race to secure adequate power for AI operations will determine winners and losers in the coming decade of technological transformation. Organizations cannot afford to treat energy as an afterthought or delegate these critical decisions to teams without the specialized expertise this moment demands.

Companies that are building AI-ready data centers successfully recognize that integrated energy solutions eliminate the bottlenecks constraining traditional approaches. Hanwha Data Centers delivers comprehensive energy solutions specifically designed for hyperscale AI infrastructure. Our integrated approach combines site acquisition, renewable energy development, and power infrastructure delivery to create turnkey solutions.

With gigawatt-scale capacity under development and backing from one of the world’s leading energy companies, we provide the reliability and scalability AI operations require. Contact our team to discuss how we can power your AI infrastructure ambitions.