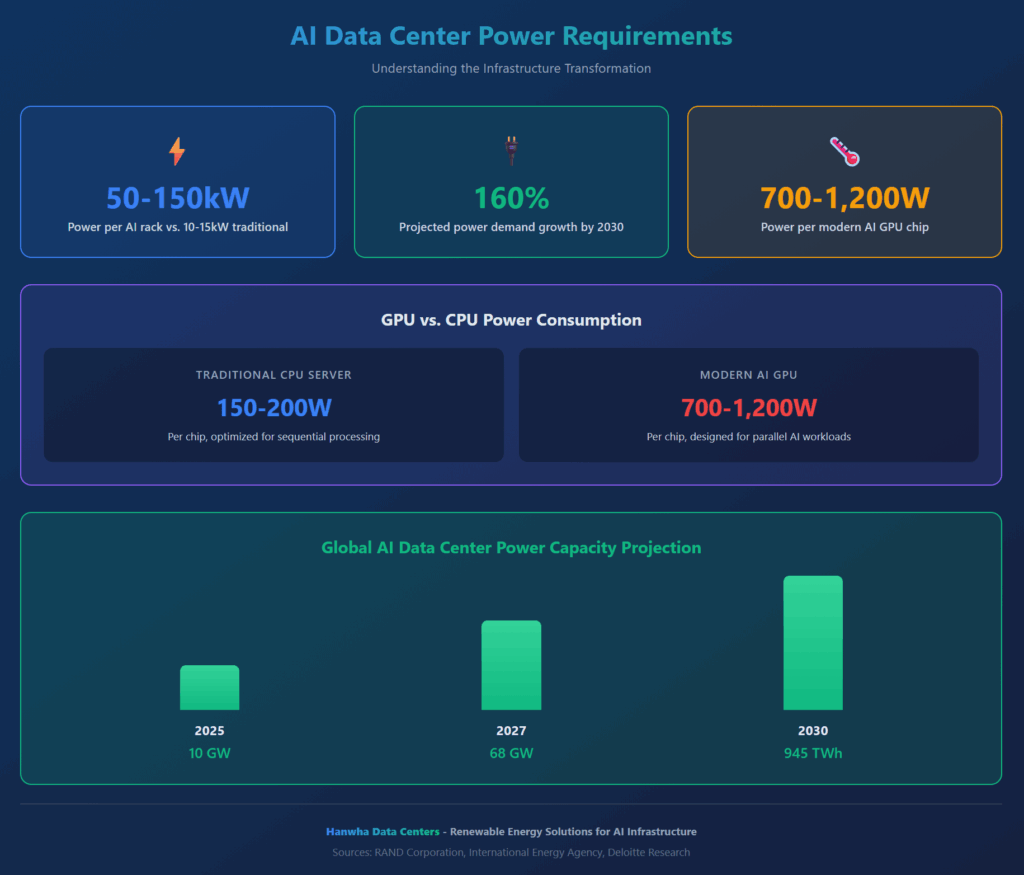

Key Takeaways: Power for AI data centers is driving unprecedented infrastructure transformation, with facilities requiring 50-150 kilowatts per rack compared to traditional 10-15 kilowatts.

- AI data center power demand is projected to surge 160% by 2030, with AI facilities requiring 68 gigawatts of capacity by 2027 compared to 10 gigawatts in 2025

- Modern GPUs consume 700-1,200 watts per chip while traditional CPUs use 150-200 watts, fundamentally reshaping AI compute energy usage

- Grid interconnection timelines extend 4-8 years, making renewable energy integration essential for rapid deployment

- Strategic infrastructure planning combining multiple energy sources, advanced cooling, and geographic distribution enables sustainable AI growth

Artificial intelligence is fundamentally transforming digital infrastructure. Data center operators and hyperscalers now face the challenge of delivering far more electricity to run massive GPU clusters, train deep learning models, and support real-time inference at unprecedented scale. According to RAND Corporation research, AI data centers could require 68 gigawatts of power capacity globally by 2027, close to the total power capacity of California’s grid.

Understanding power for AI data centers has become critical for anyone planning, operating, or investing in next-generation infrastructure. The question extends beyond raw megawatts to reliability, efficiency, sustainability, and speed to market. From renewable integration to energy storage and grid access, AI data centers represent a new era of energy strategy where foresight and resilience determine competitive advantage.

Why Do AI Workloads Require Special Infrastructure?

AI operations demand fundamentally different power characteristics than traditional computing. Where conventional data centers handle cyclical workloads with predictable peaks, AI facilities support continuous, maximum-capacity operations that stress infrastructure in entirely new ways.

Training a single large language model can consume hundreds of megawatt-hours of electricity. Unlike standard data processing tasks, these models rely on dense clusters of GPUs operating simultaneously, sometimes for weeks at a time. This continuous high-performance computing pushes infrastructure to its limits and creates significant heat output, further driving up energy needs through cooling requirements.
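The "hundreds of megawatt-hours" figure follows from simple arithmetic: number of GPUs times power per GPU times run time. A minimal sketch, assuming a hypothetical 1,000-GPU cluster at 700 watts per chip running for two weeks (the cluster size, duration, and function name are illustrative, not taken from any specific training run):

```python
def training_energy_mwh(num_gpus: int, watts_per_gpu: float,
                        hours: float, pue: float = 1.0) -> float:
    """Energy in MWh for GPUs running at constant power for `hours`.

    `pue` scales the IT energy up to whole-facility energy; the default
    of 1.0 counts GPU power only.
    """
    watt_hours = num_gpus * watts_per_gpu * hours * pue
    return watt_hours / 1_000_000  # Wh -> MWh


# Assumed scenario: 1,000 GPUs at 700 W each for 14 days, GPU power only.
gpu_only_mwh = training_energy_mwh(1_000, 700, hours=14 * 24)
print(f"{gpu_only_mwh:.1f} MWh")
```

Cooling and other facility overhead would push the total higher, which is why the optional `pue` multiplier is included.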

The International Energy Agency reports that global electricity consumption for data centers is projected to roughly double by 2030, reaching around 945 terawatt-hours, or just under 3% of total global electricity consumption. From 2024 to 2030, data center electricity consumption grows by around 15% per year, more than four times faster than the growth of total electricity consumption from all other sectors.
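The two IEA figures are consistent with each other: compounding roughly 15% per year over the six years from 2024 to 2030 gives a bit more than a doubling. A quick check, where the helper name is an assumption and the implied 2024 baseline is back-calculated from the arithmetic rather than quoted from the report:

```python
def compound_growth(rate: float, years: int) -> float:
    """Total growth factor after compounding `rate` for `years` years."""
    return (1 + rate) ** years


factor = compound_growth(0.15, 2030 - 2024)

# Baseline implied by the 945 TWh projection and the 15% rate
# (a derived number, not a reported one).
implied_2024_twh = 945 / factor

print(f"growth factor: {factor:.2f}x")
print(f"implied 2024 consumption: {implied_2024_twh:.0f} TWh")
```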

AI compute energy usage introduces unique planning challenges. Model training is non-linear and unpredictable in energy consumption, requiring operators to design infrastructure capable of dynamic scaling without compromising grid stability. Training sessions often require synchronization across thousands of GPUs, which amplifies both power draw and the consequences of any interruption.

Understanding High-Density Computing Demands

Traditional CPU-based systems are no longer sufficient for the parallel processing AI requires. Instead, hyperscalers deploy massive GPU clusters in configurations that demand upwards of 80 kilowatts per rack, more than five times the 10-15 kilowatts of a conventional data center rack. These high-density configurations necessitate equally robust power infrastructure to support startup surges, load balancing, and sustained compute over long durations.

The hardware specifications tell a compelling story. Research from Deloitte shows that historically, data centers relied mainly on CPUs running at roughly 150 to 200 watts per chip. GPUs for AI ran at 400 watts until 2022, while 2023 state-of-the-art GPUs for generative AI run at 700 watts, and 2024 next-generation chips are expected to run at 1,200 watts. The average power density is anticipated to increase from 36 kilowatts per server rack in 2023 to 50 kilowatts per rack by 2027.
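To see what those rack densities mean for floor planning, the sketch below counts the racks needed to host a given IT load at the 2023 and 2027 average densities cited above. The 100-megawatt facility size and the function name are assumptions chosen for illustration.

```python
import math


def racks_needed(it_load_mw: float, kw_per_rack: float) -> int:
    """Whole racks required to host `it_load_mw` at a given rack density."""
    return math.ceil(it_load_mw * 1000 / kw_per_rack)


# Assumed 100 MW of IT load at the two average densities from the text.
racks_2023 = racks_needed(100, 36)
racks_2027 = racks_needed(100, 50)
print(racks_2023, racks_2027)
```

Higher density means fewer racks for the same load, but each rack needs proportionally more power delivery and heat removal.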

How Do GPU and CPU Power Needs Differ?

The shift from CPU-based computing to GPU-accelerated infrastructure represents one of the most significant changes in AI datacenter architecture. Understanding the power differences between these processing units is essential for infrastructure planning.

Central processing units excel at sequential processing, executing instructions one after another with precision. Modern CPUs typically contain multiple cores—anywhere from 8 to 128 in server configurations—allowing some parallel processing. However, their architecture prioritizes versatility and complex instruction handling rather than massive parallelism.

Graphics processing units take a fundamentally different approach. Instead of a few powerful cores, GPUs contain thousands of smaller, specialized cores designed specifically for parallel operations. This architecture makes them exceptionally efficient for the matrix calculations and tensor operations that power artificial intelligence.

The power consumption reflects these architectural differences. While a dual-socket CPU server might draw 600-750 watts during operation, a single AI-optimized GPU can consume 700-1,200 watts. When you consider that a typical AI server contains eight GPUs, the total power requirement quickly escalates to 10-12 kilowatts per server, not including networking equipment, storage, and cooling infrastructure.
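The per-server arithmetic can be sketched as follows. Treating the 600-750 watt CPU server draw as the host overhead of a GPU server is an assumption made here for illustration, as is the function name.

```python
GPUS_PER_SERVER = 8


def server_power_kw(gpu_watts: float, host_watts: float) -> float:
    """Total draw of one GPU server in kW: eight GPUs plus the CPU host."""
    return (GPUS_PER_SERVER * gpu_watts + host_watts) / 1000


low_kw = server_power_kw(gpu_watts=700, host_watts=600)
high_kw = server_power_kw(gpu_watts=1200, host_watts=750)
print(f"{low_kw:.2f} kW to {high_kw:.2f} kW per server")
```

With 1,200-watt chips the result already exceeds 10 kilowatts, and fans, network cards, and power conversion losses (not modeled here) move it further toward the upper figure cited.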

Energy Efficiency in AI Workloads

Despite higher absolute power consumption, GPUs deliver superior energy efficiency for AI tasks. Research from the National Energy Research Scientific Computing Center measured results across high-performance computing and AI applications, finding that apps accelerated with GPUs saw energy efficiency rise 5x on average compared to CPU-only systems. One weather forecasting application logged gains of nearly 10x.

For AI inference workloads, the efficiency advantages become even more pronounced. GPU-accelerated systems can complete the same AI inference tasks while consuming 3-8x less energy than CPU-only configurations, directly translating to lower operational costs and reduced carbon footprint.

This efficiency matters enormously at scale. Organizations running thousands of servers for AI operations can reduce their total power consumption by megawatts simply by choosing optimized hardware configurations. The energy savings compound over time, affecting everything from utility costs to cooling requirements to carbon emissions.

What Infrastructure Components Drive Power Consumption?

Power for AI data centers encompasses far more than just compute hardware. A comprehensive understanding requires examining the entire infrastructure stack and how each component contributes to total energy requirements.

Computing Hardware and Accelerators

The GPU clusters that power AI workloads represent the largest single power draw in modern AI facilities. A fully populated AI server with eight high-performance GPUs, dual CPUs, networking cards, and storage can easily consume 12-15 kilowatts of continuous power. Multiply this across the servers in hundreds or thousands of racks, and total IT load quickly reaches tens or hundreds of megawatts.

Advanced AI chips continue pushing power envelopes higher. Next-generation processors are expected to exceed 1,400 watts per chip, requiring infrastructure capable of delivering and dissipating unprecedented power densities.

Cooling Systems and Environmental Control

Cooling accounts for a substantial portion of total data center energy consumption. Traditional air cooling systems, which were adequate for conventional data centers, struggle with AI-level heat densities. As rack power densities exceed 30-40 kilowatts, facilities must deploy advanced cooling technologies.

Liquid cooling solutions, including rear-door heat exchangers and direct-to-chip cooling, offer superior thermal management but require additional infrastructure investment. These systems can reduce cooling energy by 30-40% compared to traditional air cooling while enabling higher rack densities that maximize facility utilization.

Power Usage Effectiveness (PUE) measures total facility power divided by IT equipment power. Traditional data centers average 1.5-1.6 PUE, meaning they consume 50-60% additional power beyond IT loads for cooling and other infrastructure. Leading hyperscale operators achieve PUE as low as 1.1-1.2 through advanced design and operational practices.
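The PUE definition translates directly into code. A minimal sketch, assuming a hypothetical 10-megawatt IT load (the load figure and function names are illustrative):

```python
def pue(total_facility_kw: float, it_kw: float) -> float:
    """Power Usage Effectiveness: total facility power / IT equipment power."""
    if it_kw <= 0:
        raise ValueError("IT load must be positive")
    return total_facility_kw / it_kw


def overhead_kw(it_kw: float, pue_value: float) -> float:
    """Non-IT power (cooling, distribution losses, etc.) implied by a PUE."""
    return it_kw * (pue_value - 1)


it_load_kw = 10_000  # assumed IT load
traditional = overhead_kw(it_load_kw, 1.5)
hyperscale = overhead_kw(it_load_kw, 1.2)
print(f"overhead at PUE 1.5: {traditional:.0f} kW")
print(f"overhead at PUE 1.2: {hyperscale:.0f} kW")
```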

Power Distribution and Backup Systems

Electrical distribution within AI data centers requires substantial infrastructure. Medium-voltage distribution systems, transformers, switchgear, and power distribution units all consume space and energy while ensuring reliable power delivery to IT equipment.

Uninterruptible power supply systems and backup generators provide resilience against grid outages. While these systems remain idle most of the time, they represent essential infrastructure for maintaining uptime guarantees. The backup power capacity must match full IT load plus cooling requirements, effectively doubling the total power infrastructure footprint.

Critical Power Planning Strategies

Successfully deploying power for AI data centers requires strategic planning that addresses both immediate needs and future scalability. Several key strategies have emerged as best practices across the industry.

| Strategy | Key Benefit |
| --- | --- |
| Renewable Energy Integration | 30-50% cost reduction, sustainability compliance |
| Grid Interconnection Planning | Access to multi-gigawatt capacity |
| Geographic Distribution | Optimized power costs and availability |
| Advanced Cooling Systems | 30-40% cooling energy reduction |
| Modular Power Infrastructure | Rapid capacity scaling |

Securing Adequate Power Capacity

The most fundamental challenge facing AI data center development is securing sufficient power capacity. In major markets like Northern Virginia, available grid capacity has become severely constrained, with new facilities facing multi-year wait times for adequate connections.

Strategic site selection prioritizes locations with available transmission infrastructure and utility relationships that can support rapid power delivery. Some operators are exploring sites near major power generation facilities to minimize transmission constraints and reduce interconnection timelines.

Power purchase agreements provide long-term price stability and capacity guarantees. These contracts, typically spanning 10-25 years, allow data center operators to lock in pricing while securing dedicated capacity that won’t be affected by competing demand.

Integrating Renewable Energy Sources

Renewable energy for AI data centers has evolved from an environmental aspiration to a business necessity. Major technology companies have committed to operating on 100% renewable energy, with some already achieving this goal in specific regions.

On-site solar installations, wind power purchase agreements, and renewable energy certificates all contribute to reducing carbon footprint. However, the intermittent nature of renewables requires complementary strategies to ensure 24/7 reliability.

Energy storage systems provide crucial buffering capacity. Battery energy storage systems can absorb excess renewable generation during peak production and discharge during periods of high demand or low renewable output. This technology has become economically viable for utility-scale deployment, with costs declining rapidly over the past decade.
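The charge-on-surplus, discharge-on-deficit behavior described above can be sketched as a simple hourly loop. This is an illustrative model only: it assumes one-hour steps, ignores round-trip efficiency losses, and uses made-up generation and demand figures.

```python
def dispatch_battery(generation_kw, demand_kw, capacity_kwh, max_rate_kw):
    """Greedy hourly dispatch: store renewable surplus, discharge on deficit.

    Returns (grid_import_kwh_per_hour, final_state_of_charge_kwh).
    Assumes 1-hour steps and a lossless battery that starts empty.
    """
    soc = 0.0
    grid_import = []
    for gen, load in zip(generation_kw, demand_kw):
        surplus = gen - load
        if surplus >= 0:
            # Charge, limited by power rating and remaining headroom.
            soc += min(surplus, max_rate_kw, capacity_kwh - soc)
            grid_import.append(0.0)
        else:
            # Discharge, limited by power rating and stored energy.
            discharge = min(-surplus, max_rate_kw, soc)
            soc -= discharge
            grid_import.append(-surplus - discharge)
    return grid_import, soc


# Hypothetical four-hour window with a flat 100 kW load.
imports, final_soc = dispatch_battery(
    generation_kw=[120, 150, 60, 20],
    demand_kw=[100, 100, 100, 100],
    capacity_kwh=60,
    max_rate_kw=40,
)
print(imports, final_soc)
```

In this example the battery covers the whole shortfall in the third hour and part of it in the fourth, after which the grid supplies the remainder.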

Geographic Distribution and Load Balancing

Distributing AI workloads across multiple geographic regions provides several strategic advantages. Different regions offer varying power costs, renewable energy availability, and grid connection timelines. By strategically placing computing resources, operators can optimize both costs and sustainability.

Time-zone differences enable load shifting strategies. Training workloads that can tolerate some flexibility in scheduling can be distributed to regions where renewable energy is currently abundant or grid conditions are most favorable.

Climate considerations affect cooling requirements significantly. Facilities in cooler climates can leverage free cooling—using outside air for temperature management—reducing cooling energy consumption by 50% or more compared to warmer locations.

What Are the Most Effective Power Optimization Techniques?

Optimizing power consumption in AI data centers requires a multi-faceted approach addressing hardware selection, operational practices, and infrastructure design.

Power Capping and Workload Management

Intelligent power management systems can limit processor consumption to 60-80% of maximum capacity while maintaining acceptable performance. Research on sustainable AI operations suggests this approach can reduce carbon intensity by as much as 80-90%, while also extending hardware lifespan and reducing cooling requirements.

Dynamic workload scheduling allocates computing resources based on power availability and cost. During periods of abundant renewable energy or low electricity pricing, operators can increase utilization. When power is constrained or expensive, non-urgent workloads can be throttled or shifted.
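One simple form of this scheduling is to run a deferrable job in the cheapest hours of a planning window. The sketch below assumes the job can be paused and resumed between hours (for example through checkpointing) and uses hypothetical hourly prices; production schedulers would also weigh deadlines, carbon intensity, and grid signals.

```python
def schedule_deferrable(prices, hours_needed):
    """Pick the cheapest `hours_needed` hours; returns their indices in time order."""
    if hours_needed > len(prices):
        raise ValueError("not enough hours in the planning window")
    ranked = sorted(range(len(prices)), key=lambda hour: prices[hour])
    return sorted(ranked[:hours_needed])


# Hypothetical prices in $/MWh for a six-hour window.
hourly_prices = [90, 40, 35, 120, 60, 30]
run_hours = schedule_deferrable(hourly_prices, hours_needed=3)
cost_index = sum(hourly_prices[h] for h in run_hours)
print(run_hours, cost_index)
```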

Hardware Efficiency Improvements

Selecting the most efficient hardware for specific workloads delivers substantial energy savings. Not all AI tasks require the highest-performance processors. Matching hardware capabilities to workload requirements prevents over-provisioning and reduces unnecessary power consumption.

Next-generation AI processors incorporate power management features at the chip level. Dynamic voltage and frequency scaling allows processors to reduce power consumption during periods of lower utilization without requiring manual intervention.
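The leverage of voltage and frequency scaling comes from the textbook approximation for dynamic power in CMOS logic, P ≈ C · V² · f. The sketch below applies that approximation with illustrative values; it ignores static leakage, and the capacitance, voltage, and clock figures are assumptions rather than specifications of any real chip.

```python
def dynamic_power(c_eff: float, voltage: float, freq_hz: float) -> float:
    """Approximate dynamic power in watts: effective capacitance * V^2 * f."""
    return c_eff * voltage ** 2 * freq_hz


# Illustrative operating points (not taken from any datasheet).
nominal = dynamic_power(c_eff=1e-7, voltage=1.0, freq_hz=2.0e9)
scaled = dynamic_power(c_eff=1e-7, voltage=0.8, freq_hz=1.6e9)

ratio = scaled / nominal
print(f"power at 80% voltage and frequency: {ratio:.1%} of nominal")
```

Because voltage enters squared, lowering voltage and frequency by 20% each cuts dynamic power roughly in half under this model.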

Infrastructure Optimization

Raising data center operating temperatures from the traditional 68-72°F to 80-85°F can reduce cooling energy by 20-40% with little or no impact on equipment reliability. Modern IT equipment operates reliably at higher temperatures, and many manufacturers now guarantee performance at elevated ambient conditions.

Hot aisle containment and cold aisle containment strategies prevent mixing of hot exhaust air with cool supply air, improving cooling efficiency. These relatively simple infrastructure modifications can improve PUE by 0.1-0.3 points.
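A PUE improvement of a few tenths sounds small, but it compounds over every hour of the year. A rough sketch, assuming a constant 10-megawatt IT load running year-round (both the load and the function name are illustrative):

```python
HOURS_PER_YEAR = 8760


def annual_savings_mwh(it_load_mw: float, pue_before: float, pue_after: float) -> float:
    """Facility energy saved per year when PUE drops at constant IT load."""
    return it_load_mw * (pue_before - pue_after) * HOURS_PER_YEAR


# Assumed 10 MW IT load with PUE improved by 0.2 points.
saved = annual_savings_mwh(10, pue_before=1.5, pue_after=1.3)
print(f"{saved:,.0f} MWh saved per year")
```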

Addressing Grid Interconnection Challenges

Grid interconnection represents one of the most significant bottlenecks in AI infrastructure development. Understanding and navigating this challenge is essential for timely facility deployment.

Traditional interconnection processes were designed for distributed loads that gradually ramp up over years. AI data centers operate differently, requiring large blocks of power delivered quickly. This mismatch creates friction with utility planning processes and interconnection studies.

Multi-point grid connections provide redundancy and increase total available capacity. Rather than relying on a single connection point, advanced facilities establish connections to multiple substations or transmission lines. This approach improves reliability while potentially accelerating approval timelines by distributing load across infrastructure.

Utility partnerships that begin early in site selection provide competitive advantages. Working collaboratively with power providers to understand their infrastructure constraints and expansion plans allows operators to align facility development with grid capacity timing.

Building Sustainable AI Infrastructure

Sustainability considerations now drive strategic decisions about power for AI data centers. Regulatory requirements, corporate commitments, and customer expectations all push toward cleaner energy sources and reduced environmental impact.

Carbon-free energy goals require careful planning to ensure claims accurately reflect reality. Matching renewable energy generation with actual consumption on an hourly basis provides more rigorous environmental accounting than annual matching, which can obscure periods of fossil fuel consumption.
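The gap between the two accounting methods shows up clearly in a toy example. The sketch below uses four made-up hourly readings in which renewable generation equals consumption in total but arrives at the wrong times; the function names and data are assumptions for illustration.

```python
def annual_match(generation, load):
    """Share of load covered when only period totals are compared (capped at 100%)."""
    return min(1.0, sum(generation) / sum(load))


def hourly_match(generation, load):
    """Share of load covered when generation must coincide with load each hour."""
    matched = sum(min(g, l) for g, l in zip(generation, load))
    return matched / sum(load)


# Hypothetical data: flat 100 MWh load, renewables only in the middle hours.
load_mwh = [100, 100, 100, 100]
renewable_mwh = [0, 200, 200, 0]

print(f"period-total matching: {annual_match(renewable_mwh, load_mwh):.0%}")
print(f"hourly matching:       {hourly_match(renewable_mwh, load_mwh):.0%}")
```

Total-based accounting reports full coverage here, while hourly matching shows that half of the consumption occurred when no renewable generation was available.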

Transparency in energy reporting has become increasingly important. Stakeholders expect detailed disclosures about power sources, carbon emissions, and progress toward sustainability goals. Leading operators publish comprehensive sustainability reports with facility-level detail.

Preparing for Future Power Requirements

The trajectory of AI development suggests power requirements will continue increasing substantially. Planning for this growth requires anticipating both technological changes and infrastructure evolution.

Emerging AI architectures may alter power profiles significantly. Sparse models, mixture-of-experts approaches, and other innovations could reduce training costs while maintaining or improving performance. Infrastructure strategies should maintain flexibility to accommodate these evolving requirements.

Investment in grid infrastructure modernization will be essential to support continued AI growth. This includes both transmission capacity expansion and distribution upgrades in key markets. Public-private partnerships may emerge as vehicles for accelerating necessary infrastructure development.

Frequently Asked Questions

How much power does an AI data center consume compared to a traditional data center? AI data centers typically consume 3-5 times more power per square foot than traditional facilities. A single AI server rack requires 50-150 kilowatts compared to 10-15 kilowatts for conventional computing, driven primarily by dense GPU clusters that operate continuously at maximum capacity.

What is the biggest challenge in powering AI data centers? Grid interconnection timelines represent the most significant challenge, often extending 4-8 years in major markets. Securing adequate power capacity has become a critical bottleneck, with utilities struggling to meet the rapid deployment schedules and massive energy requirements that AI infrastructure demands.

Why are GPUs more power-hungry than CPUs? GPUs consume more absolute power because they contain thousands of processing cores operating in parallel, with modern AI chips drawing 700-1,200 watts compared to 150-200 watts for CPUs. However, for AI workloads, GPUs deliver 3-8x better energy efficiency per unit of computation.

How are companies addressing the sustainability concerns of AI power consumption? Leading organizations are integrating renewable energy through on-site solar, wind power purchase agreements, and energy storage systems. Strategic geographic distribution places computing resources in regions with abundant clean energy, while advanced cooling technologies reduce overall power consumption by 30-40%.

What power infrastructure is needed for a 100-megawatt AI facility? A 100-megawatt AI facility requires medium-voltage electrical distribution, multiple grid interconnection points, substantial transformer capacity, uninterruptible power supplies, backup generators, and advanced cooling infrastructure capable of dissipating the equivalent heat output of a small power plant.

Power Your AI Infrastructure with Confidence

The explosive growth of artificial intelligence has fundamentally transformed power requirements for modern data centers. Successfully navigating this landscape requires comprehensive strategies addressing capacity, reliability, sustainability, and scalability. From understanding the differences between GPU and CPU power needs to implementing advanced cooling and renewable integration, every decision impacts operational success.

Organizations that prioritize strategic energy planning will gain competitive advantages in speed to market, operational efficiency, and sustainability performance. The infrastructure choices made today will determine which companies can capitalize on AI opportunities over the coming decade. Hanwha Data Centers delivers the renewable energy solutions and infrastructure expertise needed to power next-generation AI operations. Contact us today to discuss how we can support your AI infrastructure requirements with reliable, sustainable power solutions.