Key Takeaways:

Edge data centers for AI are becoming essential infrastructure as real-time applications demand sub-10-millisecond response times that distant centralized facilities often cannot deliver.

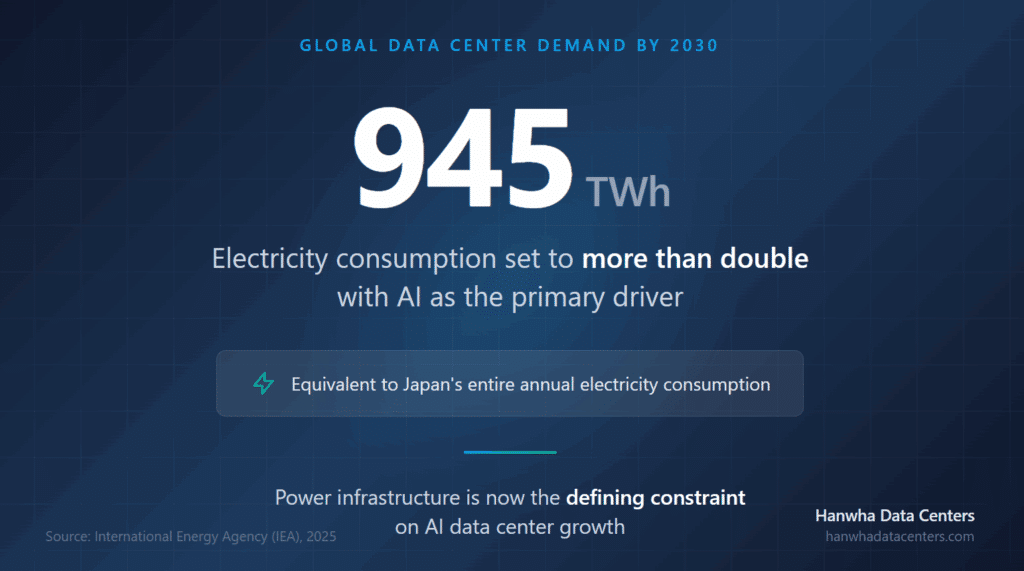

- Global data center electricity consumption is projected to more than double to around 945 TWh by 2030, with AI as the primary driver, according to the International Energy Agency (IEA)

- Power availability has replaced location convenience as the primary factor in edge site selection, with grid constraints reshaping deployment strategies

- Regional edge facilities operating at 5-10 MW provide the optimal balance between economic viability and latency reduction for distributed AI

- Organizations integrating edge strategies with comprehensive energy infrastructure will capture significant competitive advantages

Infrastructure leaders should evaluate edge computing as a strategic complement to centralized facilities, prioritizing power acquisition and rapid deployment capabilities.

The explosive growth of artificial intelligence is creating an infrastructure paradox. While massive training facilities dominate headlines, the competitive battleground is shifting toward distributed computing architectures that deliver AI-powered services at the speed users demand. Edge data centers for AI represent a fundamental rethinking of where computation happens and how power reaches those locations.

According to the International Energy Agency, global data center electricity consumption is set to more than double to around 945 TWh by 2030, roughly equivalent to Japan’s entire annual electricity usage. AI is the most important driver of this growth. Understanding how edge facilities fit into this evolving landscape has become essential for anyone planning next-generation digital infrastructure.

What Are Edge Data Centers for AI and Why Do They Matter?

Edge data centers position computing resources closer to where data is generated and consumed rather than routing everything through distant centralized facilities. For AI applications, this geographic distribution addresses a critical challenge: the physical limits of network latency.

The distinction between AI training and AI inference clarifies why edge matters. Training large language models is computationally intensive but tolerant of latency because it happens in batches over extended periods. Inference, which runs trained models to deliver real-time predictions, demands millisecond-level responsiveness. A self-driving vehicle cannot wait for a round trip to a data center hundreds of miles away.

Industry analysts note that edge facilities can deliver sub-10-millisecond latency to most users, while centralized cloud facilities often cannot match this performance because of the physical distance involved. For applications requiring instantaneous responses, closing this latency gap is non-negotiable.
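The latency gap is ultimately a matter of physics. As a rough sketch, assuming light in optical fiber travels at about 200,000 km/s (roughly two-thirds of its speed in a vacuum) and ignoring routing, queuing, and processing delays, the minimum round-trip time grows linearly with fiber path length. The function names and example distances below are illustrative assumptions, not figures from the analysts cited above.

```python
# Propagation-only lower bound on round-trip time (RTT).
# Assumption: light in optical fiber travels about 200,000 km/s (~2/3 of c).
# Real RTTs are higher: routes are rarely straight, and switching,
# queuing, and server processing all add delay on top of this floor.

FIBER_KM_PER_MS = 200.0  # 200,000 km/s expressed as km per millisecond


def min_rtt_ms(fiber_path_km: float) -> float:
    """Minimum RTT in ms for a one-way fiber path of fiber_path_km."""
    if fiber_path_km < 0:
        raise ValueError("fiber path length must be non-negative")
    return 2 * fiber_path_km / FIBER_KM_PER_MS


def max_path_km(rtt_budget_ms: float) -> float:
    """Longest one-way fiber path whose propagation RTT fits the budget."""
    if rtt_budget_ms < 0:
        raise ValueError("latency budget must be non-negative")
    return rtt_budget_ms * FIBER_KM_PER_MS / 2


if __name__ == "__main__":
    # Hypothetical paths: nearby edge site, regional hub, distant central campus.
    for km in (50, 500, 2000):
        print(f"{km:>5} km of fiber -> at least {min_rtt_ms(km):.1f} ms RTT")
    print(f"10 ms RTT budget -> at most {max_path_km(10):.0f} km of fiber")
```

Under these assumptions, propagation alone consumes a 10 ms round-trip budget at about 1,000 km of fiber, before any network or server overhead is added, which is why sub-10-millisecond targets push inference close to users.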

How Edge Facilities Differ from Hyperscale Data Centers

The contrast extends beyond simple size differences. Hyperscale campuses optimize for training workloads requiring massive parallel processing across thousands of GPUs. These facilities can scale to gigawatt-level power consumption.

Edge facilities typically operate in smaller power ranges and prioritize different performance characteristics. They must function reliably in diverse environmental conditions while maintaining connectivity standards comparable to those of larger facilities. The IEA reports that in advanced economies, data centers are projected to drive more than 20% of electricity demand growth through 2030, creating opportunities across both hyperscale and edge segments.

| Characteristic | Hyperscale Data Centers | Edge Data Centers for AI |
| --- | --- | --- |
| Typical Power Capacity | 50-500+ MW | 1-10 MW |
| Primary Workload | AI Training, Large-scale Computing | AI Inference, Real-time Analytics |
| Latency Requirements | Seconds to Minutes Acceptable | Milliseconds Required |
| Geographic Strategy | Centralized in Power-rich Regions | Distributed Near Users/Data Sources |
| Deployment Model | Custom-built Facilities | Often Modular/Prefabricated |

Why Is Power Infrastructure the Critical Constraint for Edge AI?

The primary bottleneck for edge data center expansion is power accessibility. This constraint has fundamentally reshaped how edge facilities are planned and deployed.

The IEA warns that unless significant investments are made in transmission infrastructure, approximately 20% of planned data center projects could face delays connecting to the grid. Traditional data center hubs have reached grid capacity limits, and utilities are imposing connection constraints that complicate new development.

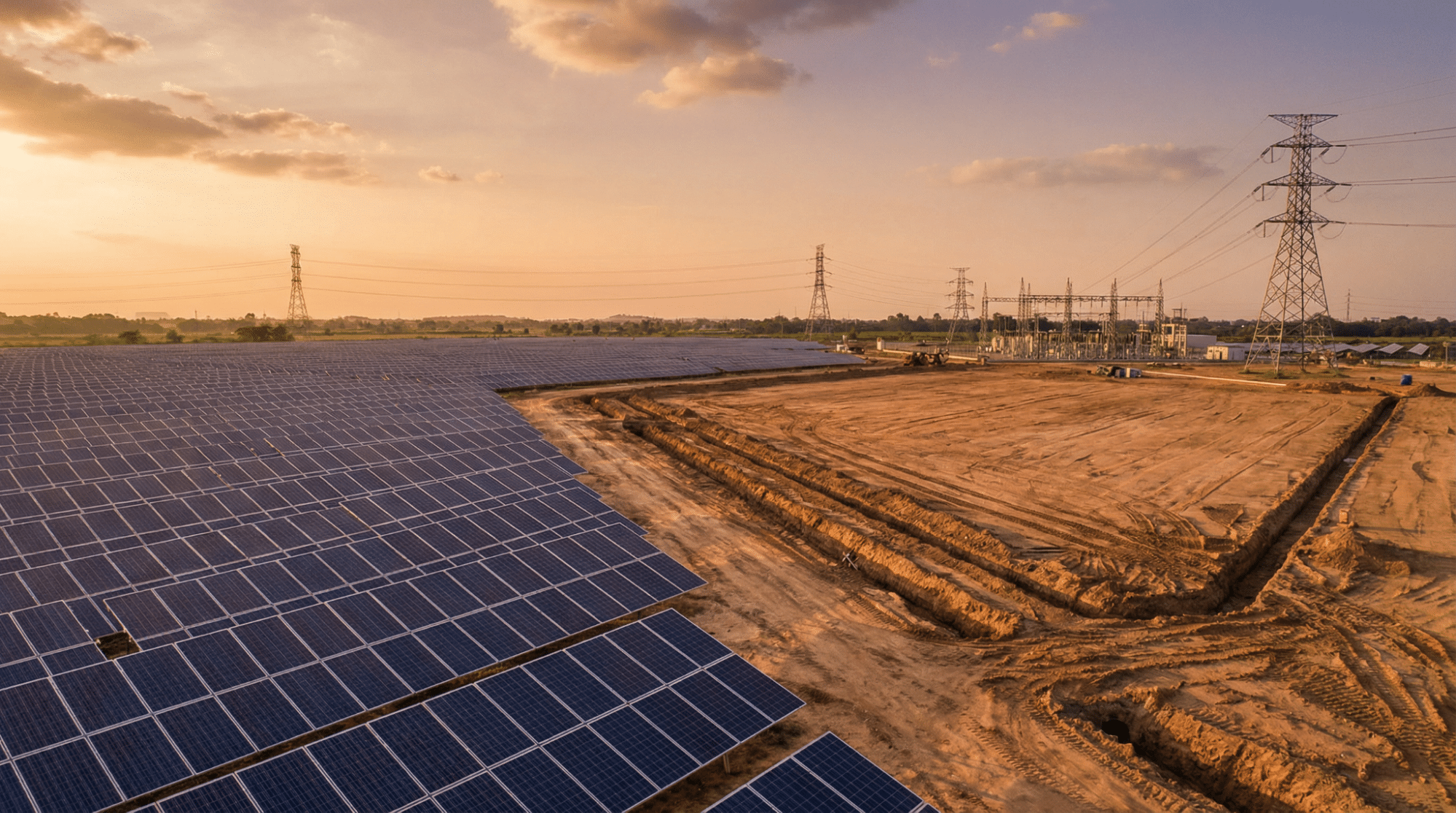

This power scarcity forces a strategic pivot. Rather than building where infrastructure already exists, edge deployment increasingly follows available power. States with abundant renewable resources and available grid capacity attract unprecedented investment as operators prioritize power acquisition over geographic convenience.

How Edge Facilities Address Grid Limitations

Edge data centers for AI offer structural advantages in navigating power constraints. Their smaller footprints make them easier to accommodate on existing grid infrastructure without triggering expensive transmission upgrades. Many utility systems can support edge-scale loads through existing substations and distribution networks.

The modular nature of edge facilities enables behind-the-meter solutions that bypass grid constraints. On-site renewable generation paired with battery storage can provide primary power while maintaining grid connections for backup. This approach transforms edge sites from grid consumers into potentially grid-supportive assets, aligning with trends toward renewable energy integration for data centers.

What Power Requirements Define Edge Data Centers for AI?

AI workloads demand fundamentally different power characteristics than traditional enterprise computing. Understanding these requirements is essential for planning edge infrastructure supporting real-world AI applications.

The IEA notes that AI-optimized servers, which rely heavily on GPUs and accelerators, are projected to account for nearly half of the net increase in global data center electricity consumption through 2030. Even at the edge, AI inference requires substantial power density compared to conventional computing, though less extreme than training clusters.

The operational profile also differs significantly. AI inference tends toward sustained, predictable loads rather than the variable patterns typical of general enterprise computing. This consistency makes demand easier to forecast, but it also means the facility needs reliable power around the clock, with little room for interruption.
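Because inference loads tend to be sustained, annual energy use can be estimated directly from average power draw. The sketch below assumes a constant average draw and an 8,760-hour year; the 5 MW and 10 MW values mirror the regional edge range cited in this article, while the 90% load factor is a hypothetical assumption. The same arithmetic puts the IEA's 945 TWh projection at roughly 108 GW of average global data center demand.

```python
# Minimal sketch: annual energy from a sustained average load.
# Assumptions: 8,760 hours per year (non-leap year) and a constant
# average draw equal to nameplate capacity x load factor.

HOURS_PER_YEAR = 8760


def annual_energy_gwh(capacity_mw: float, load_factor: float = 1.0) -> float:
    """Annual energy in GWh for a facility drawing capacity_mw * load_factor."""
    if not 0.0 <= load_factor <= 1.0:
        raise ValueError("load_factor must be between 0 and 1")
    return capacity_mw * load_factor * HOURS_PER_YEAR / 1000  # MWh -> GWh


def average_power_gw(annual_twh: float) -> float:
    """Average continuous power in GW implied by an annual energy total."""
    return annual_twh * 1000 / HOURS_PER_YEAR  # TWh -> GWh, then per hour


if __name__ == "__main__":
    # 5-10 MW regional edge range; the 0.9 load factor is hypothetical.
    for mw in (5, 10):
        print(f"{mw} MW at 90% load -> {annual_energy_gwh(mw, 0.9):.1f} GWh/yr")
    # IEA 2030 projection of ~945 TWh for data centers worldwide.
    print(f"945 TWh/yr -> about {average_power_gw(945):.0f} GW average")
```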

Essential Power Infrastructure Components

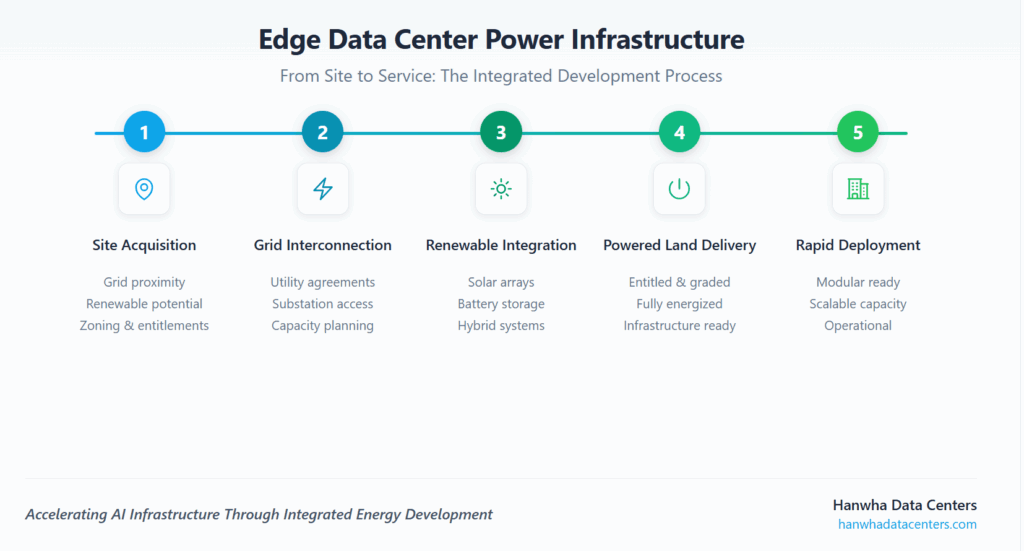

Successful edge deployment requires integrated attention to several infrastructure elements:

- Grid interconnection remains foundational even for facilities with on-site generation, requiring clear capacity commitments from utility providers

- On-site generation increasingly serves as primary power, with solar installations supplying a significant share of daily energy requirements in favorable locations

- Energy storage bridges gaps between variable generation and continuous AI operation while enabling participation in grid services

The combination of generation, storage, and grid connection creates resilient power ecosystems appropriate for mission-critical edge workloads. Organizations partnering with energy campus developers can accelerate deployment by integrating these components from project inception.
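To make the storage role concrete, the sketch below estimates the battery capacity needed to carry a flat load through a period without solar output. The function name, the 90% usable fraction, and the 5 MW, 4-hour scenario are hypothetical assumptions chosen for illustration; real sizing also accounts for conversion losses, battery degradation, and how much of the gap the grid connection is expected to cover.

```python
# Hypothetical sizing sketch: battery energy needed to carry a constant
# load through a period with no on-site generation (e.g. evening hours).
# Assumptions: the load is flat, and only usable_fraction of nameplate
# capacity may be discharged (to respect depth-of-discharge limits).


def battery_nameplate_mwh(load_mw: float, gap_hours: float,
                          usable_fraction: float = 0.9) -> float:
    """Nameplate MWh required to cover load_mw for gap_hours."""
    if load_mw < 0 or gap_hours < 0:
        raise ValueError("load and duration must be non-negative")
    if not 0.0 < usable_fraction <= 1.0:
        raise ValueError("usable_fraction must be in (0, 1]")
    return load_mw * gap_hours / usable_fraction


if __name__ == "__main__":
    # Hypothetical 5 MW edge site bridging a 4-hour gap in solar output.
    print(f"{battery_nameplate_mwh(5, 4):.1f} MWh nameplate")
```

Even this simplified estimate shows why storage is usually paired with a grid connection: covering a full night rather than a few hours multiplies the required capacity accordingly.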

Where Should Edge Data Centers for AI Be Located?

Site selection for edge facilities balances competing priorities that differ substantially from traditional data center development. The optimal locations combine power availability, network connectivity, and proximity to served populations.

Power-first strategies dominate current edge planning. Available grid capacity, proximity to existing substations, and renewable generation potential now outweigh factors like tax incentives that traditionally influenced site selection. Markets with abundant clean energy resources attract edge investment even when they lack established technology clusters.

Network topology influences edge placement for latency-sensitive applications. Effective deployment often targets major network exchange points or follows fiber backbone routes to minimize hops between edge facilities and central infrastructure.

| Site Selection Factor | Traditional Approach | AI-Focused Edge Approach |
| --- | --- | --- |
| Primary Driver | Tax Incentives, Labor Costs | Power Availability, Grid Capacity |
| Secondary Factors | Proximity to Tech Clusters | Renewable Energy Potential |
| Infrastructure Focus | Existing Connectivity | Integrated Energy Development |
| Timeline Priority | Cost Optimization | Speed to Deployment |
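One way to operationalize a power-first strategy is a weighted score in which grid capacity and renewable potential carry most of the weight. The sketch below is purely illustrative: the factor names, the 0-10 rating scale, the weights, and the candidate sites are hypothetical assumptions, not a published site-selection methodology.

```python
from dataclasses import dataclass

# Hypothetical weights reflecting a power-first ranking: power-related
# factors dominate, while tax incentives play only a minor role.
WEIGHTS = {
    "grid_capacity": 0.40,
    "renewable_potential": 0.25,
    "network_proximity": 0.20,
    "speed_to_deploy": 0.10,
    "tax_incentives": 0.05,
}


@dataclass
class Site:
    name: str
    ratings: dict[str, float]  # each factor rated 0-10 (hypothetical scale)


def score(site: Site) -> float:
    """Weighted sum of factor ratings; unrated factors count as 0."""
    return sum(w * site.ratings.get(f, 0.0) for f, w in WEIGHTS.items())


def rank(sites: list[Site]) -> list[Site]:
    """Return sites sorted from highest to lowest score."""
    return sorted(sites, key=score, reverse=True)


if __name__ == "__main__":
    candidates = [
        Site("metro_incentive_zone", {"grid_capacity": 2, "renewable_potential": 3,
                                      "network_proximity": 9, "speed_to_deploy": 4,
                                      "tax_incentives": 10}),
        Site("powered_land", {"grid_capacity": 9, "renewable_potential": 8,
                              "network_proximity": 6, "speed_to_deploy": 8,
                              "tax_incentives": 4}),
    ]
    for s in rank(candidates):
        print(f"{s.name}: {score(s):.2f}")
```

With these weights, a site with strong grid capacity outranks one with generous tax incentives and better network proximity, mirroring the shift shown in the table.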

How Can Organizations Deploy Edge Infrastructure Rapidly?

Speed to deployment has become a critical differentiator as organizations race to capture AI opportunities. Traditional construction timelines are increasingly incompatible with market dynamics that reward rapid deployment.

Prefabricated modular solutions have emerged as the dominant approach for accelerated edge deployment. Factory-built units containing complete power, connectivity, and environmental systems deploy faster than stick-built alternatives while achieving comparable reliability. The standardization inherent in modular approaches also reduces cost and simplifies operations across distributed networks.

Energy campus models that integrate site preparation, power infrastructure, and connectivity development offer another acceleration path. Rather than treating power as a utility service to request, this approach treats energy infrastructure as part of the development scope. Organizations partnering with integrated digital infrastructure providers compress timelines by parallelizing traditionally sequential work.

Deployment Acceleration Factors

Organizations pursuing rapid edge deployment should prioritize:

- Pre-positioned land with existing utility easements and favorable zoning

- Established relationships with utilities in target markets

- Standardized designs with regulatory pre-approval in relevant jurisdictions

- Supply chain commitments for critical equipment with extended lead times

- Operational frameworks enabling remote management of distributed facilities

What Role Does Edge Computing Play in the Future of Data Centers?

The relationship between edge and centralized infrastructure is complementary rather than competitive. Effective AI infrastructure increasingly relies on hybrid architectures positioning workloads optimally across distributed resources.

Training workloads will remain concentrated in massive centralized facilities where power density and parallel processing capabilities justify investment. Industry analysts project that most AI model training will continue in cloud-scale data centers marshaling the computational resources these workloads demand.

Inference workloads, however, are migrating toward the edge as AI applications become embedded in real-time systems. This shift has profound implications for how organizations plan and invest in digital infrastructure.
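In practice, a hybrid architecture comes down to a placement rule. The sketch below assumes one simple policy: training jobs run centrally, and an inference workload moves to the edge only when the round trip to the central facility would exceed its latency budget. The `Placement` and `Workload` types, the `place` function, and the RTT figures are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Placement(Enum):
    EDGE = "edge"
    CENTRAL = "central"


@dataclass
class Workload:
    name: str
    kind: str                 # "training" or "inference" (hypothetical labels)
    latency_budget_ms: float  # response-time target for the workload


def place(w: Workload, central_rtt_ms: float, edge_rtt_ms: float) -> Placement:
    """Pick a location under a simple latency-first policy (hypothetical)."""
    if w.kind == "training":
        # Batch training tolerates latency and needs centralized scale.
        return Placement.CENTRAL
    if central_rtt_ms <= w.latency_budget_ms:
        # The central facility is already fast enough for this workload.
        return Placement.CENTRAL
    if edge_rtt_ms <= w.latency_budget_ms:
        # Only the nearby edge site meets the latency target.
        return Placement.EDGE
    raise ValueError(f"{w.name}: no site meets a {w.latency_budget_ms} ms budget")


if __name__ == "__main__":
    # Hypothetical RTTs: 40 ms to a distant central campus, 5 ms to a metro edge site.
    jobs = [
        Workload("model_training", "training", latency_budget_ms=60_000),
        Workload("chat_assistant", "inference", latency_budget_ms=200),
        Workload("vision_quality_check", "inference", latency_budget_ms=10),
    ]
    for job in jobs:
        print(job.name, "->", place(job, central_rtt_ms=40, edge_rtt_ms=5).value)
```

Under this policy only latency-critical inference lands at the edge; a fuller policy could also weigh the data-sovereignty and bandwidth factors discussed below.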

Emerging Trends Shaping Edge AI Infrastructure

Several trends accelerate the shift toward distributed AI infrastructure:

Agentic AI applications operating autonomously require consistent low-latency access to inference capabilities. As these systems become prevalent, edge infrastructure becomes essential rather than optional.

Regulatory requirements around data sovereignty push processing closer to data sources. Edge facilities processing data locally before transmitting aggregated results help organizations comply with geographic restrictions.

Bandwidth economics continue favoring local processing for high-volume data streams. Video analytics, sensor fusion, and real-time monitoring generate data volumes impractical to transmit to centralized facilities.
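The bandwidth argument is easy to quantify. The sketch below converts continuous video bitrates into daily data volume and sustained uplink demand, assuming a hypothetical site with 200 cameras each streaming at a constant 4 Mbps; the camera count and bitrate are illustrative assumptions, not measurements.

```python
# Sketch: daily data volume from continuous video streams.
# Assumptions: constant bitrate per camera; decimal units (1 TB = 10**12 bytes).

SECONDS_PER_DAY = 86_400


def daily_volume_tb(cameras: int, mbps_per_camera: float) -> float:
    """Terabytes generated per day by cameras streaming at a fixed bitrate."""
    bits_per_day = cameras * mbps_per_camera * 1e6 * SECONDS_PER_DAY
    return bits_per_day / 8 / 1e12  # bits -> bytes -> terabytes


def sustained_uplink_mbps(cameras: int, mbps_per_camera: float) -> float:
    """Uplink bandwidth needed to ship every raw stream to a central facility."""
    return cameras * mbps_per_camera


if __name__ == "__main__":
    # Hypothetical site: 200 cameras at 4 Mbps each.
    print(f"{daily_volume_tb(200, 4):.2f} TB/day of raw footage")
    print(f"{sustained_uplink_mbps(200, 4):.0f} Mbps sustained uplink")
```

Analyzing the footage at an edge facility and sending only detections or aggregated results upstream avoids provisioning that sustained uplink around the clock.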

Frequently Asked Questions

What is the difference between edge computing and edge data centers?

Edge computing refers to the processing paradigm of handling data near its source rather than in centralized facilities. Edge data centers are the physical infrastructure enabling edge computing at scale, providing the power, connectivity, and reliability required for enterprise and AI workloads exceeding what distributed devices can handle independently.

How much power does an edge data center typically require?

Edge data centers for AI typically operate in the 1-10 MW range, with regional facilities commonly at 5-10 MW. This is substantially smaller than hyperscale facilities, which often exceed 100 MW. AI workloads within edge facilities demand higher power density per rack than traditional enterprise computing.

Can edge data centers operate on renewable energy?

Yes, edge data centers can achieve high renewable energy utilization through combinations of on-site generation and grid-sourced clean power. Facilities in favorable locations can derive a significant share of their energy from on-site solar paired with battery storage, while grid connections provide reliability. This approach often enables faster deployment by reducing dependence on utility interconnection timelines.

What industries benefit most from edge data centers for AI?

Industries requiring real-time AI inference see the greatest benefits. This includes autonomous vehicles and transportation, manufacturing with AI-driven quality control, healthcare with real-time diagnostics, retail with computer vision, and telecommunications deploying AI at the network edge.

Build Your Edge AI Infrastructure Strategy Today

The convergence of AI demand, power constraints, and latency requirements creates unprecedented opportunities for organizations approaching infrastructure strategically. Edge data centers for AI are not simply smaller versions of traditional facilities but a distinct infrastructure category with unique requirements.

Success requires partners who understand both energy infrastructure challenges and specific AI workload demands. The ability to acquire suitable sites, develop power infrastructure rapidly, and integrate renewable generation distinguishes capable infrastructure developers from those offering undeveloped parcels.

Hanwha Data Centers brings deep expertise in energy campus development, renewable integration, and the infrastructure foundations that enable rapid deployment at scale. With backing from a Fortune Global 500 company and leadership drawn from hyperscale operators, Hanwha delivers powered land solutions meeting demanding AI requirements. Connect with Hanwha Data Centers to explore comprehensive development solutions.