Key Takeaways

AI adoption is accelerating faster than energy infrastructure can expand, making power availability the single biggest constraint for enterprises planning AI deployments.

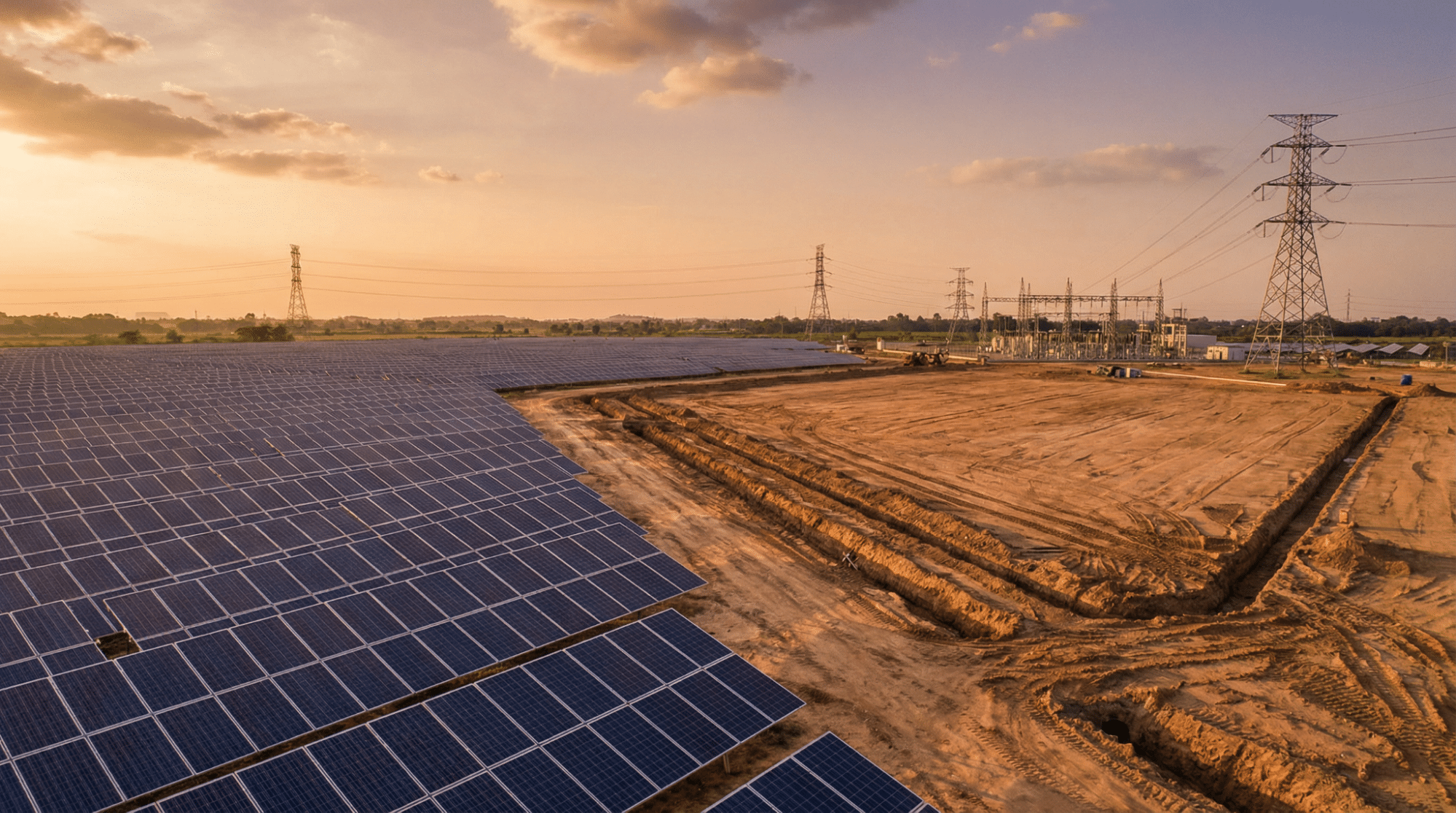

- Global data center power demand is projected to grow 165% by 2030 compared with 2023 levels, with AI workloads driving the majority of this surge

- Grid interconnection delays now stretch to five years or longer in high-demand markets, forcing organizations to rethink traditional utility-dependent approaches

- Enterprises that integrate energy planning into their AI strategy from day one will gain decisive competitive advantages over those scrambling to secure power later

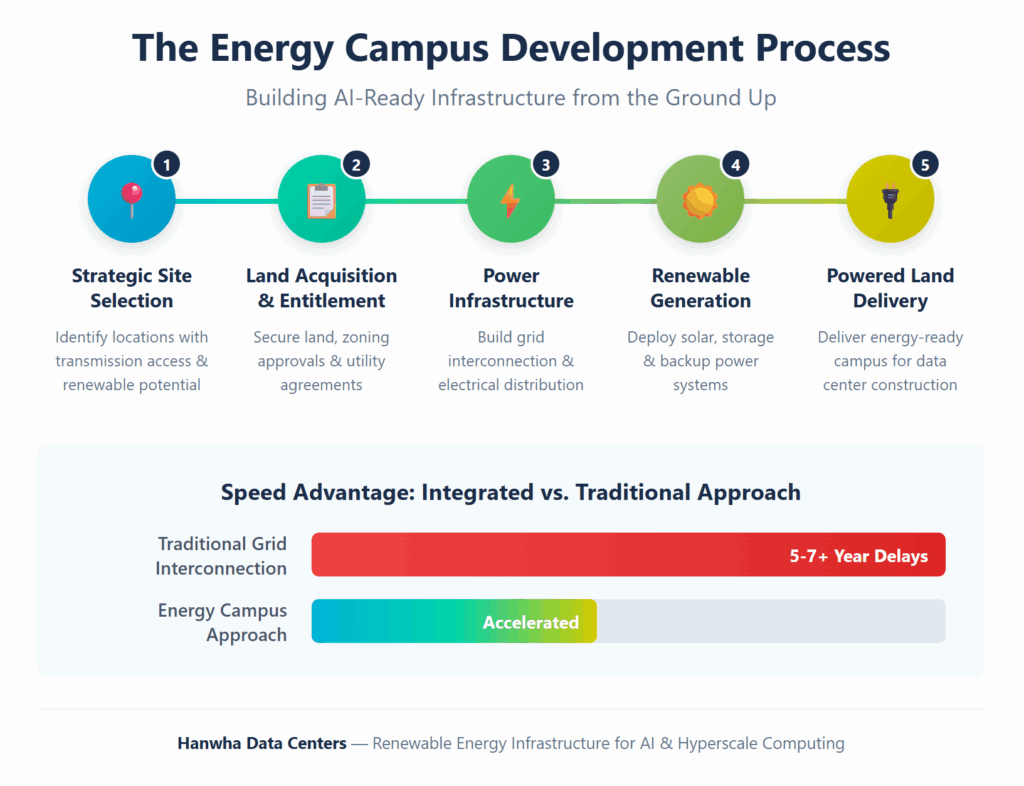

- Energy campus developments co-locating power generation with compute infrastructure represent the fastest path to bringing AI capabilities online

Organizations waiting for the grid to catch up will find themselves locked out of the AI race entirely.

The artificial intelligence boom has fundamentally reshaped how enterprises must think about digital infrastructure. While much of the conversation focuses on compute power and model capabilities, a more pressing constraint is emerging: energy. Organizations that fail to address their energy infrastructure needs now will face crippling bottlenecks as they attempt to scale AI operations through 2026 and beyond.

The numbers paint a stark picture. According to Goldman Sachs Research, global power demand from data centers will increase 50% by 2027 and as much as 165% by the end of the decade compared to 2023 levels. The International Energy Agency projects global data center electricity consumption will reach approximately 945 terawatt-hours by 2030, representing nearly 3% of total worldwide consumption. This isn’t gradual growth. It’s an infrastructure emergency unfolding in real time.
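Because the Goldman Sachs figures are cumulative increases over a 2023 baseline, they can be converted into implied annual growth rates. The sketch below reads "+165% by 2030" as 2.65 times the 2023 level and "+50% by 2027" as 1.5 times. The helper name `implied_cagr` is illustrative, not taken from any cited source.

```python
def implied_cagr(multiplier: float, years: int) -> float:
    """Compound annual growth rate implied by a total growth multiplier over `years` years."""
    return multiplier ** (1 / years) - 1

# Goldman Sachs projections quoted above, indexed to 2023 = 1.0:
# +50% by 2027 -> 1.50x, +165% by 2030 -> 2.65x
level_2023, level_2027, level_2030 = 1.0, 1.50, 2.65

cagr_2023_2027 = implied_cagr(level_2027 / level_2023, 2027 - 2023)
cagr_2027_2030 = implied_cagr(level_2030 / level_2027, 2030 - 2027)
cagr_2023_2030 = implied_cagr(level_2030 / level_2023, 2030 - 2023)

print(f"2023-2027: {cagr_2023_2027:.1%} per year")
print(f"2027-2030: {cagr_2027_2030:.1%} per year")
print(f"2023-2030: {cagr_2023_2030:.1%} per year")
```

On this reading, demand grows about 11% a year through 2027 and roughly 21% a year from 2027 to 2030. The curve steepens rather than levels off.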

Why Does AI Change Energy Infrastructure Needs?

AI workloads consume dramatically more power than traditional computing tasks. Where conventional data centers handle cyclical workloads with predictable peaks and valleys, AI operations demand continuous, maximum-capacity performance that stresses infrastructure in entirely new ways.

How Much More Power Does AI Actually Require?

Training large language models consumes hundreds of megawatt-hours of electricity. Modern AI training requires massive GPU clusters running simultaneously for weeks at a time. Unlike standard data processing, these workloads cannot be paused or shifted without significant cost and schedule impact.

The hardware tells the story clearly. According to the Congressional Research Service, modern data center-level GPUs have thermal design power ratings between 350W and 700W per chip, compared to 150W-350W for standard CPUs. When organizations deploy thousands of these processors in a single facility, power draw climbs rapidly, rising in direct proportion to the number of chips deployed.
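Facility draw scales linearly with chip count, so a rough estimate is simple arithmetic. The following sketch is hypothetical. The 1,000-GPU cluster, the 30-day run, and the `overhead_factor` parameter are illustrative assumptions. The estimate counts GPU power only, so a real facility would draw more once servers, networking, and cooling are included.

```python
def cluster_power_mw(num_gpus: int, gpu_tdp_w: float, overhead_factor: float = 1.0) -> float:
    """Estimated electrical draw of a GPU cluster in megawatts.

    overhead_factor is a hypothetical multiplier for load beyond the GPUs themselves
    (host CPUs, memory, networking, cooling); 1.0 counts GPU power only.
    """
    return num_gpus * gpu_tdp_w * overhead_factor / 1_000_000

def run_energy_mwh(power_mw: float, days: float) -> float:
    """Energy used by running continuously at power_mw for the given number of days."""
    return power_mw * days * 24

# Hypothetical cluster: 1,000 GPUs at the 700 W top of the CRS range, 30 days, GPUs only.
power = cluster_power_mw(1_000, 700)
energy = run_energy_mwh(power, 30)
print(f"{power:.2f} MW draw, {energy:.0f} MWh over 30 days")

# Draw scales linearly with chip count: ten times the GPUs, ten times the power.
print(f"{cluster_power_mw(10_000, 700):.2f} MW for 10,000 GPUs")
```

This GPU-only estimate still comes to roughly 500 MWh for a single month-long run.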

| Infrastructure Type | Typical Power Density | Facility Power Requirement |
|---|---|---|
| Traditional Data Center | 10-15 kW per rack | 30 MW facility average |
| AI-Optimized Facility | 80-150 kW per rack | 200+ MW facility average |
| Next-Gen AI Training | 150-200+ kW per rack | 500+ MW campus scale |

Legacy data centers were never designed for this kind of load. Organizations attempting to retrofit existing facilities often find themselves constrained by electrical distribution systems and utility interconnection limits that cannot be easily upgraded.
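Retrofits hit utility limits quickly, which becomes clear when you count how many racks a fixed power budget can feed at each density. This minimal sketch assumes a uniform rack density. It also treats the entire 30 MW traditional facility average from the table above as available to IT equipment. That is an optimistic simplification, since cooling and other non-IT loads also consume power.

```python
def racks_supported(facility_mw: float, kw_per_rack: float) -> int:
    """Number of racks a given power budget can feed at a uniform rack density.

    Treats the whole budget as IT load (no cooling or other overhead),
    so the result is an upper bound.
    """
    return int(facility_mw * 1_000 // kw_per_rack)

legacy_budget_mw = 30  # "traditional" facility average from the table above
for label, density_kw in [("traditional, 10 kW", 10), ("traditional, 15 kW", 15),
                          ("AI, 80 kW", 80), ("AI, 150 kW", 150)]:
    print(f"{label}: {racks_supported(legacy_budget_mw, density_kw)} racks")
```

The same 30 MW that feeds 2,000 to 3,000 traditional racks supports only 200 to 375 AI racks.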

What Makes Grid Constraints the Primary Bottleneck?

The traditional electrical grid was built for an era of gradually increasing residential and commercial demand, not the explosive growth driven by AI. Utilities across key markets are struggling to keep pace, creating bottlenecks that threaten AI expansion timelines.

In Northern Virginia, often called the data center capital of the world, new facilities face extended wait times for adequate grid connections. Some utilities require complete transmission infrastructure upgrades before supporting additional large-scale operations. Deloitte research indicates that interconnection delays can run five to seven years or longer in some markets, making traditional grid-dependent approaches increasingly unviable.

Similar constraints exist across other major data center markets. Dallas, Phoenix, Columbus, and emerging hubs elsewhere in Texas and the Southeast are all experiencing unprecedented demand that pushes local utilities to their limits. Supply chain disruptions for critical components such as transformers and switchgear add further delays, with lead times well beyond historical norms.

What Are the Core Pillars of AI-Ready Energy Infrastructure?

Building infrastructure capable of supporting AI through 2026 and beyond requires addressing multiple interconnected challenges simultaneously. Organizations cannot solve this puzzle by focusing on any single element in isolation.

Higher Power Availability and Density

The most immediate requirement is securing enough raw capacity to support dense compute loads. AI facilities require power availability measured in hundreds of megawatts, not tens. Site selection must prioritize access to substantial electrical capacity from day one, with clear pathways for scaling as operations expand.

Power density presents equally significant challenges. Traditional data centers allocate 10-15 kilowatts per rack, while AI workloads demand 80-150 kilowatts or more. Supporting that density requires a robust electrical system: medium-voltage distribution, advanced switchgear, and power distribution units designed specifically for high-density environments.
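The case for medium-voltage distribution follows from the three-phase power relation I = P / (√3 × V × PF). For a fixed load, current falls in proportion to voltage, and conductor and switchgear sizing track current. This minimal sketch assumes balanced three-phase loads at unity power factor. The 120 kW rack, the 415 V and 13.8 kV voltages, and the 100 MW facility are illustrative assumptions, not figures from this article.

```python
import math

def three_phase_current_a(power_kw: float, line_voltage_v: float, power_factor: float = 1.0) -> float:
    """Line current in amps for a balanced three-phase load: I = P / (sqrt(3) * V_LL * PF)."""
    return power_kw * 1_000 / (math.sqrt(3) * line_voltage_v * power_factor)

# Hypothetical cases: one AI rack at low voltage, and a whole facility at low vs medium voltage.
print(f"120 kW rack at 415 V:       {three_phase_current_a(120, 415):,.0f} A")
print(f"100 MW facility at 415 V:   {three_phase_current_a(100_000, 415):,.0f} A")
print(f"100 MW facility at 13.8 kV: {three_phase_current_a(100_000, 13_800):,.0f} A")
```

At 415 V, carrying 100 MW would take on the order of 139,000 A. At 13.8 kV the same load needs roughly 4,200 A, which is why power is distributed at medium voltage and stepped down close to the racks.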

Scalable Architecture for Future Growth

AI models are growing more complex with each generation. Infrastructure built for today’s requirements will likely prove inadequate within 18-24 months. Organizations need modular, scalable designs that can accommodate growth without requiring complete facility rebuilds.
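One way to reason about modular growth is to ask how many fixed-size power blocks a growing load will need each year. This is a hypothetical sketch. The 20 MW starting load, 30% annual growth rate, and 10 MW module size are illustrative assumptions, not projections from this article.

```python
import math

def modules_needed(current_mw: float, annual_growth: float, years: int, module_mw: float) -> list[int]:
    """Total fixed-size power modules required in each year from now (year 0) to `years`.

    current_mw: today's load; annual_growth: e.g. 0.30 for 30% per year (hypothetical);
    module_mw: capacity of one modular power block (hypothetical).
    """
    counts = []
    for year in range(years + 1):
        demand_mw = current_mw * (1 + annual_growth) ** year
        counts.append(math.ceil(demand_mw / module_mw))
    return counts

print(modules_needed(current_mw=20, annual_growth=0.30, years=5, module_mw=10))
```

Under these assumptions the site adds about one module a year and two in year five. A modular design can deliver that kind of phased build-out without rebuilding the facility.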

This scalability extends beyond simple capacity additions. Next-generation AI chips promise improved efficiency but may require different power delivery characteristics. Facilities must be designed with flexibility to adapt as hardware evolves, incorporating standards-based infrastructure that can accommodate emerging technologies.

Strategic Site Selection and Power Planning

Visibility into power availability and grid capacity informs optimal location decisions. The most successful AI deployments start with comprehensive site selection that evaluates transmission infrastructure access, utility relationships, renewable resource availability, and regulatory environments.

Organizations that treat site selection as simply a real estate decision miss critical factors that determine long-term success. The difference between a site with strong grid interconnection potential and one facing years of utility delays can mean the difference between market leadership and competitive obsolescence.

How Does Renewable Energy Enable Faster Deployment?

The renewable energy conversation has shifted dramatically. What was once primarily an environmental consideration has become a strategic imperative for organizations seeking reliable, scalable power for AI data centers.

Why Are Renewables Becoming a Speed Advantage?

Grid constraints make traditional utility connections increasingly unreliable as deployment pathways. Organizations that integrate renewable generation into their infrastructure strategy can bypass many of the bottlenecks plaguing conventional development approaches.

Co-locating power generation with compute infrastructure eliminates many transmission limitations. Solar arrays, wind installations, and battery storage systems can be deployed alongside data center facilities, providing dedicated capacity that doesn’t depend on utility expansion timelines. This approach cuts delivery times while reducing strain on overloaded power grids.

Major technology companies have recognized this shift. According to S&P Global, the technology sector accounted for more than 68% of tracked corporate renewable energy deals in the 12 months ending February 2024. These organizations aren’t pursuing renewables simply for sustainability compliance. They’re doing it because it’s often the fastest path to bringing new AI capacity online.

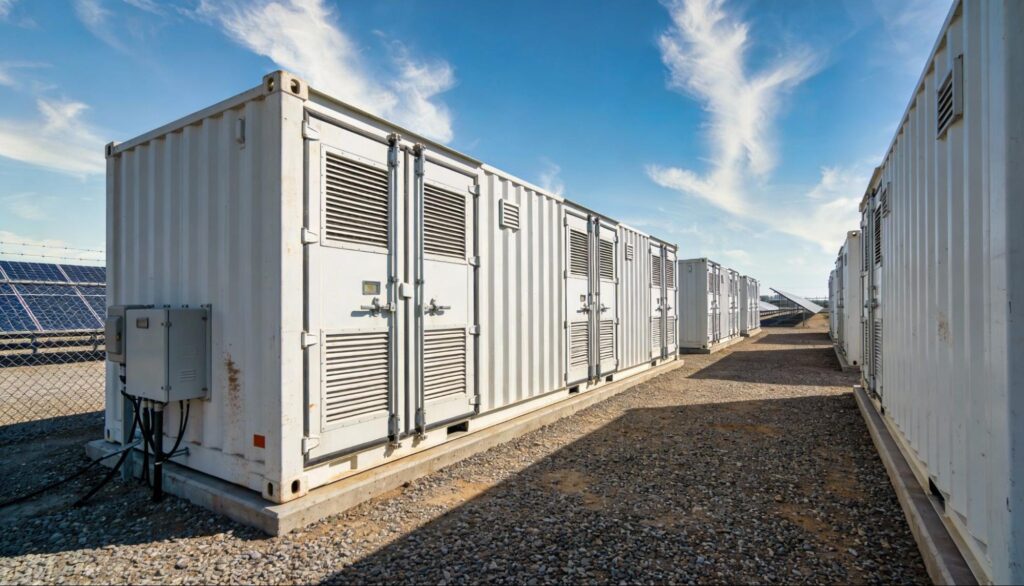

What Role Does Energy Storage Play?

Battery energy storage systems bridge the gap between intermittent renewable generation and continuous AI power demands. Modern lithium-ion installations store excess solar energy during peak production hours and discharge it in the evening or during periods of low generation.
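It is easy to underestimate how much storage a continuous AI load needs to get through the night. This sketch is deliberately idealized. It assumes solar delivers a flat block of output for a fixed number of hours per day and zero otherwise. It also assumes a constant facility load and no grid or backup generation. The 100 MW load, 10 solar hours, and 85% round-trip efficiency are hypothetical inputs.

```python
from dataclasses import dataclass

@dataclass
class SolarStorageSizing:
    solar_output_mw: float  # array output needed during the idealized daylight block
    battery_mwh: float      # usable storage energy needed to cover the dark hours
    battery_mw: float       # discharge power the battery must sustain

def size_solar_plus_storage(load_mw: float, solar_hours: float,
                            round_trip_efficiency: float) -> SolarStorageSizing:
    """Idealized daily energy balance for a constant load served only by solar plus batteries.

    Hypothetically assumes solar produces a flat block of power for `solar_hours` each day
    and nothing otherwise; ignores weather, seasons, and equipment degradation.
    """
    dark_hours = 24 - solar_hours
    battery_mwh = load_mw * dark_hours                     # energy the battery must deliver
    charge_mwh = battery_mwh / round_trip_efficiency       # energy needed to charge it
    solar_output_mw = load_mw + charge_mwh / solar_hours   # serve the load and charge the battery
    return SolarStorageSizing(solar_output_mw, battery_mwh, load_mw)

sizing = size_solar_plus_storage(load_mw=100, solar_hours=10, round_trip_efficiency=0.85)
print(f"solar output: {sizing.solar_output_mw:.0f} MW, "
      f"battery: {sizing.battery_mwh:.0f} MWh at {sizing.battery_mw:.0f} MW")
```

Even under these generous assumptions, a 100 MW load needs about 1,400 MWh of usable storage and roughly 265 MW of daytime solar output. That is before accounting for cloudy days or seasonal variation, and it helps explain the interest in hybrid wind-plus-solar generation and longer-duration storage.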

Beyond simple backup, storage systems provide grid services such as frequency regulation and voltage support. These capabilities create additional revenue streams while supporting renewable integration. Some organizations are exploring hydrogen storage as a longer-duration backup option that can support extended operations without carbon emissions.

| Energy Integration Strategy | Key Benefit | Deployment Consideration |
|---|---|---|
| On-site Solar Generation | Direct power during daylight hours | Requires substantial land footprint |
| Battery Storage Systems | Extends renewable availability | Scales with capacity needs |
| Hybrid Wind + Solar | Complementary generation profiles | Reduces intermittency concerns |
| Green Hydrogen | Long-duration backup | Emerging technology, higher cost |

Five Essential Elements for AI-Ready Infrastructure

Organizations preparing for 2026 AI requirements should prioritize these foundational elements:

- Strategic site selection prioritizing power access – Location decisions must start with power availability rather than treating it as an afterthought. Sites with proximity to existing transmission infrastructure, favorable regulatory environments, and access to renewable resources provide the fastest deployment pathways.

- Integrated power generation capabilities – Facilities that combine compute infrastructure with dedicated power generation avoid the interconnection delays plaguing conventional developments. This integration requires partnerships with energy campus developers rather than traditional utility relationships.

- Robust electrical distribution designed for AI workloads – Power distribution systems must accommodate the high-density requirements of AI computing. Medium-voltage distribution, advanced switchgear, and intelligent load management create the foundation for reliable operations.

- Flexible infrastructure accommodating hardware evolution – Power delivery systems should anticipate increasing density requirements as AI chips evolve. Modular designs and standards-based infrastructure create adaptability for future demands.

- Comprehensive utility and transmission planning – Understanding grid capacity constraints, interconnection timelines, and utility relationships informs realistic deployment schedules and identifies potential bottlenecks before they become project-stopping obstacles.

What Does Resilient Infrastructure Design Look Like?

Building for resilience means anticipating failure scenarios and designing systems that maintain operations despite disruptions. AI workloads cannot tolerate interruptions, making resilience planning essential for any serious deployment.

Redundancy Beyond Traditional Approaches

Traditional N+1 redundancy may prove insufficient for AI operations, where even momentary power fluctuations can interrupt or corrupt training runs. Leading facilities implement 2N or greater redundancy for critical systems, with automatic failover capabilities that maintain operations seamlessly during any single-point failure.
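Equipment counts make the difference between N+1 and 2N easy to see. This minimal sketch assumes identical units and a hypothetical 20 MW critical load served by 2.5 MW units, such as generators or UPS modules.

```python
import math

def units_required(load_mw: float, unit_mw: float, scheme: str) -> int:
    """Installed units of a given size under common redundancy schemes.

    N   : just enough units to carry the load
    N+1 : one spare unit beyond N
    2N  : two fully independent systems, each able to carry the full load
    """
    n = math.ceil(load_mw / unit_mw)
    if scheme == "N":
        return n
    if scheme == "N+1":
        return n + 1
    if scheme == "2N":
        return 2 * n
    raise ValueError(f"unknown redundancy scheme: {scheme}")

# Hypothetical: 20 MW critical load, 2.5 MW units.
for scheme in ("N", "N+1", "2N"):
    print(scheme, units_required(20, 2.5, scheme))
```

N+1 adds a single spare (9 units here), while 2N duplicates the whole system (16 units). 2N therefore roughly doubles the equipment, and in exchange the facility can lose an entire power path rather than only one unit.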

Microgrid capabilities allow facilities to operate independently from the main electrical grid during emergencies or maintenance periods. These systems combine on-site generation, energy storage, and intelligent control systems to create self-sufficient power networks. During normal operations, microgrids provide grid services while reducing dependence on utility power.
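A microgrid's intelligent control system ultimately decides, interval by interval, which source serves the load. Below is a simplified sketch of one islanded dispatch step. It assumes a hypothetical fixed priority order (solar, then battery, then on-site generation). It ignores charging losses, full-battery limits, and ramp rates, all of which a real controller would enforce.

```python
from dataclasses import dataclass

@dataclass
class Dispatch:
    solar_mw: float      # solar output serving the load
    battery_mw: float    # positive = discharging to the load, negative = charging
    generator_mw: float  # on-site generation (e.g., gas turbines or fuel cells)
    unserved_mw: float   # load the microgrid could not cover

def dispatch_islanded(load_mw: float, solar_mw: float, battery_energy_mwh: float,
                      battery_max_mw: float, generator_max_mw: float,
                      interval_h: float = 1.0) -> Dispatch:
    """One interval of a fixed-priority dispatch for a microgrid running islanded.

    Hypothetical priority order: solar, then battery, then on-site generator.
    Surplus solar charges the battery; charging losses and full-battery limits are ignored.
    """
    solar_used = min(solar_mw, load_mw)
    remaining = load_mw - solar_used
    if remaining <= 0:
        surplus = solar_mw - solar_used
        return Dispatch(solar_used, -min(surplus, battery_max_mw), 0.0, 0.0)
    # The battery can deliver no more than its power rating or its stored energy allows.
    battery = min(remaining, battery_max_mw, battery_energy_mwh / interval_h)
    remaining -= battery
    generator = min(remaining, generator_max_mw)
    remaining -= generator
    return Dispatch(solar_used, battery, generator, remaining)

# Hypothetical evening interval: 50 MW load, 20 MW solar, 60 MWh stored.
print(dispatch_islanded(50, 20, 60, battery_max_mw=25, generator_max_mw=10))
```

A nonzero `unserved_mw` means the microgrid cannot carry the full load on its own, and redundancy planning exists to prevent exactly that condition.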

Geographic Distribution Strategies

Distributing AI infrastructure across multiple locations provides resilience against regional grid issues, natural disasters, and capacity constraints. Organizations are increasingly deploying workloads across geographically diverse facilities, optimizing placement based on power availability and renewable energy access.
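Placing workloads based on power availability and renewable energy access can be framed as a simple assignment problem. The greedy sketch below is hypothetical. Site names, capacities, renewable shares, and workload sizes are invented for illustration. A production scheduler would also weigh latency, cost, and data residency.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Site:
    name: str
    spare_mw: float         # uncommitted power capacity at the site
    renewable_share: float  # fraction of supply from renewables, 0.0 to 1.0

def place_workloads(workloads_mw: dict[str, float], sites: list[Site]) -> dict[str, Optional[str]]:
    """Greedy placement: largest workload first, each to the most renewable site with room.

    Returns a mapping of workload name to site name, or None if no site has capacity.
    """
    remaining = {s.name: s.spare_mw for s in sites}
    by_renewables = sorted(sites, key=lambda s: s.renewable_share, reverse=True)
    placement: dict[str, Optional[str]] = {}
    for job, mw in sorted(workloads_mw.items(), key=lambda kv: kv[1], reverse=True):
        target = next((s.name for s in by_renewables if remaining[s.name] >= mw), None)
        if target is not None:
            remaining[target] -= mw
        placement[job] = target
    return placement

# Hypothetical sites and workloads (MW), for illustration only.
sites = [Site("site-a", 40, 0.9), Site("site-b", 100, 0.5), Site("site-c", 30, 0.7)]
jobs = {"training": 60, "inference": 25, "batch": 20}
print(place_workloads(jobs, sites))
```

Here the largest job lands on the site with the most headroom, even though that site has the lowest renewable share. Raw power availability can override other placement preferences.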

Edge deployment strategies position AI computing closer to end users while distributing power requirements across multiple smaller facilities. Edge installations typically operate at 1-10 MW scale, making them easier to power using local resources compared to hyperscale facilities requiring 100+ MW.

Frequently Asked Questions

How much power does a typical AI data center require compared to traditional facilities?

Traditional enterprise data centers typically operate with total facility power in the 30-50 MW range, while AI-optimized facilities commonly require 200+ MW. Individual rack power densities illustrate the difference even more dramatically: conventional servers require 10-15 kW per rack, while AI GPU clusters demand 80-150 kW or more per rack.

What causes the extended delays in grid interconnection for new data centers?

Grid interconnection delays stem from multiple factors including overwhelmed utility capacity, supply chain constraints for transformers and switchgear, lengthy permitting and environmental review processes, and the need for transmission infrastructure upgrades. In high-demand markets, these factors combine to create delays stretching five to seven years for new connections.

Can existing data centers be upgraded to support AI workloads?

Many existing facilities face fundamental limitations in electrical distribution systems and structural design that make significant AI upgrades impractical. While some retrofitting is possible, organizations often find that purpose-built AI infrastructure delivers better performance, lower operating costs, and faster deployment compared to extensive renovation of legacy facilities.

Building for 2026 and Beyond

The organizations that succeed in AI will be those that treat energy infrastructure as a strategic priority rather than an operational afterthought. Designing for 2026 requirements means building with substantially more capacity and flexibility than current workloads demand.

Planning horizons must extend beyond immediate needs. Facilities designed today should anticipate the AI landscape three to five years out, incorporating headroom for growth that would otherwise require costly retrofits or relocations. The cost of over-provisioning infrastructure pales in comparison with the opportunity cost of being unable to scale when competitive pressures demand it.

The competitive dynamics are clear. Organizations that secure reliable, scalable power infrastructure now will capture advantages that compound over time. Those that delay risk being permanently disadvantaged as available power capacity becomes increasingly scarce and expensive in key markets.

For enterprises serious about AI success, the path forward runs through comprehensive energy infrastructure planning. Hanwha Data Centers specializes in developing energy campuses that integrate renewable generation, strategic site selection, and scalable power infrastructure from the ground up. Contact our team to explore how purpose-built energy infrastructure can accelerate your AI roadmap.