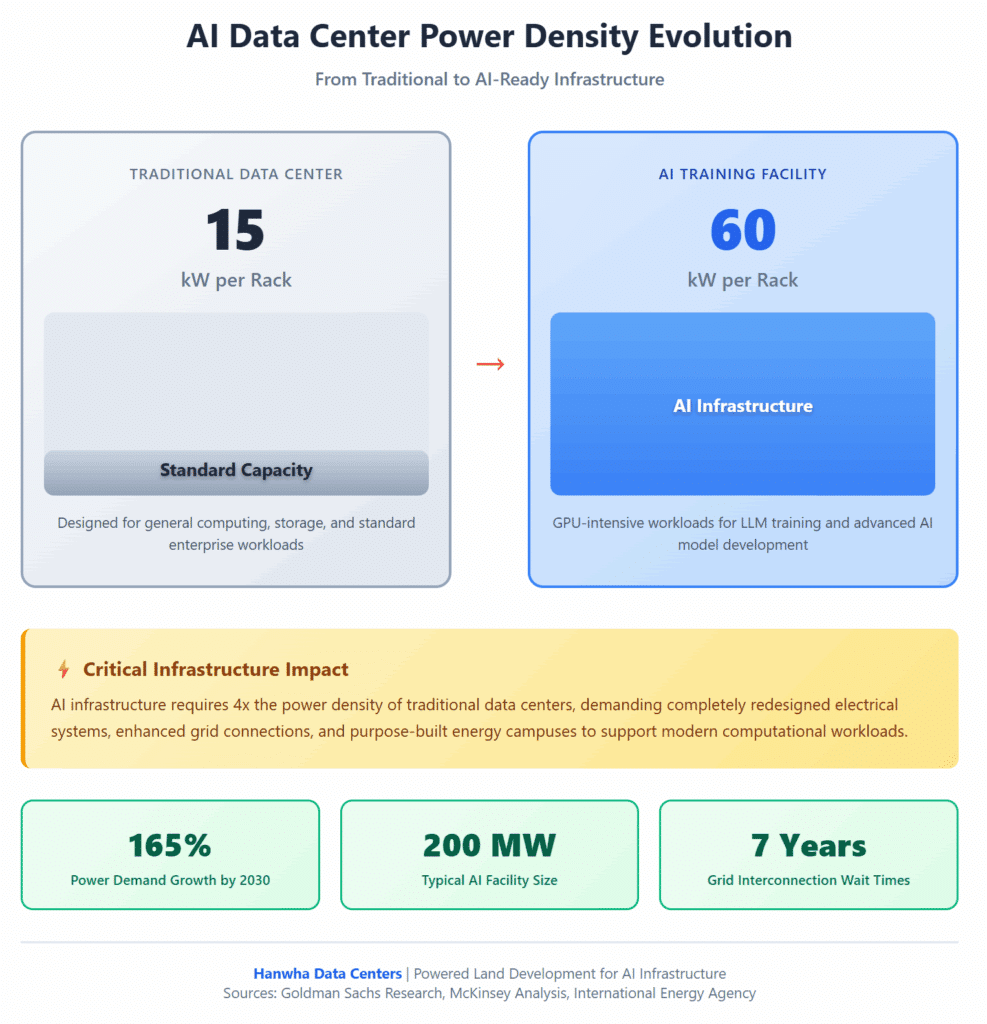

The explosive growth of artificial intelligence demands a fundamental rethinking of data center infrastructure. Global power demand from data centers is projected to increase by as much as 165% by 2030 compared with 2023, driven almost entirely by AI workloads. Traditional facilities designed for 6-15kW per rack simply cannot handle the 20-50kW+ power densities that modern AI data center infrastructure requires. The question isn’t whether your organization needs to prepare for AI workloads. It’s whether your energy infrastructure can actually support them.

Key Takeaways

AI data center infrastructure requires fundamentally different power planning than traditional computing environments.

- Power availability has become the primary bottleneck to AI deployment, with interconnection wait times reaching seven years in major markets like Northern Virginia

- Energy density requirements have tripled from traditional data center standards, demanding robust electrical infrastructure and grid connections

- Strategic site selection based on power access determines project viability more than proximity to fiber or traditional location factors

- Renewable energy integration and grid modernization are critical for long-term sustainability and cost predictability

The winners in AI infrastructure will be enterprises that secure reliable power sources now, not those waiting for traditional grid upgrades that may take a decade.

What Makes AI Data Center Infrastructure Different from Traditional Facilities?

AI workloads fundamentally change infrastructure requirements in ways that catch most organizations off guard. Ten years ago, a 30-MW facility was considered large; today, a 200-MW facility is considered normal. This dramatic shift stems from the computational demands of training and running large language models.

Traditional data centers were built around central processing units with predictable power consumption patterns. Modern AI data center infrastructure centers on graphics processing units that draw substantially more power per rack while generating significantly more heat.

The shift from traditional computing to AI workloads represents the most significant infrastructure challenge the data center industry has faced in decades, requiring fundamental rethinking of energy delivery systems.

Why Traditional Infrastructure Planning Falls Short

Most enterprise infrastructure planning assumes steady, predictable growth curves. AI adoption follows a different pattern entirely. Companies launching AI initiatives often discover their power requirements will double or triple within 18 months, forcing rapid reassessment of existing capacity.
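To make the "double or triple within 18 months" pattern concrete, here is a minimal sketch of the compound monthly growth rate it implies. It assumes smooth compound growth, which real deployments rarely follow, and the function names are illustrative rather than part of any planning tool.

```python
def monthly_growth_rate(multiple: float, months: int) -> float:
    """Compound monthly growth rate implied by reaching `multiple`x in `months`."""
    return multiple ** (1.0 / months) - 1.0


def projected_load_mw(initial_mw: float, multiple: float,
                      months: int, at_month: int) -> float:
    """Load at `at_month`, assuming smooth compound growth (a simplification)."""
    rate = monthly_growth_rate(multiple, months)
    return initial_mw * (1.0 + rate) ** at_month


if __name__ == "__main__":
    # Doubling and tripling over 18 months, the range quoted in the text.
    for multiple in (2.0, 3.0):
        rate = monthly_growth_rate(multiple, 18)
        print(f"{multiple:.0f}x in 18 months -> {rate:.1%} per month")
```

Under these assumptions, doubling in 18 months works out to roughly 4% growth per month and tripling to roughly 6%, far from the single-digit annual growth most capacity plans assume.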

The physical infrastructure supporting AI operations requires different thinking about everything from site selection to energy sourcing. Organizations that treat AI as simply “more computing” will find themselves constrained by decisions made years ago when power availability seemed abundant.

How Do Power Requirements Impact AI Infrastructure Planning?

Power availability has emerged as the single most critical factor in AI data center development. The challenge goes far beyond simply having enough electricity on paper—it involves securing reliable grid connections, navigating complex utility relationships, and planning for power delivery that may take years to materialize.

ERCOT saw a 700% spike in large load requests from 2023 to 2024, overwhelming utility planning systems designed for gradual growth. This bottleneck affects every region pursuing AI leadership.

Understanding Real Power Density Needs

AI infrastructure demands power density levels that would have seemed excessive just five years ago. Training a single large language model can require power consumption equivalent to a small town’s electrical needs for weeks or months.

| Infrastructure Type | Power per Rack | Typical Facility Size | Primary Use Case |
| --- | --- | --- | --- |
| Traditional Data Center | 6-15 kW | 10-50 MW | General computing, storage |
| High-Performance Computing | 20-35 kW | 50-100 MW | Scientific computing, analytics |
| AI Training Facility | 40-60 kW | 100-300 MW | LLM training, model development |
| AI Inference Operations | 25-40 kW | 50-200 MW | Production AI applications |

Enterprise AI readiness begins with honest assessment of current power infrastructure capabilities. Most existing facilities lack the electrical distribution systems needed to support modern AI workloads without major retrofits.
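A rough way to start that assessment is to ask how many racks an existing power budget can feed at AI densities. The sketch below is illustrative only: it treats the budget as IT load, ignores cooling and distribution overhead, and uses hypothetical example figures chosen from within the ranges in the table.

```python
def racks_supported(it_capacity_mw: float, kw_per_rack: float) -> int:
    """Whole racks an IT power budget can feed at a given per-rack density."""
    return int(it_capacity_mw * 1000 // kw_per_rack)


def required_capacity_mw(racks: int, kw_per_rack: float) -> float:
    """IT power needed to run `racks` racks at `kw_per_rack` each."""
    return racks * kw_per_rack / 1000


if __name__ == "__main__":
    # Hypothetical example: a 30 MW budget at traditional vs. AI training density.
    print(racks_supported(30, 10))         # racks at 10 kW each
    print(racks_supported(30, 50))         # racks at 50 kW each
    print(required_capacity_mw(3000, 50))  # MW needed to keep the same rack count
```

With these example numbers, the same 30 MW that feeds 3,000 traditional racks feeds only 600 racks at 50 kW, and keeping all 3,000 racks at that density would take 150 MW.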

Grid Interconnection Challenges You Must Navigate

The path from planning an AI facility to actually receiving power has become significantly more complex. The International Energy Agency finds that approximately 20% of planned data center projects risk significant delays, with interconnection wait times as long as a decade in key regions.

Northern Virginia, the world’s largest data center market, exemplifies these challenges. The region already dedicates 25% of its electricity supply to data centers, yet demand continues accelerating. Organizations entering interconnection queues today face average wait times of seven years.

Understanding the interconnection process requires engaging with utilities early and preparing for lengthy approval cycles. The technical complexity of connecting high-load facilities means even straightforward projects face scrutiny that can extend timelines by years.

What Site Selection Factors Matter Most for AI Infrastructure?

Traditional data center site selection prioritized factors like fiber connectivity, real estate costs, and proximity to user populations. AI infrastructure inverts these priorities entirely. Power availability determines site viability before any other consideration matters.

Organizations pursuing AI-ready infrastructure development must evaluate regions based on electricity generation capacity, transmission infrastructure, and utility relationships rather than conventional location factors.

Evaluating Regional Power Infrastructure

Data centers dedicated to training AI models are being built in more remote locations in the United States, such as Indiana, Iowa, and Wyoming, where power is still abundant and grids are less strained. This geographic shift reflects fundamental constraints in traditional data center markets.

Regions with robust power generation and transmission capacity offer significant advantages:

- Texas provides direct access to both renewable generation and natural gas infrastructure through deregulated energy markets. The state’s grid operator has established clear interconnection processes specifically for large load applications.

- The Southeast combines available generation capacity with utilities experienced in serving industrial loads. Coal plant retirements in the region are creating opportunities for data center development on sites with existing transmission infrastructure.

- The Pacific Northwest offers abundant hydroelectric power and a history of serving energy-intensive industries. However, environmental regulations and permitting requirements can extend development timelines.

Why Proximity to Generation Matters

The distance between power generation and consumption directly impacts project economics and feasibility. Transmission constraints have created situations where abundant generation capacity exists but cannot reach load centers due to infrastructure bottlenecks.

Some forward-thinking organizations are pursuing strategies that bypass these constraints entirely. Direct connection to power generation eliminates transmission dependency and accelerates project timelines.

Similarly, sites with direct access to natural gas pipelines can support on-site generation that avoids grid interconnection queues altogether. This strategy requires different expertise but offers substantially faster paths to operation.

How Should You Approach Renewable Energy Integration for AI Workloads?

Sustainability commitments and economic pragmatism are converging around renewable energy for AI data center infrastructure. Organizations are increasingly pursuing renewable energy partnerships that provide cost predictability alongside environmental benefits.

The challenge lies in matching renewable generation profiles with the constant power demands of AI operations. Unlike traditional workloads that can shift timing based on electricity availability, AI training runs require uninterrupted power for extended periods.

Designing Hybrid Energy Solutions

Effective renewable energy strategies for AI data centers combine multiple generation sources with robust grid connections. Solar generation provides daytime capacity at increasingly competitive costs, while energy storage systems bridge gaps during peak demand periods.

Natural gas generation serves as both baseload power and backup capacity, ensuring AI operations never face power interruptions that could waste weeks of computational work. This hybrid approach balances sustainability goals with operational reliability.

Green hydrogen technology is emerging as another component of comprehensive energy solutions. Using renewable electricity to produce hydrogen fuel creates energy storage that can power generation during extended periods of low renewable output.
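The interplay between these sources can be sketched as a simple hourly dispatch: solar serves the load first, surplus solar charges the battery, the battery covers shortfalls, and gas fills whatever remains. This is a simplified illustration, not a description of any real control system. It assumes one-hour steps, a constant load, and no battery losses; it treats gas purely as the gap-filler rather than as baseload; and all names and numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class HourResult:
    solar_mw: float    # solar output used to serve the load
    battery_mw: float  # positive = discharging, negative = charging
    gas_mw: float      # gas generation needed to close the gap


def dispatch(load_mw: float, solar_profile_mw: List[float],
             battery_mwh: float, battery_max_mw: float,
             initial_soc_mwh: float = 0.0) -> List[HourResult]:
    """Dispatch solar, then battery, then gas against a constant load.

    One-hour steps, so MW and MWh are numerically equal within a step.
    Round-trip battery losses are ignored (an assumption).
    """
    soc = initial_soc_mwh
    results = []
    for solar in solar_profile_mw:
        used_solar = min(solar, load_mw)
        surplus = solar - used_solar
        shortfall = load_mw - used_solar
        # Charge from surplus, limited by power rating and remaining room.
        charge = min(surplus, battery_max_mw, battery_mwh - soc)
        soc += charge
        # Discharge into shortfall, limited by power rating and stored energy.
        discharge = min(shortfall, battery_max_mw, soc)
        soc -= discharge
        results.append(HourResult(used_solar, discharge - charge,
                                  shortfall - discharge))
    return results


if __name__ == "__main__":
    # Hypothetical 3-hour profile: night, midday surplus, partial evening sun.
    for hour in dispatch(100.0, [0.0, 150.0, 50.0], 40.0, 30.0):
        print(hour)
```

Even in this toy profile, gas carries the full load whenever there is no sun and the battery is empty, which is why firm generation stays in the design.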

Securing Long-Term Power Purchase Agreements

Direct power purchase agreements with renewable energy developers provide price stability that traditional utility rates cannot match. These contracts lock in electricity costs for 10-20 years, protecting AI infrastructure investments from energy market volatility.

Structuring effective PPAs requires understanding both energy markets and AI operational requirements. The agreements must account for minimum power draws, growth projections, and geographic constraints while providing developers with bankable revenue streams that support project financing.
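To see why price stability matters at this scale, it helps to put numbers on the exposure. The following is a minimal sketch comparing a fixed PPA price against a sequence of yearly market prices for a constant load. All prices are hypothetical placeholders, and a real contract would also cover shape, delivery point, and shortfall terms.

```python
from typing import List, Tuple

HOURS_PER_YEAR = 8760


def annual_energy_mwh(avg_load_mw: float, load_factor: float = 1.0) -> float:
    """Energy consumed in one year at a given average load and load factor."""
    return avg_load_mw * HOURS_PER_YEAR * load_factor


def ppa_vs_market(avg_load_mw: float, ppa_price: float,
                  market_prices_by_year: List[float],
                  load_factor: float = 1.0) -> Tuple[float, float]:
    """Return (ppa_total, market_total) in dollars over the years supplied.

    Prices are in $/MWh. The market prices are illustrative inputs, not forecasts.
    """
    energy = annual_energy_mwh(avg_load_mw, load_factor)
    ppa_total = energy * ppa_price * len(market_prices_by_year)
    market_total = sum(energy * price for price in market_prices_by_year)
    return ppa_total, market_total


if __name__ == "__main__":
    # Hypothetical: 100 MW constant load, $50/MWh PPA, rising market prices.
    ppa, market = ppa_vs_market(100.0, 50.0, [40.0, 60.0, 80.0])
    print(f"PPA: ${ppa:,.0f}  market: ${market:,.0f}")
```

A 100 MW load running around the clock consumes 876,000 MWh a year, so every $1/MWh of price movement shifts annual cost by $876,000.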

What Role Does Energy Campus Development Play in AI Infrastructure?

Energy campus development represents a fundamental shift in how organizations approach AI data center infrastructure. Rather than adapting existing facilities or competing for scarce urban power capacity, forward-thinking enterprises are creating purpose-built environments where energy infrastructure drives site design. This approach prioritizes power generation and transmission capabilities first, then builds computational facilities around available energy resources—the inverse of traditional development models.

Development starts with land acquisition in regions where power generation capacity exists or can be developed efficiently. Sites with direct access to transmission infrastructure or natural gas pipelines offer immediate advantages over locations requiring extensive utility upgrades.

Understanding Powered Land Development

Powered land development transforms raw property into energy-rich sites capable of supporting massive computational loads. The process involves securing not just real estate but also the electrical infrastructure, utility agreements, and regulatory approvals necessary to deliver gigawatt-scale power.

Successful projects coordinate multiple workstreams simultaneously:

- Site Entitlement ensures zoning accommodates data center development and addresses local concerns about environmental impact, traffic, and community benefits.

- Utility Coordination establishes relationships with transmission operators and generation developers, aligning project timelines with infrastructure availability.

- Transmission Access secures grid interconnection points capable of delivering required power loads without extensive upgrades that could delay projects by years.

- Generation Development creates on-site or nearby power generation tied directly to data center operations, reducing transmission dependency.

Planning for Scalable Growth

AI infrastructure needs evolve rapidly as organizations refine their computational requirements. Energy campuses designed for scalability can accommodate growth without requiring complete redesigns or triggering new interconnection processes.

This flexibility requires anticipating future power needs that may seem excessive by current standards. Organizations that secure interconnection capacity for 500 MW today position themselves to scale operations as AI workloads grow, while those pursuing incremental approaches face returning to interconnection queues that have only lengthened.
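One way to judge whether a capacity reservation is "excessive" is to estimate how long it lasts under sustained growth. The sketch below assumes load doubles on a fixed interval, which is a simplifying assumption; the starting load in the example is hypothetical.

```python
import math


def months_until_capacity(current_mw: float, secured_mw: float,
                          doubling_months: float) -> float:
    """Months until load reaches secured capacity, assuming steady doubling."""
    if current_mw >= secured_mw:
        return 0.0
    return doubling_months * math.log2(secured_mw / current_mw)


if __name__ == "__main__":
    # Hypothetical: 125 MW of load today, 500 MW secured, doubling every 18 months.
    print(months_until_capacity(125.0, 500.0, 18.0))
```

Under these assumptions, a 500 MW reservation that looks like four times current need is fully used in about three years, which is shorter than the interconnection waits described earlier for constrained markets.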

How Do Grid Modernization Requirements Affect Your Planning?

Goldman Sachs Research estimates that about $720 billion of grid spending through 2030 may be needed to support projected data center growth. This massive infrastructure investment creates both challenges and opportunities for organizations planning AI facilities.

Traditional grid infrastructure was designed for predictable load growth measured in single-digit percentages annually. AI-driven demand is growing at multiples of historical rates, overwhelming planning assumptions and forcing utilities to fundamentally rethink capacity expansion.

Navigating Utility Planning Cycles

Utility planning operates on timelines that seem glacial compared to technology development. Integrated resource plans typically look 10-20 years forward, updating every few years to incorporate changing demand projections. This cadence worked adequately when load growth followed predictable patterns but creates serious mismatches with AI infrastructure needs.

Organizations cannot wait for utility planning cycles to accommodate AI infrastructure requirements. Proactive engagement with utilities, offering to fund infrastructure upgrades or providing guaranteed capacity commitments, can accelerate processes that would otherwise take years.

Exploring Alternative Grid Connection Strategies

When traditional interconnection paths prove too slow, alternative approaches merit consideration. Organizations are exploring on-site power generation to boost energy efficiency or resilience, with substantial interest in behind-the-meter power solutions.

Behind-the-meter generation using natural gas, hydrogen fuel cells, or hybrid renewable systems can provide immediate capacity while utility interconnections progress through approval processes. This strategy requires different expertise but offers substantially compressed timelines.

Microgrids represent another approach, particularly for organizations that can secure sites near existing power plants. Direct connection to generation sources eliminates transmission constraints while providing exceptional reliability for operations that cannot tolerate power interruptions.

What Infrastructure Components Require Special Attention?

Beyond the headline power requirements, several specific infrastructure elements require careful planning for AI workloads. Each represents a potential bottleneck that could constrain operations or require expensive retrofits if not addressed during initial design.

Electrical Distribution Systems

Power delivery within facilities must match the density requirements of AI infrastructure. Traditional data center electrical distribution designed for 6-15kW per rack cannot simply be upgraded to support 40-60kW loads. The physical infrastructure, from transformers to bus bars to circuit breakers, requires complete replacement.

Distribution voltage selection impacts both efficiency and flexibility. Higher voltage systems reduce transmission losses across longer distances within facilities but require more specialized equipment and training for operations staff.
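The efficiency argument for higher voltage follows from resistive loss scaling with the square of current. Here is a minimal sketch for a balanced three-phase feeder. The load, voltages, and conductor resistance are hypothetical, and the comparison assumes the same conductor is used at both voltages.

```python
import math


def feeder_loss_kw(load_kw: float, line_voltage_v: float,
                   resistance_ohm_per_phase: float,
                   power_factor: float = 1.0) -> float:
    """Resistive (I^2 * R) loss in a balanced three-phase feeder, in kW."""
    # Line current for a three-phase load: I = P / (sqrt(3) * V_LL * pf)
    current_a = load_kw * 1000 / (math.sqrt(3) * line_voltage_v * power_factor)
    # Loss is I^2 * R in each of the three phase conductors.
    return 3 * current_a ** 2 * resistance_ohm_per_phase / 1000


if __name__ == "__main__":
    # Hypothetical feeder: 500 kW load, 0.01 ohm per phase conductor.
    for volts in (208.0, 416.0):
        print(f"{volts:.0f} V -> {feeder_loss_kw(500.0, volts, 0.01):.2f} kW lost")
```

Doubling the distribution voltage halves the current for the same load, so the loss in the same conductor falls to one quarter.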

Redundancy requirements for AI infrastructure often exceed traditional approaches. Training runs that represent weeks or months of computational investment cannot accept even brief power interruptions, driving requirements for truly fault-tolerant electrical systems.

Backup Power and Resilience Planning

AI workloads change the economics of backup power systems. Traditional data centers designed backup generators to support critical operations for hours during utility outages. AI facilities may require backup systems capable of sustaining full operational loads for extended periods.

This shift drives interest in backup systems that can also serve as primary generation under certain conditions. Natural gas generators sized to provide full facility power can operate continuously when utility power is unavailable or economically unattractive, fundamentally changing infrastructure design.

Battery storage systems provide another layer of resilience while enabling participation in energy markets. Large-scale battery installations can absorb brief power quality issues, provide frequency regulation services that generate revenue, and bridge gaps until backup generators reach full capacity.
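Sizing the battery for the bridging role is simple arithmetic: the energy needed is the load multiplied by the time until generators carry it. The sketch below illustrates that calculation. The usable fraction of nameplate capacity and the example figures are assumptions, not vendor data.

```python
def bridge_energy_mwh(load_mw: float, bridge_minutes: float,
                      usable_fraction: float = 0.8) -> float:
    """Nameplate battery energy needed to carry `load_mw` for `bridge_minutes`.

    `usable_fraction` is the share of nameplate capacity assumed available
    for discharge (a hypothetical default, not a manufacturer figure).
    """
    if not 0 < usable_fraction <= 1:
        raise ValueError("usable_fraction must be in (0, 1]")
    return load_mw * (bridge_minutes / 60.0) / usable_fraction


if __name__ == "__main__":
    # Hypothetical: 100 MW facility, 6 minutes until generators reach full load.
    print(bridge_energy_mwh(100.0, 6.0))
```

Note that a bridging battery must deliver the full facility load instantly, so its power rating, not just its energy capacity, has to match the load.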

How Should Enterprises Evaluate Energy Infrastructure Partners?

Selecting the right energy infrastructure partners determines whether projects succeed or stall in interconnection queues for years. The evaluation process requires assessing capabilities far beyond traditional data center development expertise.

Critical Capabilities to Assess

Energy infrastructure development for AI workloads demands specialized expertise across multiple domains. Few organizations possess all necessary capabilities in-house, making partner selection critical to project success.

- Land Development Experience in energy-intensive industries provides insights into site selection, utility relationships, and regulatory navigation that pure data center developers may lack.

- Renewable Energy Integration capabilities ensure projects can meet sustainability commitments while maintaining operational reliability. This requires understanding generation technologies, energy storage systems, and grid interconnection processes.

- Grid Interconnection Expertise in navigating complex utility approval processes is perhaps the most valuable capability. Partners with established utility relationships and an understanding of regional grid operators can accelerate timelines by years.

- Financial Strength to fund infrastructure development without requiring customer deposits or milestone payments demonstrates commitment and reduces project risk.

Understanding Development Timelines

Realistic timeline expectations prevent costly delays and missed market opportunities. Energy infrastructure development operates on schedules that often surprise technology executives accustomed to rapid cloud deployments.

Understanding these timelines enables realistic planning and helps organizations sequence AI infrastructure investments appropriately. Projects that attempt to compress timelines by skipping steps or rushing approvals almost invariably encounter delays that exceed the time saved.

What Financial Models Work for AI Infrastructure Development?

AI infrastructure requires different financial approaches than traditional data center investments. The capital intensity of energy campus development and long lead times between initial investment and operational status demand carefully structured financial models.

Evaluating Lease vs. Purchase Structures

Organizations face fundamental decisions about infrastructure ownership versus long-term leasing arrangements. Each approach carries distinct advantages depending on organizational priorities and financial constraints.

Long-term power and land leases spanning 10-25 years preserve capital for technology investments while providing operational predictability. These structures shift infrastructure development risk to specialized partners while ensuring access to required capacity as AI workloads scale.

Direct ownership provides maximum flexibility and potential cost advantages over extended periods. However, it requires substantial upfront capital and diverts management attention to infrastructure operations rather than core technology development.

Hybrid models combining elements of both approaches often provide optimal solutions. Organizations might lease land and basic infrastructure while directly funding generation capacity they can depreciate and potentially monetize through grid services or excess capacity sales.
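A first-pass comparison of these structures can be framed as present value of cost over the commitment period. The following is a deliberately simplified sketch: it ignores taxes, depreciation, residual value, and any revenue from grid services, and every figure a reader plugs in would be their own assumption.

```python
from typing import List


def present_value(cash_flows: List[float], discount_rate: float) -> float:
    """Present value of year-end cash flows; the first occurs one year from now."""
    return sum(cf / (1 + discount_rate) ** year
               for year, cf in enumerate(cash_flows, start=1))


def lease_cost_pv(annual_lease: float, years: int, discount_rate: float) -> float:
    """PV of a flat annual lease payment over the lease term."""
    return present_value([annual_lease] * years, discount_rate)


def ownership_cost_pv(upfront_capex: float, annual_opex: float,
                      years: int, discount_rate: float) -> float:
    """PV of owning: capital spent today plus flat annual operating cost."""
    return upfront_capex + present_value([annual_opex] * years, discount_rate)


if __name__ == "__main__":
    # Hypothetical figures in $ millions over a 20-year horizon at 8%.
    print(lease_cost_pv(10.0, 20, 0.08))
    print(ownership_cost_pv(100.0, 2.0, 20, 0.08))
```

The discount rate drives the answer: a higher cost of capital favors leasing because it penalizes the upfront spend that ownership requires.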

FAQ

What power density should I plan for AI workloads?

Modern AI training infrastructure requires 40-60kW per rack, roughly three to four times traditional data center density. Plan for growth to 80kW+ per rack as GPU technology advances and AI models become more computationally intensive over the next 3-5 years.

How long does grid interconnection typically take?

Interconnection timelines vary dramatically by region, ranging from 18 months in areas with available capacity to seven years in constrained markets like Northern Virginia. Texas has streamlined processes that can reduce timelines to 24-36 months with proper planning and utility engagement.

Can renewable energy reliably power AI infrastructure?

Yes, when properly integrated with energy storage and backup generation systems. Successful renewable strategies combine solar or wind generation with battery storage for short-term bridging and natural gas generation for extended periods of low renewable output, ensuring AI operations never face power interruptions.

What makes powered land development different from traditional data center site selection?

Powered land development starts with identifying regions where gigawatt-scale power is available or can be developed, then builds energy campuses optimized for that capacity. This inverts traditional approaches that select sites based on fiber, real estate costs, or user proximity, then struggle to secure adequate power.

Should we build our own energy infrastructure or lease from specialists?

Most enterprises benefit from partnering with energy infrastructure specialists who navigate utility relationships, grid interconnection processes, and renewable energy development. This preserves capital and management attention for technology investments while ensuring access to required power capacity with predictable long-term costs through structured lease agreements.

Are You Ready to Build AI-Ready Infrastructure?

The window for securing advantageous AI infrastructure positions is narrowing rapidly. Organizations that secure power capacity and begin development now will operate AI workloads while competitors remain stuck in interconnection queues. With 20% of planned data center projects at risk of delays, proactive planning separates leaders from followers in the AI race.

Success requires moving beyond traditional data center thinking toward comprehensive energy infrastructure strategies. This means evaluating sites based on power availability first, partnering with organizations that understand both renewable energy development and grid interconnection, and committing to timelines measured in years rather than months.

The digital infrastructure planning landscape is being redrawn around AI capabilities. Organizations that approach infrastructure as an enabler rather than a constraint position themselves to capture AI opportunities others will miss.

Hanwha Data Centers specializes in developing energy campuses designed specifically for AI workloads, combining powered land development with renewable energy integration and grid interconnection expertise. Our approach delivers the gigawatt-scale power infrastructure AI operations demand, with timelines and financial structures aligned to enterprise requirements. Contact us to discuss how we can support your AI infrastructure needs.