Key Takeaways

AI data centers require fundamentally different energy solutions than traditional computing facilities, with power densities reaching 150+ kW per rack and unprecedented 24/7 reliability demands.

- Power requirements have grown dramatically: Individual AI training runs require hundreds of megawatts, and projects like OpenAI’s Stargate target 10 gigawatts of total capacity across multiple data centers, comparable to the demand of entire metropolitan areas

- Liquid cooling has become essential: Cooling accounts for 35-40% of total energy consumption in AI data centers, and direct-to-chip and immersion systems provide the thermal management that air cooling cannot achieve at GPU densities exceeding 80 kW per rack

- Renewable integration is mission-critical: Major hyperscalers have committed billions to clean energy, with Amazon reporting that it matched 100% of its electricity consumption with renewable energy in 2023 and Microsoft contracting 10.5 GW of renewable capacity through strategic partnerships

- Grid constraints are forcing geographic shifts: Traditional technology hubs face 5+ year wait times for power connections, driving development toward secondary markets with abundant renewable resources and available transmission capacity

Organizations must partner with energy developers who understand both renewable generation and advanced thermal management to succeed in this rapidly evolving landscape.

The artificial intelligence revolution has created an unprecedented energy crisis that could reshape the entire digital economy. With Goldman Sachs Research projecting a 165% increase in data center power demand by 2030, organizations worldwide are scrambling to secure the massive amounts of power that AI data centers require. This surge has transformed power infrastructure from a secondary consideration into the primary bottleneck limiting AI development and deployment.

The traditional power grid was never designed to handle the rapid deployment of AI infrastructure that can consume as much electricity as entire cities. Unlike conventional data centers that might peak at 30 MW, AI facilities routinely require 200+ MW, with some planned developments reaching multi-gigawatt scale. This unprecedented demand has created power supply shortages in major technology markets worldwide.

MIT research indicates that processing a million tokens generates carbon emissions equivalent to driving a gas-powered vehicle 5-20 miles, while creating a single AI-generated image uses energy equivalent to fully charging a smartphone.

How Have AI Power Requirements Transformed Data Center Infrastructure?

The energy demands of artificial intelligence represent a fundamental departure from traditional computing workloads that have shaped data center design for decades. Where conventional servers might draw 200-400 watts per unit, AI processing requires sophisticated hardware configurations that consume dramatically more power while generating extreme heat that standard systems cannot handle.

GPU-Driven Power Density Revolution

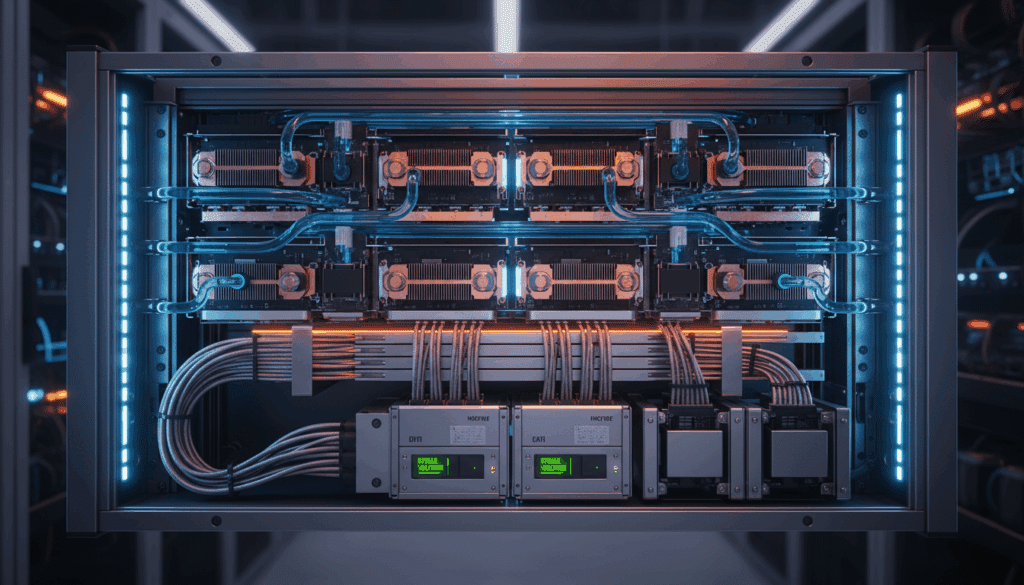

Modern AI workloads center around Graphics Processing Units (GPUs) that were originally designed for video rendering but have proven exceptionally capable for the parallel processing that AI requires. NVIDIA’s H100 chips, which power many current AI systems, consume up to 700 watts each, but the total power requirement per GPU reaches 1,200-1,400 watts when accounting for cooling, power conditioning, and supporting infrastructure. When deployed in typical AI configurations with 8-10 GPUs per server, racks can consume 80-150 kW compared to 10-15 kW for traditional enterprise server racks.

Next-generation processors push these requirements even further. NVIDIA’s upcoming GB200 systems are designed for 1,200 watts per chip, creating total power demands approaching 2,000 watts per GPU when fully configured. These power densities require liquid cooling systems and specialized electrical infrastructure that go well beyond what traditional data centers provide. AI data centers therefore demand entirely new approaches to electrical distribution and thermal management.
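The rack-level figures in this section follow from simple multiplication. The Python sketch below uses the per-GPU wattages quoted in the text; the servers-per-rack counts are assumptions chosen for illustration, not vendor specifications.

```python
def rack_power_kw(all_in_watts_per_gpu: float, gpus_per_server: int,
                  servers_per_rack: int) -> float:
    """Estimate rack power draw in kW from a per-GPU all-in wattage."""
    return all_in_watts_per_gpu * gpus_per_server * servers_per_rack / 1000.0


# Per-GPU wattages come from the text; servers_per_rack values are assumed.
h100_low = rack_power_kw(1200, gpus_per_server=8, servers_per_rack=8)
h100_high = rack_power_kw(1400, gpus_per_server=10, servers_per_rack=10)
gb200_est = rack_power_kw(2000, gpus_per_server=8, servers_per_rack=8)

print(f"H100-class rack: {h100_low:.1f}-{h100_high:.1f} kW")
print(f"GB200-class rack (assumed layout): {gb200_est:.1f} kW")
```

Under these assumed layouts the H100-class estimate lands close to the 80-150 kW range cited above, which suggests the quoted per-GPU and per-rack figures are mutually consistent.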

The synchronous nature of AI training creates additional complexity. Unlike web servers or databases that experience variable loads throughout the day, AI training runs operate thousands of GPUs simultaneously at maximum capacity for extended periods. RAND Corporation analysis suggests that individual AI training runs could require up to 1 GW in a single location by 2028, making scalable AI power a critical infrastructure requirement.

Understanding 24/7 Operational Requirements

AI operations cannot tolerate the brief power interruptions that other computing workloads might handle gracefully. Machine learning model training represents months of computational investment that can be completely lost due to power interruptions lasting just seconds. This requirement for absolute reliability has forced AI operators to implement redundant power systems, backup generation, and sophisticated energy storage that ensures continuous operation under all conditions.
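To give a sense of scale for the energy storage involved, the sketch below estimates the battery capacity needed to carry a facility through a short gap until backup generation takes over. The 200 MW load echoes the facility size mentioned earlier; the 60-second bridge time and the 80% usable fraction are illustrative assumptions, not figures from any operator.

```python
def ride_through_mwh(load_mw: float, bridge_seconds: float,
                     usable_fraction: float = 0.8) -> float:
    """Nameplate battery energy (MWh) needed to carry load_mw for bridge_seconds.

    usable_fraction is an assumed share of nameplate capacity that can
    actually be discharged.
    """
    if not 0 < usable_fraction <= 1:
        raise ValueError("usable_fraction must be in (0, 1]")
    delivered_mwh = load_mw * bridge_seconds / 3600.0
    return delivered_mwh / usable_fraction


# 200 MW facility bridging an assumed 60-second gap.
needed_mwh = ride_through_mwh(load_mw=200, bridge_seconds=60)
print(f"Nameplate storage needed: {needed_mwh:.2f} MWh")
```

The energy figure is modest; the harder design constraint in practice is usually delivering the full facility load instantly, which is a power rating question rather than an energy one.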

Green data infrastructure has become essential not just for environmental compliance but for operational efficiency. Research suggests that AI workloads could account for up to 21% of global energy demand by 2030 when the cost of delivering AI services to users is included, making sustainable power sources both an environmental and economic imperative for long-term viability.

What Are the Six Critical Energy Solutions for AI Data Centers?

1. How Do Advanced Liquid Cooling Systems Enable Higher Power Densities?

Traditional air cooling systems have reached their physical limits for AI workloads, making liquid cooling essential rather than optional. Cooling systems represent 35-40% of total energy consumption in AI data centers, making thermal management a critical component of overall energy strategy.
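The cooling share quoted above can be translated into an implied Power Usage Effectiveness (PUE), defined as total facility energy divided by IT equipment energy. The sketch below is a rough calculation; the 5% share for non-cooling overhead (power distribution losses, lighting) is an assumption, not a figure from the text.

```python
def implied_pue(cooling_share: float, other_overhead_share: float = 0.05) -> float:
    """PUE implied by the fraction of total facility energy spent on cooling.

    other_overhead_share is an assumed fraction for non-cooling overhead.
    """
    it_share = 1.0 - cooling_share - other_overhead_share
    if it_share <= 0:
        raise ValueError("cooling and overhead shares leave no energy for IT load")
    return 1.0 / it_share


pue_at_35 = implied_pue(0.35)
pue_at_40 = implied_pue(0.40)
print(f"Cooling at 35% of total: PUE ~ {pue_at_35:.2f}")
print(f"Cooling at 40% of total: PUE ~ {pue_at_40:.2f}")
```

Lowering the cooling share is the main lever for moving PUE toward 1.0, which is why thermal management features so prominently in energy strategy.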

Direct-to-chip cooling has emerged as the most practical solution for immediate deployment. Cold plates are mounted directly onto CPUs, GPUs, memory modules, and voltage regulators, with closed-loop systems circulating coolant to remove heat at the source. Modern direct-to-chip systems can remove 70-80% of heat loads directly at the processor level, reducing the burden on facility-level cooling infrastructure.
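The heat split described above can be sketched numerically. The example assumes a 100 kW rack, a 75% liquid capture rate (the midpoint of the 70-80% range), plain water as the coolant, and a 10 K temperature rise across the cold plates; production loops often use water-glycol mixtures with somewhat different properties.

```python
WATER_CP_J_PER_KG_K = 4186.0  # specific heat of water (assumed coolant)


def split_heat_load(rack_kw: float, liquid_capture: float) -> tuple[float, float]:
    """Return (heat removed by liquid, heat left for air cooling) in kW."""
    liquid_kw = rack_kw * liquid_capture
    return liquid_kw, rack_kw - liquid_kw


def coolant_flow_kg_per_s(heat_kw: float, delta_t_k: float) -> float:
    """Mass flow needed to carry heat_kw at a given coolant temperature rise."""
    return heat_kw * 1000.0 / (WATER_CP_J_PER_KG_K * delta_t_k)


liquid_kw, air_kw = split_heat_load(rack_kw=100.0, liquid_capture=0.75)
flow = coolant_flow_kg_per_s(liquid_kw, delta_t_k=10.0)
print(f"Liquid loop: {liquid_kw:.0f} kW, air side: {air_kw:.0f} kW")
print(f"Coolant flow: {flow:.2f} kg/s")
```

The flow calculation uses the standard relation Q = m * c_p * ΔT, so halving the allowed temperature rise doubles the required flow.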

Immersion cooling takes the concept further by submerging entire servers in thermally conductive dielectric fluids. Two-phase immersion systems enable the coolant to vaporize upon absorbing heat and re-condense, transferring heat away with exceptional efficiency. Companies like Submer, Green Revolution Cooling, and Asperitas have deployed production-scale immersion solutions with measurable power savings and reliability improvements for high-density AI clusters.

2. Why Are Hybrid Renewable Energy Systems Essential?

The most effective energy solutions for AI data centers combine multiple renewable sources to ensure consistent power delivery that matches AI’s continuous operational requirements. Solar generation provides predictable daytime power, while wind resources often complement solar by generating electricity during evening hours when solar output decreases.

Goldman Sachs research indicates that wind and solar could serve roughly 80% of a data center’s power demand if paired with storage, but some form of baseload generation is needed to meet 24/7 demands. Advanced systems integrate both sources with large-scale Battery Energy Storage Systems (BESS) that provide rapid response capabilities and sustained power delivery during low-production periods.

These hybrid systems address the intermittency challenges that have historically limited renewable energy adoption for mission-critical applications. Smart inverters and grid-tied systems enable facilities to participate in energy markets and provide grid stability services, creating additional revenue streams while supporting the broader energy transition.
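How much of a flat, 24/7 load wind, solar, and storage can cover depends heavily on sizing. The sketch below is a toy hourly dispatch model, not a planning tool: the solar and wind profiles are synthetic sine and cosine shapes invented for illustration, battery losses are ignored, and every capacity value is an assumption.

```python
import math


def simulate_coverage(load_mw: float, solar_mw: float, wind_mw: float,
                      battery_mwh: float, battery_power_mw: float,
                      hours: int = 24 * 7, initial_soc_fraction: float = 0.5) -> float:
    """Fraction of a flat load served by solar + wind + battery (no losses)."""
    soc = battery_mwh * initial_soc_fraction  # battery state of charge in MWh
    served = 0.0
    for h in range(hours):
        hod = h % 24
        # Synthetic profiles: solar peaks at noon, wind is stronger at night.
        solar = solar_mw * max(0.0, math.sin(math.pi * (hod - 6) / 12))
        wind = wind_mw * (0.35 + 0.15 * math.cos(2 * math.pi * (hod - 2) / 24))
        gen = solar + wind
        if gen >= load_mw:
            # Surplus hour: serve the load and charge the battery.
            soc += min(gen - load_mw, battery_power_mw, battery_mwh - soc)
            served += load_mw
        else:
            # Deficit hour: discharge as much as power and energy limits allow.
            discharge = min(load_mw - gen, battery_power_mw, soc)
            soc -= discharge
            served += gen + discharge
    return served / (load_mw * hours)


no_storage = simulate_coverage(100, 150, 120, battery_mwh=0, battery_power_mw=0)
with_storage = simulate_coverage(100, 150, 120, battery_mwh=400, battery_power_mw=100)
print(f"Coverage without storage: {no_storage:.0%}")
print(f"Coverage with storage:    {with_storage:.0%}")
```

Whatever gap remains after storage is the portion that baseload generation or grid supply has to fill.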

3. How Do On-Site Generation and Microgrids Enhance Reliability?

Microgrids offer several advantages for AI applications, including reduced transmission losses, improved power quality, and the ability to customize electrical characteristics for specific equipment requirements. Some facilities implement DC microgrids that eliminate multiple power conversions, improving overall system efficiency by 5-10% compared to traditional AC distribution systems.
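The efficiency gain from removing conversion stages comes from multiplying the efficiency of each stage in the power path. The stage names and efficiency values below are illustrative assumptions, not measurements from any specific facility.

```python
import math
from typing import Iterable


def chain_efficiency(stage_efficiencies: Iterable[float]) -> float:
    """End-to-end efficiency of power conversion stages connected in series."""
    return math.prod(stage_efficiencies)


# Assumed per-stage efficiencies, for illustration only.
AC_CHAIN = {"double-conversion UPS": 0.94, "PDU transformer": 0.98,
            "server power supply": 0.94}
DC_CHAIN = {"facility rectifier": 0.97, "rack DC-DC converter": 0.96}

ac_eff = chain_efficiency(AC_CHAIN.values())
dc_eff = chain_efficiency(DC_CHAIN.values())
gain_points = (dc_eff - ac_eff) * 100

print(f"AC path: {ac_eff:.1%}, DC path: {dc_eff:.1%}")
print(f"Gain: {gain_points:.1f} percentage points")
```

With these assumed values the gain falls inside the 5-10% range cited above; real results depend on the equipment and on how heavily each stage is loaded.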

Advanced microgrid management systems use artificial intelligence to optimize energy flows in real-time, automatically balancing renewable generation, energy storage, and load demands to maximize efficiency while maintaining reliability standards. These systems can predict and respond to changes in computational workloads, weather patterns, and grid conditions.

4. What Role Do Advanced Energy Forecasting Tools Play?

Accurate energy forecasting has become critical for AI infrastructure planning as traditional demand prediction models prove inadequate for the explosive growth and unique characteristics of AI workloads. Modern forecasting tools combine artificial intelligence, real-time grid data, and market analytics to provide the predictive capabilities that energy planners require.

The International Energy Agency has launched an Observatory that will gather the most comprehensive data worldwide on AI’s electricity needs while tracking cutting-edge applications across the energy sector. This platform’s AI agent enables users to interact with findings conversationally, making complex energy data more accessible to decision-makers across the industry.

Hitachi Energy’s Nostradamus AI platform represents one of the first AI forecasting solutions purpose-built for the energy industry. The cloud-based platform generates forecasts that are over 20% more accurate than traditional methods for solar and wind generation, market pricing, and load predictions, enabling more precise renewable energy procurement and better coordination between computational workloads and energy availability.
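A claim such as "20% more accurate" is usually read as a relative reduction in forecast error. The sketch below shows one common way to measure that using mean absolute percentage error (MAPE); the load values and both forecasts are made-up numbers, and the choice of MAPE as the metric is an assumption, since the text does not say which metric the vendor uses.

```python
from typing import Sequence


def mape(actual: Sequence[float], forecast: Sequence[float]) -> float:
    """Mean absolute percentage error, in percent."""
    if not actual or len(actual) != len(forecast):
        raise ValueError("inputs must be non-empty and of equal length")
    return 100.0 * sum(abs(a - f) / abs(a) for a, f in zip(actual, forecast)) / len(actual)


def relative_improvement(baseline_error: float, new_error: float) -> float:
    """Percentage reduction in error relative to the baseline."""
    return 100.0 * (baseline_error - new_error) / baseline_error


# Hypothetical hourly load (MW) and two hypothetical forecasts.
actual_mw = [100.0, 120.0, 80.0, 90.0]
baseline_forecast = [110.0, 108.0, 88.0, 99.0]
improved_forecast = [108.0, 110.4, 86.4, 97.2]

baseline_mape = mape(actual_mw, baseline_forecast)
improved_mape = mape(actual_mw, improved_forecast)
gain = relative_improvement(baseline_mape, improved_mape)
print(f"Baseline MAPE {baseline_mape:.1f}%, improved MAPE {improved_mape:.1f}%")
print(f"Relative improvement: {gain:.0f}%")
```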

5. How Does Smart Grid Integration Enable Dynamic Load Management?

AI data centers are evolving from passive energy consumers to active grid participants that can communicate with utility systems, respond to grid conditions, and even supply power back to the network during emergencies. Advanced metering infrastructure provides real-time visibility into energy consumption patterns, enabling operators to identify optimization opportunities and respond to dynamic pricing signals.

Load balancing capabilities allow facilities to shift computational workloads based on energy availability and cost. During periods of high renewable generation, facilities can increase processing intensity for non-time-sensitive tasks like AI model training. Conversely, during grid stress events, facilities can temporarily reduce loads while maintaining critical operations through energy storage systems.
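The load-shifting idea above can be sketched as a simple greedy scheduler: given an hourly forecast of renewable availability, run a deferrable job in the hours with the most renewable output. The forecast values are hypothetical, and a production scheduler would also account for price signals, job contiguity, and checkpoint overhead.

```python
def schedule_deferrable(renewable_mw: list[float], hours_needed: int) -> list[int]:
    """Pick the hours with the highest forecast renewable output.

    Returns the chosen hour indices in chronological order.
    """
    if hours_needed > len(renewable_mw):
        raise ValueError("not enough hours in the forecast window")
    ranked = sorted(range(len(renewable_mw)),
                    key=lambda h: renewable_mw[h], reverse=True)
    return sorted(ranked[:hours_needed])


# Hypothetical 12-hour renewable forecast in MW.
forecast_mw = [20, 15, 10, 30, 80, 120, 150, 140, 90, 40, 25, 20]
run_hours = schedule_deferrable(forecast_mw, hours_needed=4)
print(f"Run deferrable training in hours: {run_hours}")
```

For this forecast the scheduler selects hours 5 through 8, the midday block where renewable output peaks.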

Demand response programs provide additional revenue opportunities while supporting grid stability. AI data centers can participate in these programs by temporarily reducing power consumption during peak demand periods, earning payments from utilities while helping prevent grid overloads.

6. Why Is Geographic Distribution Becoming a Strategic Advantage?

The most sophisticated AI operators are implementing geographic distribution strategies that optimize renewable energy access while reducing grid stress and development risks. Rather than concentrating facilities in traditional technology hubs that face power constraints, these strategies place computing resources near abundant renewable generation sources.

Texas offers exceptional wind resources and independent grid operations that facilitate renewable energy procurement. The Southwest provides outstanding solar potential with supportive state policies for clean energy development. Secondary markets like Ohio, Wyoming, and Indiana are experiencing unprecedented investment as organizations follow available power rather than traditional proximity considerations.

This geographic diversification maximizes renewable energy utilization while providing operational flexibility during extreme weather events or grid disturbances. Energy campus development in these markets can significantly reduce project timelines compared to power-constrained primary markets.

| Solution Category | Primary Benefit | Implementation Timeline | Energy Impact |
| --- | --- | --- | --- |
| Liquid Cooling Systems | 40% energy reduction for cooling | 12-24 months | Direct operational savings |
| Hybrid Renewable Systems | 80% clean energy capacity | 18-36 months | Long-term cost stability |
| On-Site Generation | Energy independence | 24-48 months | Enhanced reliability |
| Smart Grid Integration | Dynamic optimization | 6-18 months | 15-25% efficiency gains |

Real-World Case Studies: How Industry Leaders Address AI Energy Challenges

What Is OpenAI’s Revolutionary Stargate Initiative?

OpenAI’s ambitious Stargate project represents the most audacious attempt to address AI energy requirements at unprecedented scale. The initiative plans to develop up to 10 gigawatts of total capacity across multiple data centers, with each major facility targeting several gigawatts of power, comparable to the electricity consumption of an entire state such as New Hampshire.
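To compare a capacity figure in gigawatts with annual electricity consumption statistics, it helps to convert it into energy per year. The sketch below does that conversion; the utilization values are assumptions, since the text gives only the capacity target.

```python
HOURS_PER_YEAR = 8760


def annual_energy_twh(capacity_gw: float, utilization: float = 1.0) -> float:
    """Annual energy in TWh for a given capacity and average utilization."""
    if not 0 <= utilization <= 1:
        raise ValueError("utilization must be between 0 and 1")
    return capacity_gw * HOURS_PER_YEAR * utilization / 1000.0


full_load_twh = annual_energy_twh(10.0)        # 10 GW running flat out
partial_load_twh = annual_energy_twh(10.0, 0.8)  # assumed 80% average utilization
print(f"10 GW at 100% utilization: {full_load_twh:.1f} TWh/year")
print(f"10 GW at 80% utilization:  {partial_load_twh:.1f} TWh/year")
```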

The project’s scale has forced OpenAI to explore alternative power sources including nuclear energy, with discussions around Small Modular Reactors (SMRs) and partnerships with utility companies. The company’s approach demonstrates how leading AI organizations are thinking beyond traditional grid connections toward comprehensive energy partnerships that can deliver gigawatt-scale power reliably and sustainably.

How Has Microsoft Pioneered Nuclear Partnerships for AI?

Microsoft has emerged as a leader in innovative energy procurement for AI infrastructure, contracting 10.5 gigawatts of renewable capacity through partnerships with Brookfield Asset Management and other major energy developers. The company’s approach integrates renewable energy planning directly into data center site selection, prioritizing locations with strong renewable resource potential over traditional proximity considerations.

Microsoft’s recent agreement with Constellation Energy to restart the Three Mile Island Unit 1 nuclear reactor exemplifies the company’s comprehensive approach to scalable AI power. The 835-megawatt facility will provide carbon-free power exclusively for Microsoft’s data centers starting in 2028, demonstrating how major AI operators are securing long-term power supplies through innovative partnerships.

The company has also pioneered multi-data-center training techniques that distribute AI workloads across geographically dispersed facilities. This approach reduces power concentration at individual sites while maximizing renewable energy utilization across different regions with varying generation profiles.

How Is Meta Transforming Its Infrastructure for AI?

Meta’s evolution from a traditional social media company to an AI-focused organization illustrates the massive infrastructure transformation that AI adoption requires. The company operates 650,000 H100-equivalent GPUs and has committed to dramatic expansions of both computing capacity and renewable energy procurement to support next-generation AI applications.

Meta’s approach emphasizes green data infrastructure through comprehensive renewable energy partnerships. The company has become one of the largest corporate purchasers of renewable energy globally, with long-term Power Purchase Agreements (PPAs) supporting new solar and wind projects worldwide. These agreements provide the price stability and environmental compliance that Meta requires while supporting the development of new renewable generation capacity.

The company has also invested heavily in advanced cooling technologies and power optimization systems that maximize computational output per unit of energy consumed. Meta’s custom AI chips and optimized software stacks demonstrate how organizations can address energy challenges through both infrastructure improvements and technological innovation.

What Implementation Strategies Work Best for AI Energy Solutions?

Successfully implementing energy solutions for AI requires a systematic approach that addresses both immediate operational needs and long-term scalability requirements. The most effective strategies combine multiple energy solutions while maintaining the flexibility to adapt as AI workloads and energy technologies continue evolving.

Phased Development Approach

Leading AI operators implement energy solutions through carefully planned phases that balance immediate needs with long-term capabilities. Phase one typically focuses on securing adequate grid connections and implementing basic renewable energy procurement through Power Purchase Agreements (PPAs) that provide immediate access to clean energy.

Phase two involves deploying on-site renewable generation and energy storage systems that reduce grid dependence while providing enhanced reliability. Solar arrays and wind turbines can be constructed relatively quickly compared to grid infrastructure upgrades, making them attractive options for organizations facing immediate power constraints in traditional markets.

Phase three integrates advanced systems including microgrids, smart energy management platforms, and grid services capabilities that optimize both energy costs and reliability. These sophisticated systems require significant investment but provide long-term operational advantages and revenue opportunities through participation in energy markets.

Cooling System Integration Considerations

Successful energy implementations require careful coordination between renewable generation, energy storage, and thermal management infrastructure. Liquid cooling systems must be sized appropriately for both energy efficiency and performance requirements, while power conditioning equipment must handle the complex electrical characteristics that AI workloads create.

Advanced liquid cooling systems can be designed to work synergistically with renewable energy systems, using excess cooling capacity to provide thermal energy storage or grid services during peak demand periods. Direct-to-chip cooling solutions offer the most practical balance of efficiency and implementation complexity for existing facilities.

Strategic Partnership Requirements

The complexity and scale of AI energy requirements make strategic partnerships essential for most organizations. Energy campus developers who specialize in renewable energy integration can provide comprehensive solutions that address land acquisition, zoning approvals, utility coordination, and ongoing operational support.

These partnerships become particularly valuable when navigating the extended timelines required for grid connections and renewable development. Partners with established relationships can often accelerate permitting and construction processes that might otherwise delay critical AI infrastructure deployment.

| Implementation Component | Timeline | Key Benefits | Complexity Level |
| --- | --- | --- | --- |
| Grid Connection & PPAs | 6-18 months | Immediate clean energy access | Medium |
| On-site Solar & Storage | 18-36 months | Energy independence | Medium-High |
| Liquid Cooling Systems | 12-24 months | Thermal efficiency gains | Medium |
| Microgrid Integration | 24-48 months | Maximum resilience | High |

What Emerging Technologies Will Shape AI Energy’s Future?

The trajectory of AI development and energy technology suggests that current infrastructure challenges represent just the beginning of a fundamental transformation in how society generates, distributes, and consumes electricity. Green data infrastructure will become the standard rather than the exception as AI applications become more prevalent and energy costs continue rising.

Small Modular Reactors and Advanced Nuclear

Several emerging technologies show promise for addressing the long-term energy challenges that AI development creates. Small Modular Reactors (SMRs) could provide the carbon-free baseload power that AI facilities require, though commercial deployment remains several years away. Microsoft’s Three Mile Island partnership demonstrates the potential for nuclear energy to provide the reliable, large-scale power that AI operations demand.

Enhanced geothermal systems offer the potential for 24/7 renewable generation in geographic areas previously unsuitable for traditional geothermal development. These systems could provide consistent baseload power while maintaining the environmental benefits of renewable energy generation.

Green Hydrogen and Long-Term Storage

Green hydrogen production represents another promising avenue, particularly for long-term energy storage and backup power applications. Facilities can use excess renewable generation to produce hydrogen that provides carbon-free power during extended periods of low renewable generation, creating truly self-sufficient energy ecosystems.

Advanced battery chemistries beyond lithium-ion are showing promise for longer duration storage and improved energy density. Flow batteries and other technologies could provide the multi-day storage capacity that enables facilities to operate independently of grid power for extended periods.

AI-Driven Energy Optimization

Artificial intelligence itself is becoming a critical tool for optimizing energy consumption and management. Machine learning algorithms can predict energy demand patterns, optimize renewable generation utilization, and automatically adjust facility operations to minimize energy consumption while maintaining performance standards.

These systems can coordinate across multiple data centers to balance loads based on regional renewable generation patterns, weather forecasts, and computational requirements. Advanced optimization could help facilities approach net-zero energy operation, generating as much renewable energy as they consume, by closely matching renewable generation with computational demand.
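Cross-site coordination of this kind can be illustrated with a two-pass greedy allocation: first place load where renewable output is available, then spill any remainder into leftover site capacity. The site names, capacities, and renewable figures are hypothetical, and a real optimizer would also weigh latency, data locality, and energy prices.

```python
def allocate_load(total_mw: float,
                  sites: list[tuple[str, float, float]]) -> dict[str, float]:
    """Allocate load across sites given (name, capacity_mw, renewable_mw)."""
    allocation = {name: 0.0 for name, _, _ in sites}
    remaining = total_mw
    # Pass 1: use renewable-backed capacity, most renewable output first.
    for name, capacity, renewable in sorted(sites, key=lambda s: s[2], reverse=True):
        take = min(remaining, capacity, renewable)
        allocation[name] += take
        remaining -= take
    # Pass 2: place whatever is left in any spare site capacity.
    for name, capacity, _ in sites:
        take = min(remaining, capacity - allocation[name])
        allocation[name] += take
        remaining -= take
    if remaining > 1e-9:
        raise ValueError("total load exceeds combined site capacity")
    return allocation


# Hypothetical sites: (name, capacity in MW, current renewable output in MW).
example_sites = [("site_a", 300.0, 250.0), ("site_b", 200.0, 120.0),
                 ("site_c", 150.0, 40.0)]
plan = allocate_load(450.0, example_sites)
print(plan)
```

In this example 410 MW of the 450 MW load is matched to renewable output, and the remaining 40 MW lands on the first site with spare capacity.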

How Should Organizations Choose Strategic Energy Partners?

The unprecedented scale and complexity of AI energy requirements make strategic partnerships essential for most organizations. The most successful approaches combine energy expertise, infrastructure development capabilities, and long-term operational support under comprehensive partnership agreements that can adapt to evolving requirements.

Energy Campus Development

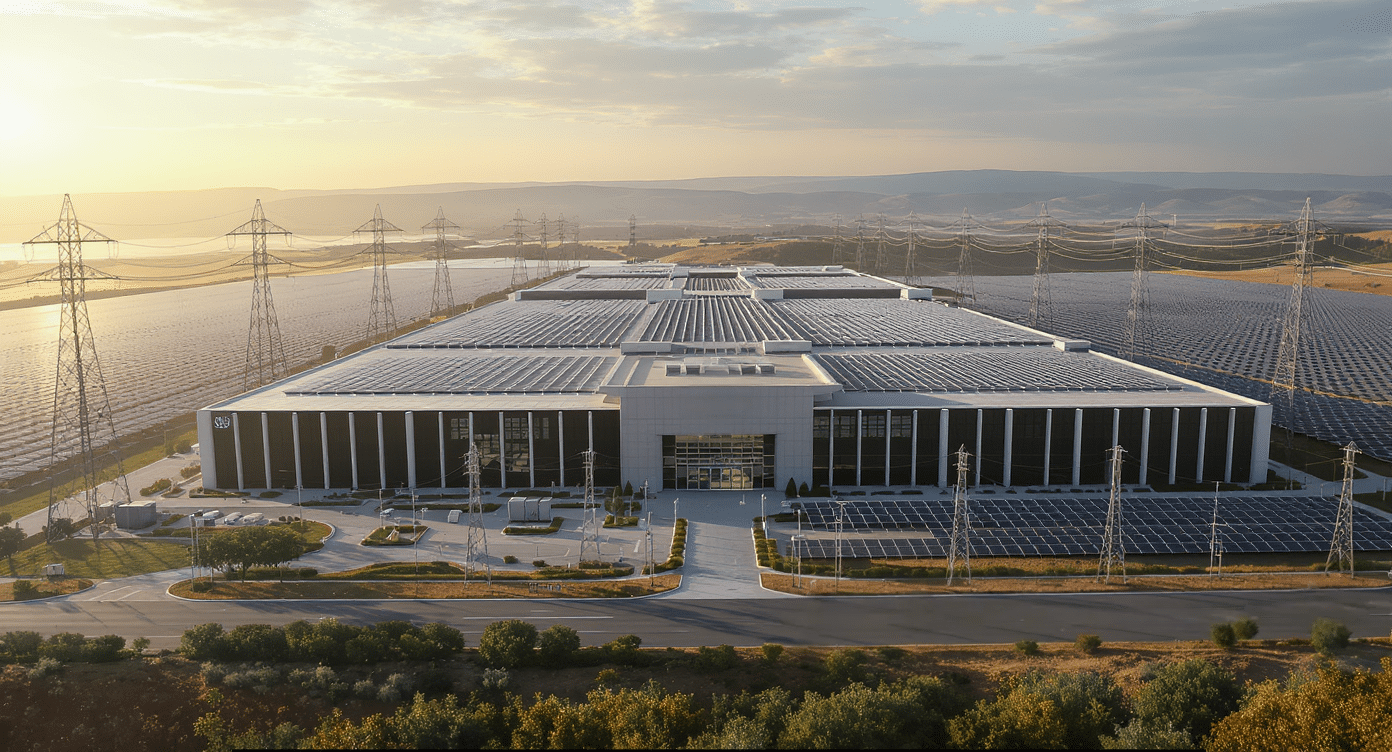

Forward-thinking organizations are moving beyond traditional data center development toward integrated energy campuses that combine renewable generation, energy storage, and computing infrastructure on optimized sites. These campuses provide energy independence, enhanced reliability, and the ability to participate in energy markets while supporting AI workloads. Integrated energy campus development is among the most scalable ways to meet the long-term power demands of AI data centers.

Energy campus developers handle the complex coordination required between land acquisition, renewable energy development, utility partnerships, and data center construction. This integrated approach can reduce development timelines by 2-3 years compared to pursuing each component separately, while ensuring optimal coordination between energy and computing infrastructure.

Technical Integration Capabilities

Effective energy partners must demonstrate deep understanding of both renewable energy systems and AI infrastructure requirements. This includes expertise in liquid cooling integration, power quality management for sensitive AI equipment, and the electrical distribution systems required for high-density GPU clusters.

Partners should provide comprehensive monitoring and optimization services that can adapt to changing AI workloads and energy market conditions. These capabilities become increasingly important as facilities scale and energy management becomes more complex.

Long-Term Operational Support

The most strategic partnerships extend beyond initial construction to provide ongoing operational support and expansion capabilities. As AI workloads grow and energy technologies evolve, partners should be positioned to scale renewable generation and infrastructure capacity seamlessly while maintaining operational excellence.

These partnerships also provide access to emerging energy technologies and grid services opportunities that can offset operational costs while supporting the transition to a more sustainable energy system. The best partners serve as strategic advisors for navigating the rapidly evolving intersection of AI and energy infrastructure.

Frequently Asked Questions

How much power do modern AI data centers actually require?

Modern AI data centers require 3-5 times more power than traditional facilities, with individual GPUs consuming 700-1,200 watts plus cooling and infrastructure overhead. Large AI training facilities can require hundreds of megawatts, while planned developments like OpenAI’s Stargate project aim for 10 gigawatts total capacity across multiple facilities.

What liquid cooling solutions work best for AI workloads?

Direct-to-chip cooling systems are currently the most practical solution, providing 70-80% heat removal efficiency while balancing implementation complexity and cost. Immersion cooling offers superior thermal performance but requires specialized infrastructure. Hybrid systems combining both approaches are emerging for maximum efficiency.

How long does it take to secure adequate power for AI data centers?

Traditional grid connections for AI-scale facilities typically require 3-7 years from planning to operation, including utility coordination, permitting, and infrastructure construction. Energy campus development with integrated renewable generation can sometimes reduce these timelines while providing enhanced reliability and cost stability.

What role does geographic location play in AI energy planning?

Geographic location is increasingly critical for AI energy planning. Texas offers exceptional wind resources, the Southwest provides outstanding solar potential, and emerging markets provide streamlined regulatory environments. Strategic site selection based on renewable energy access can significantly improve project economics and reduce development timelines.

How do advanced forecasting tools improve AI energy management?

AI-powered forecasting tools provide over 20% more accurate predictions than traditional methods for renewable generation, load patterns, and market conditions. These systems enable better coordination between computational workloads and energy availability, optimizing both cost and sustainability while maintaining reliability standards.

Building Tomorrow’s AI Infrastructure Today

The energy challenges facing AI data centers represent both the greatest obstacle and the most significant opportunity in the technology sector’s evolution toward artificial intelligence. Organizations that develop comprehensive energy strategies combining renewable generation, advanced cooling systems, and strategic partnerships will gain decisive competitive advantages in reliability, costs, and environmental compliance.

Success requires moving beyond traditional approaches toward integrated energy solutions that treat power as a strategic asset rather than a utility service. The companies that recognize this transformation and act decisively will position themselves to capitalize on AI’s potential while supporting the sustainable energy transition that society requires.

Hanwha Data Centers delivers the comprehensive energy campus development and renewable integration expertise that next-generation AI infrastructure demands, combining technical excellence with financial strength to power tomorrow’s digital economy. Contact us today to explore how our proven approach can accelerate your AI infrastructure goals.