Key Takeaways

The AI infrastructure carbon footprint represents one of the fastest-growing emissions sources globally, demanding immediate infrastructure planning and renewable energy integration.

- Global data center power consumption is projected to reach roughly 945 terawatt-hours by 2030, with AI operations growing from 5-15% to 35-50% of total consumption

- Training a single large language model such as GPT-3 generates roughly 552 tons of carbon dioxide and consumes about 1,287 megawatt-hours, enough electricity to power 120 homes for a year

- Annual data center emissions will rise from 200 million to 600 million metric tons by 2030, driven primarily by AI infrastructure expansion

- Strategic site selection, integrated renewable energy campuses, and advanced efficiency measures provide proven pathways to net-zero data center operations

The artificial intelligence revolution has arrived with an environmental price tag that most organizations are only beginning to understand. Every ChatGPT query, every AI model training session, every generative AI application running in the cloud contributes to a rapidly expanding carbon footprint that threatens to undermine global climate goals. According to the International Energy Agency, data center electricity consumption will more than double between 2024 and 2030, reaching 945 terawatt-hours annually. This explosive growth in AI power demand transforms energy infrastructure from a technical consideration into a strategic imperative.

The scale of this challenge extends beyond simple electricity consumption. The AI infrastructure carbon footprint encompasses everything from the concrete poured for facility foundations to the diesel generators providing backup power during grid outages. Major technology companies have watched their emissions climb despite ambitious sustainability commitments. Understanding this complete environmental impact requires examining both the operational emissions from running AI systems and the embodied carbon from building the infrastructure that houses them.

Why Does AI Infrastructure Generate Such High Carbon Emissions?

The computational requirements of artificial intelligence create energy demands that dwarf traditional data center operations. Modern GPU clusters powering AI workloads consume significantly more power per rack than conventional servers. This increase in power density translates directly into higher carbon emissions unless the facility runs on clean energy sources.

Training a single large language model like GPT-3 consumes approximately 1,287 megawatt-hours of electricity, enough to power 120 average homes for an entire year. A 2021 study by researchers at Google and UC Berkeley estimates that this training run generated roughly 552 tons of carbon dioxide emissions. These figures cover only the initial training phase; ongoing inference adds continuous energy consumption as millions of users interact with AI applications daily.
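The training figures above imply two derived quantities that are easy to check: the annual consumption per home behind the 120-home comparison, and the average carbon intensity of the electricity used. The sketch below uses only the numbers quoted in this section. The function names are illustrative, and treating "tons" as metric tons is an assumption.

```python
# Figures quoted in the text for GPT-3 training.
TRAINING_ENERGY_MWH = 1_287
TRAINING_EMISSIONS_T = 552  # assumption: metric tons of CO2
HOMES_POWERED = 120


def implied_home_use_mwh(energy_mwh: float, homes: int) -> float:
    """Annual electricity per home implied by the homes comparison."""
    return energy_mwh / homes


def implied_intensity_kg_per_kwh(emissions_t: float, energy_mwh: float) -> float:
    """Average carbon intensity; t/MWh is numerically equal to kg/kWh."""
    return emissions_t / energy_mwh


home_use = implied_home_use_mwh(TRAINING_ENERGY_MWH, HOMES_POWERED)
intensity = implied_intensity_kg_per_kwh(TRAINING_EMISSIONS_T, TRAINING_ENERGY_MWH)

print(f"Implied use per home: {home_use:.1f} MWh per year")
print(f"Implied carbon intensity: {intensity:.3f} kg CO2 per kWh")
```

The two quoted figures are consistent with each other at roughly 10.7 MWh per home per year and about 0.43 kg of CO2 per kilowatt-hour.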

The power requirements extend beyond the computing hardware itself. Cooling systems account for a substantial portion of total data center electricity consumption, with AI facilities requiring sophisticated thermal management to prevent expensive GPU hardware from overheating. Traditional air cooling cannot handle the extreme heat generation from high-density AI racks, forcing operators to deploy advanced liquid cooling systems that consume additional energy.

Grid infrastructure presents another significant challenge. Most regional power grids were designed decades ago for industrial and residential loads that vary predictably throughout the day. AI data centers draw sustained power at or near peak levels around the clock, which stresses transmission systems and often requires utilities to run fossil-fuel generators to absorb demand fluctuations and maintain grid stability.

| AI Infrastructure Component | Carbon Impact Source | Typical Contribution |
| --- | --- | --- |
| GPU Computing Operations | Electricity consumption during training and inference | Primary operational emissions source |
| Cooling Systems | Continuous thermal management energy use | Substantial portion of facility electricity |
| Backup Power Systems | Diesel generators during grid outages | Variable based on grid reliability |
| Construction Materials | Embodied carbon in steel, concrete, equipment | Significant upfront emissions |

How Does Construction Contribute to the AI Infrastructure Carbon Footprint?

While operational emissions receive most attention, the physical construction of data center facilities generates massive carbon footprints before a single AI model ever runs. The embodied emissions from steel, concrete, and IT hardware represent a substantial portion of a facility’s total lifetime environmental impact.

Manufacturing the GPUs that power AI operations creates its own environmental burden. Market research indicates the three major GPU producers shipped 3.85 million units to data centers in 2023, up from 2.67 million in 2022, with 2024 shipments expected to show even greater growth. Producing these advanced processors requires significantly more energy than fabricating simpler chips due to complex manufacturing processes.

The environmental implications extend to raw material extraction. Mining and processing the rare earth elements, silicon, and other materials used in GPU production often involves intensive procedures that generate substantial emissions throughout the supply chain. These impacts compound the carbon footprint of AI infrastructure long before any electricity flows through the finished equipment.

Strategic site selection and infrastructure planning decisions made during early development phases ultimately determine whether facilities can achieve sustainable operations. Organizations that fail to integrate renewable energy considerations from the outset often find themselves locked into carbon-intensive operations for decades.

What Are the Primary Emissions Sources in AI Data Centers?

Understanding the complete picture of the AI infrastructure carbon footprint requires examining emissions across multiple categories. Direct operational emissions from electricity consumption represent the most visible source, but comprehensive carbon accounting reveals a more complex reality.

Scope 1 Emissions: Direct Facility Operations

On-site fuel combustion from backup diesel generators and any natural gas used for facility heating or combined heat and power systems falls into this category. While typically representing a smaller portion of total emissions during normal operations, these sources can spike dramatically during extended grid outages or in regions with unreliable power infrastructure.

Scope 2 Emissions: Purchased Electricity

The carbon intensity of grid electricity varies dramatically by location and time of day. A data center in a coal-heavy grid generates far more emissions per kilowatt-hour than an identical facility powered by hydroelectric dams or renewable sources. Geographic distribution of AI servers creates notable variation in projected emissions based on local grid characteristics.
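To make the location effect concrete, a location-based Scope 2 estimate is simply purchased energy multiplied by the grid's carbon intensity. The sketch below is illustrative only: the facility consumption and both grid intensities are hypothetical placeholders, not measured values for any real grid.

```python
# Location-based Scope 2 estimate: purchased energy times grid carbon intensity.
# All numbers below are hypothetical placeholders, not measured values.


def scope2_emissions_t(energy_mwh: float, intensity_kg_per_kwh: float) -> float:
    """Return metric tons of CO2e; kg/kWh is numerically equal to t/MWh."""
    return energy_mwh * intensity_kg_per_kwh


ANNUAL_ENERGY_MWH = 100_000  # hypothetical facility consumption

GRID_INTENSITY = {  # hypothetical kg CO2e per kWh
    "coal_heavy_grid": 0.80,
    "hydro_heavy_grid": 0.03,
}

for grid, intensity in GRID_INTENSITY.items():
    tons = scope2_emissions_t(ANNUAL_ENERGY_MWH, intensity)
    print(f"{grid}: {tons:,.0f} t CO2e per year")
```

With these placeholder inputs, the same facility emits more than twenty times as much on the coal-heavy grid, which is why siting decisions dominate the Scope 2 baseline.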

Scope 3 Emissions: Supply Chain and Lifecycle Carbon

This category includes emissions from manufacturing computing equipment, transporting materials and products, employee commuting, business travel, and eventually disposing of or recycling equipment at end-of-life. These indirect emissions can equal or exceed direct operational emissions depending on facility design and procurement strategies.

Water consumption adds another environmental dimension to sustainable AI considerations. AI data centers require substantial water resources both for direct facility cooling and indirectly through the water consumed generating the electricity that powers operations, creating compound environmental impacts in water-stressed regions.

How Can Organizations Measure Their AI Infrastructure Carbon Footprint?

Accurate measurement forms the foundation for meaningful emissions reduction. Many organizations have historically relied on narrow metrics like Power Usage Effectiveness (PUE) that fail to capture the complete environmental impact of their operations. Recent regulatory developments and investor requirements are pushing toward more comprehensive measurement frameworks.

Emerging measurement frameworks take a more holistic approach to the AI infrastructure carbon footprint:

Life Cycle Assessment Methodology: This comprehensive analysis tracks emissions from raw material extraction through manufacturing, transportation, installation, operation, and eventual disposal. LCA reveals that for many facilities, construction and equipment manufacturing generate substantial cumulative emissions that must be considered alongside operational impacts.

Carbon Usage Effectiveness (CUE): This metric extends beyond simple energy efficiency to link power consumption directly with carbon emissions, measured in kilograms of CO2 equivalent per kilowatt-hour. CUE accounts for the carbon intensity of electricity sources rather than treating all power as environmentally equivalent.

Location-Based Accounting: This method applies the average emissions intensity of the local power grid where a facility operates, rather than crediting contractual instruments such as renewable energy credits. The result is a transparent baseline for comparing facilities and tracking progress toward sustainability goals without relying on questionable offset mechanisms.
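The relationship between PUE and CUE can be sketched in a few lines. Under The Green Grid's definitions, PUE is total facility energy divided by IT equipment energy, and CUE is total emissions from facility energy divided by IT equipment energy, so CUE equals the grid's carbon emission factor multiplied by PUE. The energy figures and grid intensity below are hypothetical, chosen only to show the arithmetic.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy per unit of IT energy."""
    return total_facility_kwh / it_equipment_kwh


def cue(total_emissions_kg: float, it_equipment_kwh: float) -> float:
    """Carbon Usage Effectiveness in kg CO2e per kWh of IT energy."""
    return total_emissions_kg / it_equipment_kwh


# Hypothetical annual figures for one facility.
IT_KWH = 1_000_000
FACILITY_KWH = 1_300_000
GRID_KG_PER_KWH = 0.40  # hypothetical location-based emission factor

emissions_kg = FACILITY_KWH * GRID_KG_PER_KWH

facility_pue = pue(FACILITY_KWH, IT_KWH)
facility_cue = cue(emissions_kg, IT_KWH)

print(f"PUE: {facility_pue:.2f}")
print(f"CUE: {facility_cue:.2f} kg CO2e per kWh of IT energy")
print(f"Emission factor x PUE: {GRID_KG_PER_KWH * facility_pue:.2f}")
```

Two facilities with identical PUE can therefore report very different CUE values if their grids differ in carbon intensity, which is the gap that PUE alone hides.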

Federal agencies are developing standardized reporting requirements for AI data centers covering their entire lifecycle. The Department of Energy and Environmental Protection Agency have initiated efforts to establish metrics for embodied carbon, water usage, and waste heat that will provide consistent frameworks for environmental performance.

What Role Do Renewable Energy Solutions Play in Reducing Emissions?

Transitioning away from fossil-fuel power represents the most impactful strategy for addressing operational emissions. Major technology companies have emerged as the largest corporate purchasers of renewable energy globally, driven by both sustainability commitments and practical needs for reliable, scalable power sources.

Solar and wind generation offer increasingly cost-competitive alternatives to grid electricity. Large-scale solar installations now deliver power at prices that match or undercut traditional generation while providing predictable long-term pricing. Modern solar farms incorporate advanced tracking systems that maximize energy production throughout the day, with battery storage systems addressing intermittency concerns that previously limited renewable adoption.

Strategic partnerships between energy developers and data center operators are creating new models for sustainable AI infrastructure. Rather than simply purchasing renewable energy credits, leading organizations are co-developing facilities that integrate renewable generation directly with computing infrastructure. These energy campus approaches provide both cost stability and genuine emissions reductions.

Geographic distribution strategies enhance renewable integration by spreading AI workloads across regions with diverse energy resources. Facilities in Texas can leverage abundant wind and solar capacity, while operations in the Pacific Northwest access hydroelectric power and favorable cooling conditions. This geographic diversification reduces concentration risk while optimizing both cost and sustainability metrics for the AI infrastructure carbon footprint.

However, renewable energy alone cannot solve all challenges. AI operations require 24/7 reliability that intermittent solar and wind generation cannot provide without substantial energy storage capacity. Battery systems, emerging long-duration storage technologies, and hybrid approaches combining multiple renewable sources with strategic grid connections will be essential for achieving truly sustainable AI infrastructure.
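One way to see the gap between intermittent generation and 24/7 demand is to compare a volume-based match (total generation against total load) with an hourly match (generation that is actually available when the load needs it). The sketch below assumes a flat load and a single-day solar profile. Both are hypothetical, and storage is deliberately left out to show the unmet hours.

```python
# Hypothetical one-day profiles in MWh per hour; not data from any real site.
LOAD = [10.0] * 24  # flat, around-the-clock AI load
SOLAR = [0.0] * 6 + [4, 10, 16, 22, 26, 28, 28, 26, 22, 16, 10, 4] + [0.0] * 6


def volumetric_match(generation: list[float], load: list[float]) -> float:
    """Share of load covered when only daily totals are compared (capped at 1)."""
    return min(sum(generation) / sum(load), 1.0)


def hourly_match(generation: list[float], load: list[float]) -> float:
    """Share of load covered hour by hour, with no storage to shift surplus."""
    matched = sum(min(g, l) for g, l in zip(generation, load))
    return matched / sum(load)


print(f"Volumetric match: {volumetric_match(SOLAR, LOAD):.0%}")
print(f"Hourly match:     {hourly_match(SOLAR, LOAD):.0%}")
```

In this example the daily totals suggest most of the load is covered, while the hourly view shows less than half is. The difference is the midday surplus that storage or other sources would have to shift into the night.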

5 Critical Strategies for Reducing the AI Infrastructure Carbon Footprint

Organizations serious about addressing their AI infrastructure carbon footprint must implement comprehensive strategies that tackle both operational and embodied emissions. These proven approaches provide pathways toward net-zero operations while maintaining the reliability and performance AI workloads demand.

1. Power-First Site Selection and Land Development

Traditional data center site selection prioritized proximity to population centers and existing telecommunications infrastructure. Sustainable AI development inverts this approach, beginning with power availability and renewable energy resources. Sites with abundant solar or wind potential, supportive utility partnerships, and available transmission capacity provide fundamental advantages for reducing the AI infrastructure carbon footprint.

Land preparation and entitlement processes significantly impact the embodied carbon of new facilities. Working with developers who understand renewable integration requirements from the initial planning stages prevents costly retrofits and ensures infrastructure can support clean energy systems. Strategic site selection considers grid interconnection capacity, availability of water resources for cooling, and regulatory environments that support sustainable development.

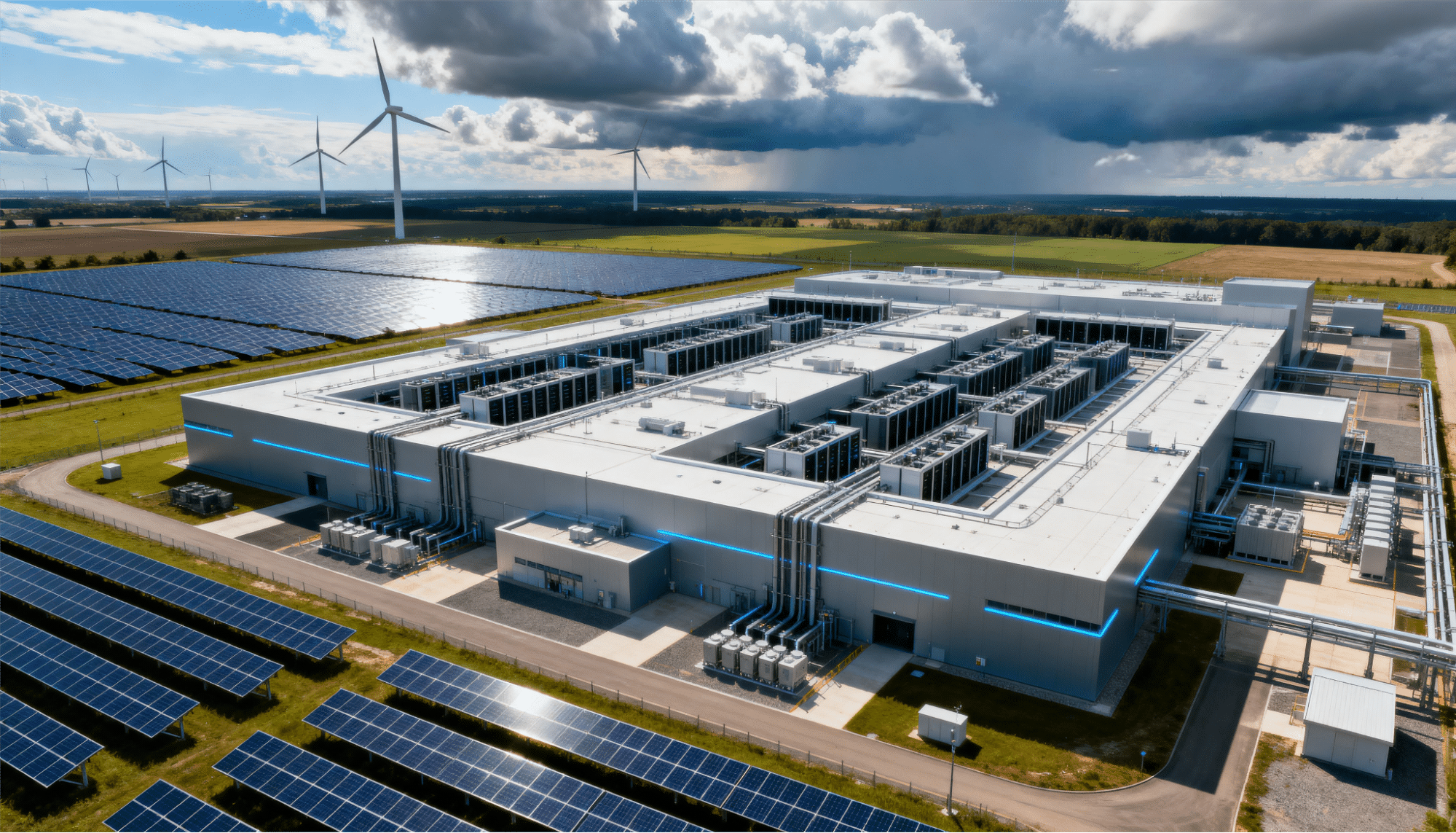

2. Integrated Renewable Energy Campus Development

Purpose-built facilities combining data centers with co-located solar arrays, wind generation, and battery storage systems represent the future of sustainable AI infrastructure. These integrated campuses can operate independently from the grid when needed while providing dedicated clean power that meets sustainability commitments without relying on questionable renewable energy credits.

Energy storage systems have evolved from supplementary technology to core infrastructure components. Large-scale batteries store excess renewable generation for use during peak demand periods, provide rapid response capabilities for maintaining power quality, and enable facilities to continue operations during grid maintenance or disruptions. Modern storage systems can support AI workloads for extended periods without diesel generator backup.

3. Advanced Energy Efficiency and Cooling Innovation

While renewable energy addresses the source of emissions, efficiency measures reduce the total power required and thereby minimize the AI infrastructure carbon footprint. Next-generation cooling technologies including liquid cooling and immersion systems handle extreme heat generation from high-density AI racks while consuming significantly less energy than traditional air cooling approaches.

Power management and intelligent workload scheduling allow AI operations to optimize processor utilization while maintaining performance. Machine learning algorithms can analyze facility operations in real time to improve energy efficiency, predict potential failures, and automatically adjust systems for optimal performance.

4. Sustainable Construction Materials and Methods

Material selection and construction methodology significantly impact facility emissions before operations begin. Some organizations have begun incorporating lower-carbon building materials, though supply chain limitations currently prevent widespread adoption. Standardized emissions measurements and disclosure requirements for key building materials will help drive innovation in sustainable construction practices.

Modular construction approaches reduce waste and enable more efficient manufacturing processes. Prefabricated components built in controlled factory environments typically generate fewer emissions than traditional on-site construction while offering faster deployment timelines. These methods also facilitate easier upgrades and modifications as AI infrastructure requirements evolve.

5. Comprehensive Measurement and Continuous Improvement

Organizations must move beyond narrow operational metrics to embrace full lifecycle carbon accounting. This transparency reveals the true environmental impact of infrastructure decisions and enables meaningful comparisons between different approaches to managing the AI infrastructure carbon footprint. Detailed tracking also identifies specific opportunities for emissions reduction that might otherwise remain hidden.

Establishing baseline measurements, setting reduction targets, and regularly monitoring progress creates accountability for sustainability goals. With Goldman Sachs Research projecting that data center power demand will increase 160% by 2030, organizations that implement comprehensive carbon management today position themselves to meet increasingly stringent regulatory requirements and investor expectations.

| Strategy | Primary Impact | Emissions Reduction Potential |
| --- | --- | --- |
| Power-First Site Selection | Enables renewable access | Determines long-term baseline |
| Integrated Energy Campus | Eliminates fossil dependency | Substantial renewable integration |
| Advanced Cooling Systems | Reduces operational energy | Significant cooling energy savings |
| Sustainable Construction | Lowers embodied emissions | Varies by material availability |
| Comprehensive Measurement | Addresses all emission sources | Enables targeted strategies |

What Regulatory and ESG Pressures Are Driving Sustainable Infrastructure?

Investors, regulators, and customers are demanding transparency and action on the AI infrastructure carbon footprint. The European Union’s AI Act will require large AI systems to report energy use, resource consumption, and other lifecycle impacts. International standards organizations are preparing sustainable AI standards for energy, water, and materials accounting.

In the United States, recent executive actions directed the Department of Energy to draft reporting requirements for AI data centers covering their entire lifecycle from material extraction through retirement. These requirements include metrics for embodied carbon, water usage, and waste heat that will force organizations to measure and disclose impacts they previously could ignore.

Financial markets increasingly price climate risk into investment decisions. ESG funds controlling trillions in assets now scrutinize the environmental performance of technology companies, with poor carbon performance directly impacting stock valuations and access to capital. Organizations that cannot demonstrate credible pathways to sustainable operations face higher borrowing costs and potential divestment by major institutional investors.

Corporate customers are also imposing requirements on their AI service providers. Enterprise agreements increasingly include sustainability clauses requiring specific renewable energy percentages or carbon intensity targets. Companies with ambitious climate commitments cannot meet their goals while relying on AI infrastructure powered by fossil fuels, creating market pressure for sustainable data center solutions throughout the supply chain.

Public scrutiny has intensified as AI’s environmental impact becomes more visible. Communities near proposed data center sites increasingly organize opposition based on concerns about power grid stress, water consumption, and local emissions. Projects that fail to demonstrate genuine sustainability commitments face permitting delays, regulatory challenges, and reputational damage that can derail development plans.

How Will the AI Infrastructure Carbon Footprint Evolve Through 2030?

Current projections paint a challenging picture for AI sustainability. Goldman Sachs Research forecasts that data center power demand will grow 160% by 2030. Morgan Stanley analysis indicates that annual data center emissions will rise from approximately 200 million metric tons in 2024 to 600 million metric tons by 2030, a tripling driven primarily by generative AI expansion.
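These projections are easier to compare once converted into growth multiples and implied annual rates. The sketch below uses only the figures quoted above. The helper names are illustrative, and the annual rate assumes smooth compound growth between 2024 and 2030, which is a simplification.

```python
def multiple_from_percent_increase(percent: float) -> float:
    """A 160% increase means the end value is 2.6 times the start value."""
    return 1 + percent / 100


def implied_annual_growth(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that takes start to end over the period."""
    return (end / start) ** (1 / years) - 1


power_multiple = multiple_from_percent_increase(160)
emissions_rate = implied_annual_growth(200, 600, 2030 - 2024)

print(f"Power demand multiple by 2030: {power_multiple:.1f}x")
print(f"Implied emissions growth: {emissions_rate:.1%} per year")
```

A tripling over six years works out to roughly 20% compound growth per year, which gives a sense of how quickly mitigation would need to scale to keep pace.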

The outcome depends heavily on decisions organizations make today about infrastructure investment and energy procurement. The IEA projects that renewable generation will grow by over 450 terawatt-hours to meet data center demand through 2035, building on competitive economics and procurement strategies of leading technology companies. However, natural gas generation is also expected to expand significantly, particularly in markets where renewable development faces regulatory or infrastructure constraints.

Technology improvements offer reasons for optimism. Next-generation AI chips promise better performance per watt, with efficiency gains potentially moderating overall power consumption growth. More efficient algorithms and model compression techniques can reduce the computational resources required for training and inference. These advances may help moderate the AI infrastructure carbon footprint even as adoption accelerates.

Grid decarbonization rates will significantly influence overall emissions trajectories. Regions investing heavily in renewable generation and transmission infrastructure can support sustainable AI growth, while areas relying on aging fossil-fuel plants face worsening carbon intensity. This geographic variation will increasingly influence where organizations choose to locate new infrastructure investments.

The industry stands at an inflection point. Companies that establish comprehensive clean energy partnerships and integrated renewable infrastructure today will gain decisive competitive advantages in operational costs and carbon compliance as AI adoption accelerates. Those who delay risk facing capacity constraints, regulatory penalties, and reputational damage that could significantly limit their participation in the AI economy.

Frequently Asked Questions

What is the current AI infrastructure carbon footprint globally?

The IEA estimates that data centers currently account for approximately 1.5% of global electricity consumption. Morgan Stanley projects that annual emissions from data centers will rise from approximately 200 million metric tons in 2024 to 600 million metric tons by 2030. AI operations represent a rapidly growing portion of this total, with AI workloads expanding from an estimated 5-15% of data center consumption today to potentially 35-50% by decade’s end.

How does the carbon footprint of AI compare to other industries?

Data centers are becoming an increasingly significant contributor to global emissions. The IEA projects that data center emissions will reach 1-1.4% of total global CO2 emissions by 2030. While many sectors are expected to decarbonize, data centers are among the few, alongside road transport and aviation, where emissions are projected to increase substantially over the next decade.

Can AI infrastructure achieve net-zero emissions by 2030?

Achieving net-zero by 2030 is technically possible but requires substantial investment in renewable energy, energy storage, and efficiency improvements. Organizations must integrate clean energy strategies from the initial site selection and design phases rather than retrofitting existing facilities. Integrated renewable energy campus development offers the most viable pathway toward genuine net-zero operations without reliance on questionable offset mechanisms.

What percentage of new data center power demand will come from renewable sources?

The IEA projects that renewables will meet approximately half of the growth in data center electricity demand through 2035, with generation expanding by over 450 terawatt-hours. However, natural gas and other dispatchable sources will also play significant roles, particularly in regions where renewable development faces infrastructure or regulatory constraints. The actual renewable penetration will depend heavily on policy support and infrastructure investment decisions made over the next several years.

How do location decisions impact the AI infrastructure carbon footprint?

Geographic location dramatically affects carbon emissions due to variations in grid electricity sources, climate conditions affecting cooling requirements, and availability of renewable energy resources. Facilities in regions with high renewable penetration and favorable cooling climates can operate with substantially lower carbon intensity than those relying on fossil-heavy grids in hot climates. Strategic site selection represents one of the most impactful decisions organizations can make for long-term sustainability.

Ready to Build Sustainable AI Infrastructure?

The challenge of the AI infrastructure carbon footprint demands immediate action and strategic planning. As artificial intelligence reshapes industries and society, the companies that successfully integrate renewable energy solutions with digital infrastructure will define the sustainable future of computing. Organizations cannot afford to treat energy as a simple utility service while AI workloads push data centers toward unprecedented power consumption levels.

Hanwha Data Centers brings together the technical expertise, financial strength, and renewable energy focus required for next-generation sustainable infrastructure development. Our integrated approach combines strategic land selection, renewable generation capabilities, and comprehensive infrastructure planning to deliver AI-ready facilities that meet both performance and sustainability requirements. Contact us today to discover how our energy campus solutions can power your AI ambitions while minimizing your carbon footprint.